I have an energy spectrum from a cosmic ray detector. The spectrum follows an exponential curve but it will have broad (and maybe very slight) lumps in it. The data, obviously, contains an element of noise.

I'm trying to smooth out the data and then plot its gradient. So far I've been using the scipy sline function to smooth it and then the np.gradient().

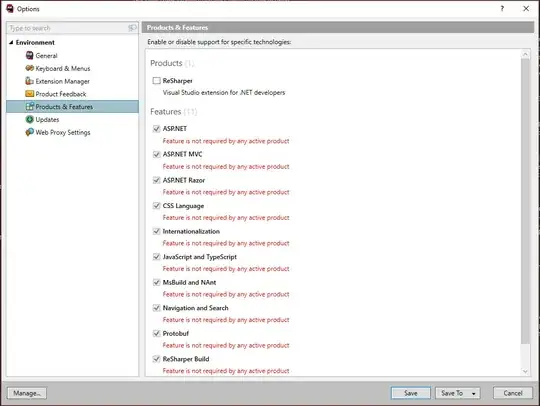

As you can see from the picture, the gradient function's method is to find the differences between each point, and it doesn't show the lumps very clearly.

I basically need a smooth gradient graph. Any help would be amazing!

I've tried 2 spline methods:

def smooth_data(y,x,factor):

print "smoothing data by interpolation..."

xnew=np.linspace(min(x),max(x),factor*len(x))

smoothy=spline(x,y,xnew)

return smoothy,xnew

def smooth2_data(y,x,factor):

xnew=np.linspace(min(x),max(x),factor*len(x))

f=interpolate.UnivariateSpline(x,y)

g=interpolate.interp1d(x,y)

return g(xnew),xnew

edit: Tried numerical differentiation:

def smooth_data(y,x,factor):

print "smoothing data by interpolation..."

xnew=np.linspace(min(x),max(x),factor*len(x))

smoothy=spline(x,y,xnew)

return smoothy,xnew

def minim(u,f,k):

""""functional to be minimised to find optimum u. f is original, u is approx"""

integral1=abs(np.gradient(u))

part1=simps(integral1)

part2=simps(u)

integral2=abs(part2-f)**2.

part3=simps(integral2)

F=k*part1+part3

return F

def fit(data_x,data_y,denoising,smooth_fac):

smy,xnew=smooth_data(data_y,data_x,smooth_fac)

y0,xnnew=smooth_data(smy,xnew,1./smooth_fac)

y0=list(y0)

data_y=list(data_y)

data_fit=fmin(minim, y0, args=(data_y,denoising), maxiter=1000, maxfun=1000)

return data_fit

However, it just returns the same graph again!