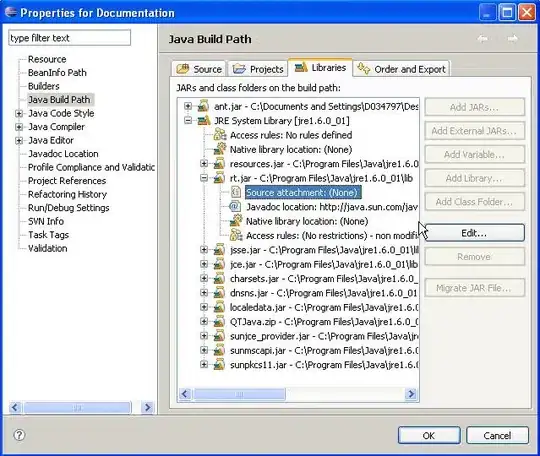

Front face on the left; right face on the right

Back face on the left; left face on the right

After some debugging, I've come to a couple of conclusions:

Face culling (turned off in the photos) appears to make it worse.

Depth Buffering (turned on in the photos) appears to do little to help.

For face culling, I tried both glFrontFace( GL_CW ) and glFrontFace( GL_CCW ), each in combination with glCullFace( GL_BACK ).
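For context on what these calls toggle: OpenGL decides whether a triangle is front- or back-facing from the winding of its projected vertices, and glFrontFace selects which winding counts as the front. Below is a minimal sketch of that winding test, assuming 2D points that are already projected to the screen with x to the right and y up. `Vec2`, `signedArea2`, and `isCounterClockwise` are hypothetical helper names for illustration, not part of OpenGL or of the code in this post.

```cpp
// Hypothetical 2D point type used only for this illustration.
struct Vec2 { float x, y; };

// Twice the signed area of triangle (a, b, c), with x to the right and y up.
// The sign flips when any two vertices are swapped.
float signedArea2( Vec2 a, Vec2 b, Vec2 c )
{
    return ( b.x - a.x ) * ( c.y - a.y ) - ( c.x - a.x ) * ( b.y - a.y );
}

// A positive signed area means the vertices run counter-clockwise.
bool isCounterClockwise( Vec2 a, Vec2 b, Vec2 c )
{
    return signedArea2( a, b, c ) > 0.0f;
}
```

With glFrontFace( GL_CCW ) and glCullFace( GL_BACK ), triangles for which a test like this is false on screen are the ones that get discarded, so two triangles of the same face with opposite winding would not both survive culling.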

Update

Here is the result from the code snippet offered by Need4Sleep:

(note: the GL_LESS depth comparison didn't appear to change anything on its own)

Front face on left; right face on right - face culling turned on

Code Overview

Each vertex is stored as a position followed by a color in a struct, simdColorVert4_t. Both the position and the color are arrays of 4 floats:

    typedef float simdVec4_t[ 4 ];

    typedef struct simdColorVert4_s
    {
        simdVec4_t position;
        simdVec4_t color;
    }
    simdColorVert4_t;
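Since this struct is later handed to OpenGL as interleaved data with a stride of sizeof( float ) * 8, it can help to pin the layout down at compile time. The following is a small sketch, assuming a C++11 compiler. The static_asserts are an addition for illustration and are not part of the original code.

```cpp
#include <cstddef> // offsetof

typedef float simdVec4_t[ 4 ];

typedef struct simdColorVert4_s
{
    simdVec4_t position;
    simdVec4_t color;
}
simdColorVert4_t;

// The attribute setup assumes 8 tightly packed floats per vertex.
static_assert( sizeof( simdColorVert4_t ) == sizeof( float ) * 8,
               "vertex is expected to be 8 floats with no padding" );

// Position starts the struct; color follows directly after 4 floats.
static_assert( offsetof( simdColorVert4_t, position ) == 0,
               "position is expected at offset 0" );
static_assert( offsetof( simdColorVert4_t, color ) == sizeof( float ) * 4,
               "color is expected right after position" );
```

If any of these assumptions were wrong, the build would fail instead of the cube rendering with shifted attributes.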

ColorCube class

The constructor creates its respective program. Then the vertex and index data are specified, and bound to their respective buffers:

(shader program creation omitted for brevity)

    const float S = 0.5f;

    const simdColorVert4_t vertices[] =
    {
        /*! Positions */          /*! Colors */                     /*! Indices */
        { {  S,  S,  S, 1.0f }, { 0.0f, 0.0f, 1.0f, 1.0f } }, //! 0
        { { -S,  S,  S, 1.0f }, { 1.0f, 0.0f, 0.0f, 1.0f } }, //! 1
        { { -S, -S,  S, 1.0f }, { 0.0f, 1.0f, 0.0f, 1.0f } }, //! 2
        { {  S, -S,  S, 1.0f }, { 1.0f, 1.0f, 0.0f, 1.0f } }, //! 3
        { {  S,  S, -S, 1.0f }, { 1.0f, 1.0f, 1.0f, 1.0f } }, //! 4
        { { -S,  S, -S, 1.0f }, { 1.0f, 0.0f, 0.0f, 1.0f } }, //! 5
        { { -S, -S, -S, 1.0f }, { 1.0f, 0.0f, 1.0f, 1.0f } }, //! 6
        { {  S, -S, -S, 1.0f }, { 0.0f, 0.0f, 1.0f, 1.0f } }  //! 7
    };

    const GLubyte indices[] =
    {
        1, 0, 2, 2, 0, 3, //! Front Face
        3, 6, 4, 4, 0, 3, //! Right Face
        3, 6, 2, 2, 6, 7, //! Bottom Face
        7, 6, 4, 4, 5, 7, //! Back Face
        7, 5, 2, 2, 1, 5, //! Left Face
        5, 1, 4, 4, 0, 1, //! Top Face
    };
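One way to sanity-check an index list like this outside of OpenGL is to test each face geometrically. The sketch below copies the positions and indices from above and checks two things per face: that all six indices refer to corners lying in that face's plane, and that the two triangles share the face's diagonal, since otherwise they overlap and leave part of the quad uncovered. `Vec3`, `FacePlane`, `FaceStatus`, and `checkFace` are hypothetical names introduced for illustration. The face planes assume the usual convention that front is +Z, right is +X, and top is +Y, which the post does not state explicitly.

```cpp
#include <array>
#include <cstddef>

struct Vec3 { float x, y, z; };

constexpr float S = 0.5f;

// Corner positions copied from the vertices array (w and color dropped).
const std::array<Vec3, 8> kPositions = {{
    {  S,  S,  S }, { -S,  S,  S }, { -S, -S,  S }, {  S, -S,  S },
    {  S,  S, -S }, { -S,  S, -S }, { -S, -S, -S }, {  S, -S, -S }
}};

// Index list copied verbatim from above, six indices per face.
const std::array<unsigned char, 36> kIndices = {{
    1, 0, 2, 2, 0, 3, // Front
    3, 6, 4, 4, 0, 3, // Right
    3, 6, 2, 2, 6, 7, // Bottom
    7, 6, 4, 4, 5, 7, // Back
    7, 5, 2, 2, 1, 5, // Left
    5, 1, 4, 4, 0, 1  // Top
}};

// Assumed plane of each face: axis (0 = x, 1 = y, 2 = z) and the
// coordinate value that all of the face's corners share.
struct FacePlane { int axis; float value; };
const std::array<FacePlane, 6> kFaces = {{
    { 2,  S }, { 0,  S }, { 1, -S }, { 2, -S }, { 0, -S }, { 1,  S }
}};

enum class FaceStatus { Ok, StrayVertex, OverlappingTriangles };

static float component( const Vec3& v, int axis )
{
    return axis == 0 ? v.x : ( axis == 1 ? v.y : v.z );
}

static float distanceSquared( const Vec3& a, const Vec3& b )
{
    const float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return dx * dx + dy * dy + dz * dz;
}

// Checks one face (0..5, in the order used by kIndices).
FaceStatus checkFace( std::size_t face )
{
    const unsigned char* idx = &kIndices[ face * 6 ];

    // 1. Every referenced corner must lie in the face's plane.
    for ( std::size_t i = 0; i < 6; ++i )
        if ( component( kPositions[ idx[ i ] ], kFaces[ face ].axis ) != kFaces[ face ].value )
            return FaceStatus::StrayVertex;

    // 2. Collect the corners used by both triangles of the face.
    unsigned char shared[ 9 ];
    std::size_t sharedCount = 0;
    for ( std::size_t i = 0; i < 3; ++i )
        for ( std::size_t j = 3; j < 6; ++j )
            if ( idx[ i ] == idx[ j ] )
                shared[ sharedCount++ ] = idx[ i ];

    // The shared pair must be the quad's diagonal (length^2 = 2 * edge^2).
    const float edge = 2.0f * S;
    const float diagonalSquared = 2.0f * edge * edge;
    if ( sharedCount != 2 ||
         distanceSquared( kPositions[ shared[ 0 ] ], kPositions[ shared[ 1 ] ] ) != diagonalSquared )
        return FaceStatus::OverlappingTriangles;

    return FaceStatus::Ok;
}
```

Calling checkFace( 0 ) through checkFace( 5 ) gives one status per face in the order front, right, bottom, back, left, top. The exact float comparisons are only safe here because every coordinate is exactly plus or minus 0.5; a general version would compare with a tolerance. The sketch does not check winding order.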

    //! The prefix BI_* denotes an enum, standing for "Buffer Index"
    {
        glBindBuffer( GL_ARRAY_BUFFER, mBuffers[ BI_ARRAY_BUFFER ] );
        glBufferData( GL_ARRAY_BUFFER, sizeof( vertices ), vertices, GL_DYNAMIC_DRAW );
        glBindBuffer( GL_ARRAY_BUFFER, 0 );

        glBindBuffer( GL_ELEMENT_ARRAY_BUFFER, mBuffers[ BI_ELEMENT_BUFFER ] );
        glBufferData( GL_ELEMENT_ARRAY_BUFFER, sizeof( indices ), indices, GL_DYNAMIC_DRAW );
        glBindBuffer( GL_ELEMENT_ARRAY_BUFFER, 0 );
    }

From there (still in the constructor), the vertex array is created and bound, along with its respective attribute and buffer data:

    glBindVertexArray( mVertexArray );

    glBindBuffer( GL_ARRAY_BUFFER, mBuffers[ BI_ARRAY_BUFFER ] );

    //! Attribute 0 is the position, attribute 1 the color; both are 4 floats,
    //! interleaved in the same buffer with a stride of 8 floats
    glEnableVertexAttribArray( 0 );
    glEnableVertexAttribArray( 1 );

    glVertexAttribPointer( 0, 4, GL_FLOAT, GL_FALSE, sizeof( float ) * 8, ( void* ) offsetof( simdColorVert4_t, position ) );
    glVertexAttribPointer( 1, 4, GL_FLOAT, GL_FALSE, sizeof( float ) * 8, ( void* ) offsetof( simdColorVert4_t, color ) );

    glBindBuffer( GL_ELEMENT_ARRAY_BUFFER, mBuffers[ BI_ELEMENT_BUFFER ] );

    glBindVertexArray( 0 );

Misc

Before the cube is initialized, this function is called:

    void MainScene::setupGL( void )
    {
        glEnable( GL_DEPTH_TEST );
        glDepthFunc( GL_LEQUAL );
        glDepthRange( 0.0f, 1.0f );
        glClearDepth( 1.0f );

        int width, height;
        gvGetWindowSize( &width, &height );

        glViewport( 0, 0, width, height );
    }

And the depth buffer is cleared before the mCube->draw(...) function is called.

I'm clearly doing something wrong here, but I'm not sure what it could be. Toggling back-face culling and switching the front-face winding order between counter-clockwise and clockwise has only made things worse. Any ideas?