I want to use numpy.polyfit for physical calculations, therefore I need the magnitude of the error.

- 5,990

- 6

- 39

- 79

- 6,624

- 6

- 36

- 57

2 Answers

If you specify full=True in your call to polyfit, it will include extra information:

>>> x = np.arange(100)

>>> y = x**2 + 3*x + 5 + np.random.rand(100)

>>> np.polyfit(x, y, 2)

array([ 0.99995888, 3.00221219, 5.56776641])

>>> np.polyfit(x, y, 2, full=True)

(array([ 0.99995888, 3.00221219, 5.56776641]), # coefficients

array([ 7.19260721]), # residuals

3, # rank

array([ 11.87708199, 3.5299267 , 0.52876389]), # singular values

2.2204460492503131e-14) # conditioning threshold

The residual value returned is the sum of the squares of the fit errors, not sure if this is what you are after:

>>> np.sum((np.polyval(np.polyfit(x, y, 2), x) - y)**2)

7.1926072073491056

In version 1.7 there is also a cov keyword that will return the covariance matrix for your coefficients, which you could use to calculate the uncertainty of the fit coefficients themselves.

- 65,696

- 17

- 124

- 159

-

6do you know if np.polyfit uses TLS (Total Least Squares also known as Orthogonal Least Squares), or OLS (Ordinary Least Squares) to find the best fit? – Oliver Angelil Oct 13 '16 at 19:57

-

`np.polyfit` uses the Ordinary least Squares algorithm using `np.linalg.lstsq` similar to `scipy.linalg.lstsq`. `scipy.odr` implements the Orthogonal Least Squares algorithm, or more precisely the Orthogonal Distance Regression. – Cibin Joseph Jan 24 '21 at 08:56

As you can see in the documentation:

Returns

-------

p : ndarray, shape (M,) or (M, K)

Polynomial coefficients, highest power first.

If `y` was 2-D, the coefficients for `k`-th data set are in ``p[:,k]``.

residuals, rank, singular_values, rcond : present only if `full` = True

Residuals of the least-squares fit, the effective rank of the scaled

Vandermonde coefficient matrix, its singular values, and the specified

value of `rcond`. For more details, see `linalg.lstsq`.

Which means that if you can do a fit and get the residuals as:

import numpy as np

x = np.arange(10)

y = x**2 -3*x + np.random.random(10)

p, res, _, _, _ = numpy.polyfit(x, y, deg, full=True)

Then, the p are your fit parameters, and the res will be the residuals, as described above. The _'s are because you don't need to save the last three parameters, so you can just save them in the variable _ which you won't use. This is a convention and is not required.

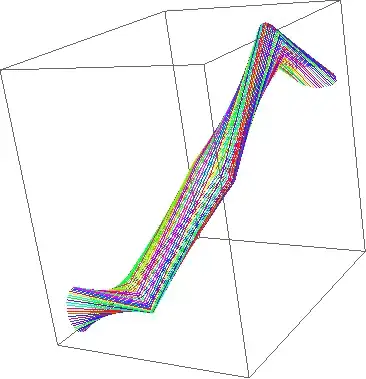

@Jaime's answer explains what the residual means. Another thing you can do is look at those squared deviations as a function (the sum of which is res). This is particularly helpful to see a trend that didn't fit sufficiently. res can be large because of statistical noise, or possibly systematic poor fitting, for example:

x = np.arange(100)

y = 1000*np.sqrt(x) + x**2 - 10*x + 500*np.random.random(100) - 250

p = np.polyfit(x,y,2) # insufficient degree to include sqrt

yfit = np.polyval(p,x)

figure()

plot(x,y, label='data')

plot(x,yfit, label='fit')

plot(x,yfit-y, label='var')

So in the figure, note the bad fit near x = 0:

- 45,161

- 17

- 118

- 134