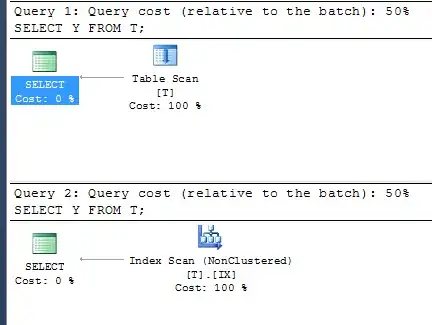

I want to downsample an input texture from 800x600 to one quarter of its size in each dimension (200x150 pixels). When I do that, only a small part of the image shows up in the output. It seems that the fragment shader doesn't downsample the whole texture. The following shaders are part of a depth-of-field effect.

Vertex shader:

    #version 330

    uniform UVertFrameBuffer
    {
        mat4 m_ScreenMatrix;
    };

    uniform UDofInvertedScreenSize
    {
        vec2 m_InvertedScreenSize;
    };

    // -----------------------------------------------------------------------------
    // in variables
    // -----------------------------------------------------------------------------
    layout(location = 0) in vec3 VertexPosition;

    // -----------------------------------------------------------------------------
    // out variables
    // -----------------------------------------------------------------------------
    struct SPixelCoords
    {
        vec2 tcColor0;
        vec2 tcColor1;
    };

    out SPixelCoords vs_PixelCoords;

    // -----------------------------------------------------------------------------
    // Program
    // -----------------------------------------------------------------------------
    void main()
    {
        vec4 Position = vec4(VertexPosition.xy, 0.0f, 1.0f);
        vec4 PositionInClipSpace = m_ScreenMatrix * Position;
        vec2 ScreenCoords = VertexPosition.xy;

        // -----------------------------------------------------------------------------
        vs_PixelCoords.tcColor0 = ScreenCoords + vec2(-1.0f, -1.0f) * m_InvertedScreenSize;
        vs_PixelCoords.tcColor1 = ScreenCoords + vec2(+1.0f, -1.0f) * m_InvertedScreenSize;

        // -----------------------------------------------------------------------------
        gl_Position = PositionInClipSpace;
    }

Fragment shader:

    #version 330

    uniform sampler2D g_ColorTex;
    uniform sampler2D g_DepthTex;

    uniform UDofDownBuffer
    {
        vec2 m_DofNear;
        vec2 m_DofRowDelta;
    };

    // -----------------------------------------------------------------------------
    // Inputs per vertex
    // -----------------------------------------------------------------------------
    struct SPixelCoords
    {
        vec2 tcColor0;
        vec2 tcColor1;
    };

    in SPixelCoords vs_PixelCoords;

    // -----------------------------------------------------------------------------
    // Output to graphics card
    // -----------------------------------------------------------------------------
    layout (location = 0) out vec4 FragColor;

    // -----------------------------------------------------------------------------
    // Program
    // -----------------------------------------------------------------------------
    void main()
    {
        // Initialize variables
        vec3 Color;
        float MaxCoc;
        vec4 Depth;
        vec4 CurCoc;
        vec4 Coc;
        vec2 RowOfs[4];

        // Calculate row offset
        RowOfs[0] = vec2(0.0f);
        RowOfs[1] = m_DofRowDelta.xy;
        RowOfs[2] = m_DofRowDelta.xy * 2.0f;
        RowOfs[3] = m_DofRowDelta.xy * 3.0f;

        // Bilinear filtering to average 4 color samples
        Color = vec3(0.0f);
        Color += texture(g_ColorTex, vs_PixelCoords.tcColor0.xy + RowOfs[0]).rgb;
        Color += texture(g_ColorTex, vs_PixelCoords.tcColor1.xy + RowOfs[0]).rgb;
        Color += texture(g_ColorTex, vs_PixelCoords.tcColor0.xy + RowOfs[2]).rgb;
        Color += texture(g_ColorTex, vs_PixelCoords.tcColor1.xy + RowOfs[2]).rgb;
        Color /= 4.0f;

        // Calculate CoC
        ...

        // Calculate fragment color
        FragColor = vec4(Color, MaxCoc);
    }
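For comparison, here is a minimal CPU sketch of what a 4x downsample is usually expected to produce: each output pixel is the average of a 4x4 block of input pixels. This is only a reference under stated assumptions, not the shader's exact sampling pattern. DownsampleBy4 is a hypothetical helper, and it assumes a single-channel image stored row by row whose width and height are multiples of 4.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical CPU reference: average each 4x4 block of a single-channel,
// row-major image. Assumes width and height are multiples of 4.
std::vector<float> DownsampleBy4(const std::vector<float>& src, int width, int height)
{
    assert(width > 0 && height > 0);
    assert(width % 4 == 0 && height % 4 == 0);
    assert(src.size() == static_cast<std::size_t>(width) * static_cast<std::size_t>(height));

    const int outWidth  = width / 4;
    const int outHeight = height / 4;
    std::vector<float> dst(static_cast<std::size_t>(outWidth) * static_cast<std::size_t>(outHeight), 0.0f);

    for (int oy = 0; oy < outHeight; ++oy)
    {
        for (int ox = 0; ox < outWidth; ++ox)
        {
            // Sum the 16 source pixels that fall into this output pixel.
            float sum = 0.0f;
            for (int dy = 0; dy < 4; ++dy)
            {
                for (int dx = 0; dx < 4; ++dx)
                {
                    const std::size_t row = static_cast<std::size_t>(oy * 4 + dy);
                    const std::size_t col = static_cast<std::size_t>(ox * 4 + dx);
                    sum += src[row * static_cast<std::size_t>(width) + col];
                }
            }
            dst[static_cast<std::size_t>(oy) * static_cast<std::size_t>(outWidth) + static_cast<std::size_t>(ox)] = sum / 16.0f;
        }
    }
    return dst;
}
```

For an 800x600 input this returns 200x150 values, and every output pixel draws on a different 4x4 block, so the whole source image contributes to the result.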

The input texture is 800x600 pixels and the output texture is 200x150 pixels. For m_InvertedScreenSize I use (1/800, 1/600). Is that right?
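To make the numbers concrete, here is a small C++ sketch of the same arithmetic the vertex shader performs. Vec2, InvertedSize and OffsetByTexels are hypothetical names used only for illustration; the sketch assumes texture coordinates are normalized to [0, 1].

```cpp
#include <cassert>

struct Vec2 { float x, y; };

// Size of one texel in normalized [0, 1] texture coordinates.
Vec2 InvertedSize(int width, int height)
{
    assert(width > 0 && height > 0);
    return { 1.0f / static_cast<float>(width), 1.0f / static_cast<float>(height) };
}

// Mirrors the vertex shader: shift a coordinate by a number of texels.
Vec2 OffsetByTexels(Vec2 coord, Vec2 texels, Vec2 invSize)
{
    return { coord.x + texels.x * invSize.x, coord.y + texels.y * invSize.y };
}
```

For 800x600, InvertedSize gives roughly (0.00125, 0.00167), which is the size of one texel of the source texture. With these values, tcColor0 and tcColor1 lie one source texel to either side of ScreenCoords in x and one texel away in -y.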

I upload two triangles that form a unit quad representing the screen in OpenGL:

    float QuadVertices[][3] = {
        { 0.0f, 1.0f, 0.0f, },
        { 1.0f, 1.0f, 0.0f, },
        { 1.0f, 0.0f, 0.0f, },
        { 0.0f, 0.0f, 0.0f, },
    };

    unsigned int QuadIndices[][3] = {
        { 0, 1, 2, },
        { 0, 2, 3, },
    };

My screen matrix transforms these vertices into clip space using an orthographic projection:

    Position(0.0f, 0.0f, 1.0f);
    Target(0.0f, 0.0f, 0.0f);
    Up(0.0f, 1.0f, 0.0f);

    LookAt(Position, Target, Up);
    SetOrthographic(0.0f, 1.0f, 0.0f, 1.0f, -1.0f, 1.0f);
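As a sanity check on this setup, here is a minimal C++ sketch of how an orthographic projection with bounds 0 and 1 maps the quad's x and y coordinates to normalized device coordinates. It assumes SetOrthographic follows the usual glOrtho argument order (left, right, bottom, top, near, far) and that the LookAt above leaves x and y unchanged. OrthoToNdc is a hypothetical helper, not part of my code.

```cpp
#include <cassert>

// Hypothetical helper: maps a coordinate from [lo, hi] to NDC [-1, 1].
// This is the per-axis mapping of a glOrtho-style projection (assumed convention).
float OrthoToNdc(float value, float lo, float hi)
{
    assert(hi != lo);
    return 2.0f * (value - lo) / (hi - lo) - 1.0f;
}
```

With bounds 0 and 1, the quad corners at 0 and 1 map to -1 and +1 on both axes, so the quad spans the full viewport that is current at draw time.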

The following images show the input texture and the results. The first one is the original image without downsampling. The second is the actual downsampled texture. The third is a downsampled texture rendered with ScreenCoords *= 4.0f; added to the vertex shader.