I am modifying the CUDA Video Encoder (NVCUVENC) encoding sample from the SDK samples pack so that the input data comes not from external YUV files (as in the sample) but from a cudaArray that is filled from a texture.

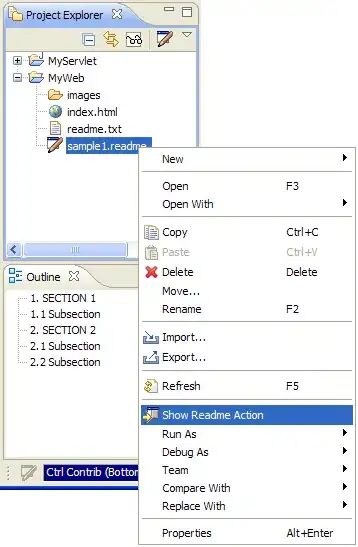

So the key API method that encodes the frame is:

int NVENCAPI NVEncodeFrame(NVEncoder hNVEncoder, NVVE_EncodeFrameParams *pFrmIn, unsigned long flag, void *pData);

If I understand correctly, the parameter:

CUdeviceptr dptr_VideoFrame

is supposed to pass the data to encode. But I really haven't understood how to connect it with texture data on the GPU. The sample source code is very vague about this, because it works with YUV file input read on the CPU.

For example, in main.cpp, lines 555-560, there is the following block:

// If dptrVideoFrame is NULL, then we assume that frames come from system memory, otherwise it comes from GPU memory

// VideoEncoder.cpp, EncodeFrame() will automatically copy it to GPU Device memory, if GPU device input is specified

if (pCudaEncoder->EncodeFrame(efparams, dptrVideoFrame, cuCtxLock) == false)

{

printf("\nEncodeFrame() failed to encode frame\n");

}

So, from the comment, it seems that dptrVideoFrame should be filled with YUV data that resides on the device in order to encode the frame. But nowhere is it explained how to do that.

UPDATE:

I would like to share some findings. First, I managed to encode data from a framebuffer texture. The problem now is that the output video is a mess.

That is the desired result:

Here is what I do:

On the OpenGL side I have two custom FBOs. The scene is rendered normally into the first one. Then the texture from the first FBO is used to render a screen quad into the second FBO, doing the RGB -> YUV conversion in the fragment shader.
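For reference, the per-pixel math of such an RGB -> YUV pass can be sketched on the CPU as below. This is only an illustration: it assumes BT.601 studio-range coefficients, which may not be the matrix the actual fragment shader uses, and `Yuv8`, `clampToByte`, and `rgbToYuvBt601` are hypothetical names that do not come from the SDK sample.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper type for one converted pixel (not part of the SDK sample).
struct Yuv8 {
    std::uint8_t y, u, v;
};

// Round to nearest and clamp into the 0..255 range of an 8-bit channel.
static std::uint8_t clampToByte(double x) {
    assert(std::isfinite(x));
    long r = std::lround(x);
    if (r < 0) r = 0;
    if (r > 255) r = 255;
    return static_cast<std::uint8_t>(r);
}

// ASSUMPTION: BT.601 studio-range ("video range") coefficients.
// Y lands in 16..235 and U/V in 16..240, centered on 128.
Yuv8 rgbToYuvBt601(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    Yuv8 out;
    out.y = clampToByte(16.0 + 0.257 * r + 0.504 * g + 0.098 * b);
    out.u = clampToByte(128.0 - 0.148 * r - 0.291 * g + 0.439 * b);
    out.v = clampToByte(128.0 + 0.439 * r - 0.368 * g - 0.071 * b);
    return out;
}
```

Comparing a few known colors (black, white, pure red) against the values read back from the second FBO is one way to check whether the shader output is what the encoder is being fed.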

The texture attached to the second FBO is then mapped to a CUDA resource. Then I encode the current texture like this:

void CUDAEncoder::Encode(){

NVVE_EncodeFrameParams efparams;

efparams.Height = sEncoderParams.iOutputSize[1];

efparams.Width = sEncoderParams.iOutputSize[0];

efparams.Pitch = (sEncoderParams.nDeviceMemPitch ? sEncoderParams.nDeviceMemPitch : sEncoderParams.iOutputSize[0]);

efparams.PictureStruc = (NVVE_PicStruct)sEncoderParams.iPictureType;

efparams.SurfFmt = (NVVE_SurfaceFormat)sEncoderParams.iSurfaceFormat;

efparams.progressiveFrame = (sEncoderParams.iSurfaceFormat == 3) ? 1 : 0;

efparams.repeatFirstField = 0;

efparams.topfieldfirst = (sEncoderParams.iSurfaceFormat == 1) ? 1 : 0;

if(_curFrame > _framesTotal){

efparams.bLast=1;

}else{

efparams.bLast=0;

}

//----------- get cuda array from the texture resource -------------//

checkCudaErrorsDrv(cuGraphicsMapResources(1,&_cutexResource,NULL));

checkCudaErrorsDrv(cuGraphicsSubResourceGetMappedArray(&_cutexArray,_cutexResource,0,0));

/////////// copy data into dptrvideo frame //////////

// LUMA based on CUDA SDK sample//////////////

CUDA_MEMCPY2D pcopy;

memset((void *)&pcopy, 0, sizeof(pcopy));

pcopy.srcXInBytes = 0;

pcopy.srcY = 0;

pcopy.srcHost= NULL;

pcopy.srcDevice= 0;

pcopy.srcPitch =efparams.Width;

pcopy.srcArray= _cutexArray; // CUDA array mapped from the texture of the second FBO

/// destination //////

pcopy.dstXInBytes = 0;

pcopy.dstY = 0;

pcopy.dstHost = 0;

pcopy.dstArray = 0;

pcopy.dstDevice=dptrVideoFrame;

pcopy.dstPitch = sEncoderParams.nDeviceMemPitch;

pcopy.WidthInBytes = sEncoderParams.iInputSize[0];

pcopy.Height = sEncoderParams.iInputSize[1];

pcopy.srcMemoryType=CU_MEMORYTYPE_ARRAY;

pcopy.dstMemoryType=CU_MEMORYTYPE_DEVICE;

// CHROMA based on CUDA SDK sample/////

CUDA_MEMCPY2D pcChroma;

memset((void *)&pcChroma, 0, sizeof(pcChroma));

pcChroma.srcXInBytes = 0;

pcChroma.srcY = 0; // The original CUDA SDK sample uses sEncoderParams.iInputSize[1] << 1 here (U/V chroma offset); it works there, but with that value I get an invalid-value error from CUDA

pcChroma.srcHost = NULL;

pcChroma.srcDevice = 0;

pcChroma.srcArray = _cutexArray;

pcChroma.srcPitch = efparams.Width >> 1; // chroma is subsampled by 2 (but U and V are stored next to each other)

pcChroma.dstXInBytes = 0;

pcChroma.dstY = sEncoderParams.iInputSize[1] << 1; // chroma offset (srcY*srcPitch now points to the chroma planes)

pcChroma.dstHost = 0;

pcChroma.dstDevice = dptrVideoFrame;

pcChroma.dstArray = 0;

pcChroma.dstPitch = sEncoderParams.nDeviceMemPitch >> 1;

pcChroma.WidthInBytes = sEncoderParams.iInputSize[0] >> 1;

pcChroma.Height = sEncoderParams.iInputSize[1]; // U/V are sent together

pcChroma.srcMemoryType = CU_MEMORYTYPE_ARRAY;

pcChroma.dstMemoryType = CU_MEMORYTYPE_DEVICE;

checkCudaErrorsDrv(cuvidCtxLock(cuCtxLock, 0));

checkCudaErrorsDrv( cuMemcpy2D(&pcopy));

checkCudaErrorsDrv( cuMemcpy2D(&pcChroma));

checkCudaErrorsDrv(cuvidCtxUnlock(cuCtxLock, 0));

//=============================================

// If dptrVideoFrame is NULL, then we assume that frames come from system memory, otherwise it comes from GPU memory

// VideoEncoder.cpp, EncodeFrame() will automatically copy it to GPU Device memory, if GPU device input is specified

if (_encoder->EncodeFrame(efparams, dptrVideoFrame, cuCtxLock) == false)

{

printf("\nEncodeFrame() failed to encode frame\n");

}

checkCudaErrorsDrv(cuGraphicsUnmapResources(1, &_cutexResource, NULL));

// computeFPS();

if(_curFrame > _framesTotal){

_encoder->Stop();

exit(0);

}

_curFrame++;

}

I set the encoder params from the .cfg files included with the CUDA SDK encoder sample. Here I use the 704x480-h264.cfg setup. I tried all of them and always get a similarly ugly result.

I suspect the problem is somewhere in the parameter setup of the CUDA_MEMCPY2D structs for luma and chroma: maybe a wrong pitch, width, or height. I set the viewport to the same size as the video (704x480) and compared the params to those used in the CUDA SDK sample, but found no clue as to where the problem is. Anyone?
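To make the pitch/width/height question concrete, here is a minimal sketch of the byte arithmetic for a planar 4:2:0 frame held in one pitched buffer. It is not taken from the SDK sample: `Yuv420Layout`, `planar420Layout`, and `textureRowBytes` are hypothetical names, the chroma pitch of `pitch / 2` mirrors the `nDeviceMemPitch >> 1` used in the code above, and which chroma plane comes first (U or V) depends on the surface format, so the planes are just called "first" and "second". The last helper covers the source side: `WidthInBytes` in CUDA_MEMCPY2D is measured in bytes, so a row of the mapped array is the texel count times the texel size (for example 4 bytes per texel if the FBO texture is RGBA8, which is an assumption here).

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical description of a planar 4:2:0 frame in one pitched buffer.
struct Yuv420Layout {
    std::size_t lumaPitch;          // bytes between luma rows
    std::size_t lumaRows;           // number of luma rows
    std::size_t chromaPitch;        // bytes between chroma rows
    std::size_t chromaWidth;        // visible bytes per chroma row
    std::size_t chromaRows;         // rows per chroma plane
    std::size_t firstChromaOffset;  // byte offset of the first chroma plane
    std::size_t secondChromaOffset; // byte offset of the second chroma plane
    std::size_t totalBytes;         // size of the whole frame buffer
};

// ASSUMPTION: 8-bit samples, chroma subsampled by 2 in both directions,
// chroma rows stored with half the luma pitch.
Yuv420Layout planar420Layout(std::size_t width, std::size_t height, std::size_t pitch) {
    assert(width % 2 == 0 && height % 2 == 0);
    assert(pitch >= width && pitch % 2 == 0);
    Yuv420Layout l;
    l.lumaPitch = pitch;
    l.lumaRows = height;
    l.chromaPitch = pitch / 2;
    l.chromaWidth = width / 2;
    l.chromaRows = height / 2;
    l.firstChromaOffset = pitch * height;
    l.secondChromaOffset = l.firstChromaOffset + l.chromaPitch * l.chromaRows;
    l.totalBytes = l.secondChromaOffset + l.chromaPitch * l.chromaRows;
    return l;
}

// Bytes in one row of the source texture, given the size of one texel.
std::size_t textureRowBytes(std::size_t widthTexels, std::size_t bytesPerTexel) {
    return widthTexels * bytesPerTexel;
}
```

For 704x480 with a pitch of 704 this gives a luma plane of 337920 bytes and two chroma planes of 352x240 bytes each, 506880 bytes in total. Note that `dstY = height << 1` combined with a half pitch addresses the same byte offset as `pitch * height`, and that a 704-texel row with 4 bytes per texel is 2816 bytes wide rather than 704.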