I'm using the function solvePnP to estimate the pose of my robot by visual markers. Some times I get wrong results in two consecutives frames. In the file problem.cpp you can see one of these results.

The points sets correspond to the same marker in two consecutives frames. The variation between them is very small, and the result of the solvePnP is very different, but only in the rotation vector. Translation vector is ok.

This happens approximely one time each 30 frames. I have tested CV_ITERATIVE and CV_P3P method with the same data, and they return the same result.

This is a example of the issue:

#include <opencv2/calib3d/calib3d.hpp>

#include <opencv2/core/core.hpp>

#include <iostream>

using namespace std;

using namespace cv;

int main(){

vector<Point2f> points1, points2;

//First point's set

points1.push_back(Point2f(384.3331f, 162.23618f));

points1.push_back(Point2f(385.27521f, 135.21503f));

points1.push_back(Point2f(409.36746f, 139.30315f));

points1.push_back(Point2f(407.43854f, 165.64435f));

//Second point's set

points2.push_back(Point2f(427.64938f, 158.77661f));

points2.push_back(Point2f(428.79471f, 131.60953f));

points2.push_back(Point2f(454.04532f, 134.97353f));

points2.push_back(Point2f(452.23096f, 162.13156f));

//Real object point's set

vector<Point3f> object;

object.push_back(Point3f(-88.0f,88.0f,0));

object.push_back(Point3f(-88.0f,-88.0f,0));

object.push_back(Point3f(88.0f,-88.0f,0));

object.push_back(Point3f(88.0f,88.0f,0));

//Camera matrix

Mat cam_matrix = Mat(3,3,CV_32FC1,Scalar::all(0));

cam_matrix.at<float>(0,0) = 519.0f;

cam_matrix.at<float>(0,2) = 320.0f;

cam_matrix.at<float>(1,1) = 522.0f;

cam_matrix.at<float>(1,2) = 240.0f;

cam_matrix.at<float>(2,2) = 1.0f;

//PnP

Mat rvec1i,rvec2i,tvec1i,tvec2i;

Mat rvec1p,rvec2p,tvec1p,tvec2p;

solvePnP(Mat(object),Mat(points1),cam_matrix,Mat(),rvec1i,tvec1i,false,CV_ITERATIVE);

solvePnP(Mat(object),Mat(points2),cam_matrix,Mat(),rvec2i,tvec2i,false,CV_ITERATIVE);

solvePnP(Mat(object),Mat(points1),cam_matrix,Mat(),rvec1p,tvec1p,false,CV_P3P);

solvePnP(Mat(object),Mat(points2),cam_matrix,Mat(),rvec2p,tvec2p,false,CV_P3P);

//Print result

cout<<"Iterative: "<<endl;

cout<<" rvec1 "<<endl<<" "<<rvec1i<<endl<<endl;

cout<<" rvec2 "<<endl<<" "<<rvec2i<<endl<<endl;

cout<<" tvec1 "<<endl<<" "<<tvec1i<<endl<<endl;

cout<<" tvec1 "<<endl<<" "<<tvec2i<<endl<<endl;

cout<<"P3P: "<<endl;

cout<<" rvec1 "<<endl<<" "<<rvec1p<<endl<<endl;

cout<<" rvec2 "<<endl<<" "<<rvec2p<<endl<<endl;

cout<<" tvec1 "<<endl<<" "<<tvec1p<<endl<<endl;

cout<<" tvec1 "<<endl<<" "<<tvec2p<<endl<<endl;

return 0;

}

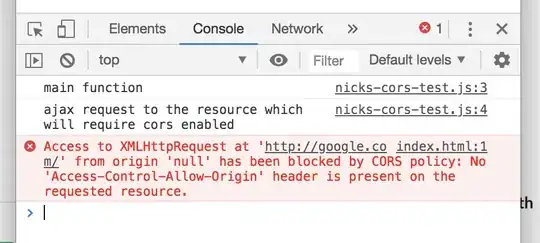

And this is the result:

Iterative:

rvec1

[-0.04097605099283788; -0.3679435501353919; 0.07086072250132323]

rvec2

[0.4135950235376482; 0.6834759799439329; 0.1049879790744613]

tvec1

[502.4723979671957; -582.21069174737; 3399.430492848247]

tvec2

[774.9623278021523; -594.8332356366083; 3338.42153723169]

P3P:

rvec1

[-0.08738607323881876; -0.363959462471951; 0.06617591006606272]

rvec2

[0.4239629869157338; 0.7210136877984544; 0.1133539043199323]

tvec1

[497.3941378807597; -574.3015171812298; 3354.522829883918]

tvec1

[760.2641421675842; -582.2718972605966; 3275.390313948845]

Thanks.