I know this is an old post, but I like to answer these things as best I can when I have the opportunity, just so the next guy has better help than I had....

In my own crusade to implement the same blending in a different environment, I came across an issue with the naive math that's offered in a lot of suggestions. Unless you're using the LCH space for other image operations, it may not be worth the cost of conversion just for this. You're right; there's lots of math involved in the conversion and if you're going to convert right back to RGB, you're going to need to handle the out-of-gamut points gracefully. As far as I know, this means doing data truncation prior to conversion back to RGB. Truncating the negative and supramaximal pixel values after conversion will wash out black and white regions of the image. The problem is that truncating prior to conversion means trying to calculate the maximum chroma for a given H and L. You can precalculate and use a LUT, but it's not that much faster.

If you want a color model which offers good (better than HSL) color/brightness separation and behaves reasonably similarly (for noncritical purposes), I suggest using YPbPr instead. It's a single affine transformation, so it'll be much faster, and the bounding calculations are simple, as the faces of the RGB gamut remain planar. The bounding calculations are just line-plane intersection math (and even these can be rolled into a LUT if you want more speed). Granted, it's not as good as a LAB or LUV blend; if the foreground is highly saturated, you may notice as much, but if speed and simplicity are important, it might be worth considering.
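To make the "affine transform plus line-plane intersection" idea concrete, here is a minimal Python sketch. It assumes Rec.601 weights and RGB values normalized to [0, 1]; the function names (`bound_chroma`, `color_blend`, etc.) are made up for illustration and don't come from any library. The bounding step shrinks the chroma toward the neutral axis at constant luma and hue until the point lands on the nearest face of the RGB cube.

```python
KR, KG, KB = 0.299, 0.587, 0.114  # Rec.601 luma weights (assumed)

def rgb_to_ypbpr(r, g, b):
    """Affine RGB -> YPbPr transform; inputs in [0, 1]."""
    y = KR * r + KG * g + KB * b
    pb = 0.5 * (b - y) / (1.0 - KB)
    pr = 0.5 * (r - y) / (1.0 - KR)
    return y, pb, pr

def chroma_offsets(pb, pr):
    """Per-channel offsets (dR, dG, dB) that the chroma adds on top of Y."""
    dr = 2.0 * (1.0 - KR) * pr
    db = 2.0 * (1.0 - KB) * pb
    dg = -(KR * dr + KB * db) / KG
    return dr, dg, db

def ypbpr_to_rgb(y, pb, pr):
    dr, dg, db = chroma_offsets(pb, pr)
    return y + dr, y + dg, y + db

def bound_chroma(y, pb, pr):
    """Scale (pb, pr) toward the neutral axis until RGB fits in [0, 1]."""
    y = min(max(y, 0.0), 1.0)
    scale = 1.0
    for d in chroma_offsets(pb, pr):
        if d > 0.0:
            scale = min(scale, (1.0 - y) / d)  # intersects the channel = 1 face
        elif d < 0.0:
            scale = min(scale, y / -d)         # intersects the channel = 0 face
    return y, scale * pb, scale * pr

def color_blend(bg, fg):
    """Luma from the background pixel, chroma from the foreground pixel."""
    y, _, _ = rgb_to_ypbpr(*bg)
    _, pb, pr = rgb_to_ypbpr(*fg)
    return ypbpr_to_rgb(*bound_chroma(y, pb, pr))
```

For example, `color_blend((0.9, 0.9, 0.9), (1.0, 0.0, 0.0))` should give a pale red that keeps the background luma of 0.9, instead of an out-of-range value that would need clipping after the fact.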

Something that might be worth researching as an example is HuSL (www.husl-colors.org). HuSL is a version of CIELUV wherein the chroma space is normalized to the aforementioned boundary of the RGB gamut. The wxMaxima worksheet available there should give you some ideas as to how to calculate the maximal chroma for truncation. While using HuSL for color blends works better than HSL, the normalization distorts the representation of chroma (S), so the uniformity of LUV (the reason we went through all those fractional exponentiations) is lost. The HuSL implementations are also based on CIELUV, not CIELAB, though the consequences of that probably don't matter much unless you're doing image editing.

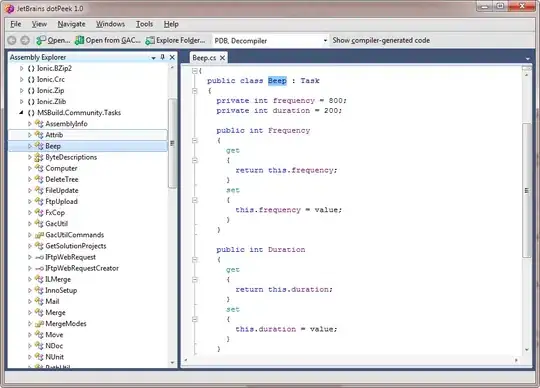

Similarly, if examples are of any help, I have an image blending function on the MathWorks File Exchange (FEX) which offers these blends, as well as the HuSL and bounded LCHab/LCHuv conversion tools (and the max-chroma LUTs for LCHab and LCHuv).

It's a MATLAB script, but the mathematical concept is the same.

If you don't want to deal with bounding chroma calculations at all, you might consider doing this:

- Extract the luma from the image. Just do a weighted average using whatever definition of luma you want. `[0.299 0.587 0.114]*[R G B]'` is Rec.601 iirc.
- Do a HS channel swap in HSL (GIMP uses HSL not HSV, not HSI). Convert back to RGB.
- Convert to your favorite luma-chroma model (e.g. YPbPr) and replace Y' with the original luma. Convert back to RGB.

This is basically the programmatic approach to a common luma preservation technique using layers in an image editor. This produces surprisingly good results and uses common conversion tools.
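The three steps can be sketched per pixel in Python using the standard `colorsys` module. A few assumptions here are mine: Rec.601 weights, RGB normalized to [0, 1], the "image" being the background layer that supplies luma while the foreground supplies H and S, and a final clip to [0, 1] for whatever still falls out of range (the steps above don't say how to handle that). The name `hsl_y_color_blend` is hypothetical.

```python
import colorsys

KR, KG, KB = 0.299, 0.587, 0.114  # Rec.601 luma weights

def luma(r, g, b):
    return KR * r + KG * g + KB * b

def rgb_to_ypbpr(r, g, b):
    y = luma(r, g, b)
    return y, 0.5 * (b - y) / (1.0 - KB), 0.5 * (r - y) / (1.0 - KR)

def ypbpr_to_rgb(y, pb, pr):
    r = y + 2.0 * (1.0 - KR) * pr
    b = y + 2.0 * (1.0 - KB) * pb
    g = (y - KR * r - KB * b) / KG
    return r, g, b

def hsl_y_color_blend(bg, fg):
    # Step 1: keep the luma of the background (the image being recolored).
    y_orig = luma(*bg)
    # Step 2: swap in H and S from the foreground, keep L from the background.
    # Note that colorsys orders the channels as (H, L, S).
    h_fg, _, s_fg = colorsys.rgb_to_hls(*fg)
    _, l_bg, _ = colorsys.rgb_to_hls(*bg)
    swapped = colorsys.hls_to_rgb(h_fg, l_bg, s_fg)
    # Step 3: replace Y' with the original luma and go back to RGB.
    _, pb, pr = rgb_to_ypbpr(*swapped)
    out = ypbpr_to_rgb(y_orig, pb, pr)
    # Assumption: clip anything that still lands outside [0, 1].
    return tuple(min(max(c, 0.0), 1.0) for c in out)
```

Since Pb and Pr are left alone in step 3, replacing Y' amounts to adding the same offset to R, G, and B, so the round trip through YPbPr could be collapsed into a single per-pixel shift if speed matters.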

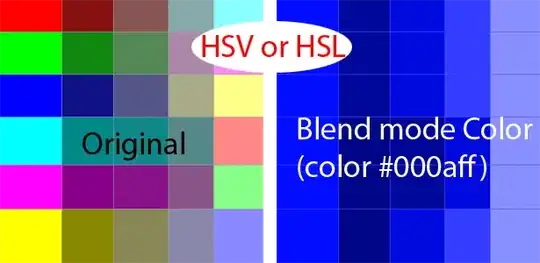

Finally, I offer my own discussion of this with example blends using various color models: HSV, HSI, HSL, CIELAB (with truncations before and after conversion), and the HSL+Y method. Also: if you're as thick as I am, having a visualization of the geometry of the projected RGB space might help lend a better understanding of what goes on when you swap chroma/lightness information between points in LCH (and the necessity of managing out-of-gamut (OOG) color points).

I'd post links to more info, but I guess they don't allow nobodies like me to do that.

Edit:

Actually, I just realized something. If speed and uniformity are important, and a slightly limited chroma range is acceptable (maybe for UI graphics or something), an addendum to my YPbPr recommendation may be in order. Consider the extent of the RGB gamut in YPbPr: a skewed cube standing on its corner about the neutral axis. The maximal rotationally-symmetric subset of this volume is a bicone. Truncating to this bicone instead of to the cube faces is much faster than calculating intersections with the cube faces. The blend is more uniform, but ultimately has a limited maximum chroma. There are HuSL methods that operate on the same principle (HuSLp), but doing this in YPbPr allows access to a greater fraction of the RGB space than HuSLp does. There's actually a post about doing this on my blog (with examples comparing it to LAB blending), though at the time, I was more concerned with uniformity. The speed of the cone intersection calculation didn't cross my mind until now.
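As a rough illustration of the bicone truncation, here is a Python sketch. It assumes Rec.601 weights and plain Euclidean chroma C = sqrt(Pb² + Pr²). Under those assumptions the B = 0 and B = 1 faces are the closest to the neutral axis, so the largest circle that fits in the gamut at a given luma has radius min(Y, 1 − Y) / (2(1 − 0.114)), i.e. min(Y, 1 − Y) / 1.772. That is my own derivation and not necessarily the exact geometry used in the blog post; the function names are hypothetical.

```python
import math

KR, KG, KB = 0.299, 0.587, 0.114  # Rec.601 luma weights (assumed)

def ypbpr_to_rgb(y, pb, pr):
    r = y + 2.0 * (1.0 - KR) * pr
    b = y + 2.0 * (1.0 - KB) * pb
    g = (y - KR * r - KB * b) / KG
    return r, g, b

def max_chroma_bicone(y):
    """Radius of the bicone at luma y (zero at y = 0 and y = 1)."""
    return max(0.0, min(y, 1.0 - y)) / (2.0 * (1.0 - KB))

def clamp_to_bicone(y, pb, pr):
    """Shrink (pb, pr) radially so the point lies inside the bicone."""
    c = math.hypot(pb, pr)
    c_max = max_chroma_bicone(y)
    if c > c_max:  # c > 0 is guaranteed here because c_max >= 0
        k = c_max / c
        pb, pr = pb * k, pr * k
    return y, pb, pr
```

Compared with bounding against the cube faces, this needs one comparison and one scale per pixel and no per-hue logic, at the cost of never reaching the saturated corners of the RGB cube.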