If you're looking for Lanczos resampling, this is the shader program I use in my open-source GPUImage library:

Vertex shader:

    attribute vec4 position;
    attribute vec2 inputTextureCoordinate;

    uniform float texelWidthOffset;
    uniform float texelHeightOffset;

    varying vec2 centerTextureCoordinate;
    varying vec2 oneStepLeftTextureCoordinate;
    varying vec2 twoStepsLeftTextureCoordinate;
    varying vec2 threeStepsLeftTextureCoordinate;
    varying vec2 fourStepsLeftTextureCoordinate;
    varying vec2 oneStepRightTextureCoordinate;
    varying vec2 twoStepsRightTextureCoordinate;
    varying vec2 threeStepsRightTextureCoordinate;
    varying vec2 fourStepsRightTextureCoordinate;

    void main()
    {
        gl_Position = position;

        vec2 firstOffset = vec2(texelWidthOffset, texelHeightOffset);
        vec2 secondOffset = vec2(2.0 * texelWidthOffset, 2.0 * texelHeightOffset);
        vec2 thirdOffset = vec2(3.0 * texelWidthOffset, 3.0 * texelHeightOffset);
        vec2 fourthOffset = vec2(4.0 * texelWidthOffset, 4.0 * texelHeightOffset);

        centerTextureCoordinate = inputTextureCoordinate;
        oneStepLeftTextureCoordinate = inputTextureCoordinate - firstOffset;
        twoStepsLeftTextureCoordinate = inputTextureCoordinate - secondOffset;
        threeStepsLeftTextureCoordinate = inputTextureCoordinate - thirdOffset;
        fourStepsLeftTextureCoordinate = inputTextureCoordinate - fourthOffset;
        oneStepRightTextureCoordinate = inputTextureCoordinate + firstOffset;
        twoStepsRightTextureCoordinate = inputTextureCoordinate + secondOffset;
        threeStepsRightTextureCoordinate = inputTextureCoordinate + thirdOffset;
        fourStepsRightTextureCoordinate = inputTextureCoordinate + fourthOffset;
    }

Fragment shader:

    precision highp float;

    uniform sampler2D inputImageTexture;

    varying vec2 centerTextureCoordinate;
    varying vec2 oneStepLeftTextureCoordinate;
    varying vec2 twoStepsLeftTextureCoordinate;
    varying vec2 threeStepsLeftTextureCoordinate;
    varying vec2 fourStepsLeftTextureCoordinate;
    varying vec2 oneStepRightTextureCoordinate;
    varying vec2 twoStepsRightTextureCoordinate;
    varying vec2 threeStepsRightTextureCoordinate;
    varying vec2 fourStepsRightTextureCoordinate;

    // sinc(x) * sinc(x/a) = (a * sin(pi * x) * sin(pi * x / a)) / (pi^2 * x^2)
    // Assuming a Lanczos constant of 2.0, and scaling values to max out at x = +/- 1.5

    void main()
    {
        lowp vec4 fragmentColor = texture2D(inputImageTexture, centerTextureCoordinate) * 0.38026;

        fragmentColor += texture2D(inputImageTexture, oneStepLeftTextureCoordinate) * 0.27667;
        fragmentColor += texture2D(inputImageTexture, oneStepRightTextureCoordinate) * 0.27667;

        fragmentColor += texture2D(inputImageTexture, twoStepsLeftTextureCoordinate) * 0.08074;
        fragmentColor += texture2D(inputImageTexture, twoStepsRightTextureCoordinate) * 0.08074;

        fragmentColor += texture2D(inputImageTexture, threeStepsLeftTextureCoordinate) * -0.02612;
        fragmentColor += texture2D(inputImageTexture, threeStepsRightTextureCoordinate) * -0.02612;

        fragmentColor += texture2D(inputImageTexture, fourStepsLeftTextureCoordinate) * -0.02143;
        fragmentColor += texture2D(inputImageTexture, fourStepsRightTextureCoordinate) * -0.02143;

        gl_FragColor = fragmentColor;
    }
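
As a quick sanity check on the constants in the fragment shader above, here is a minimal CPU-side sketch in Python (it is not part of GPUImage). It copies the nine tap weights from the shader, confirms that they sum to roughly 1.0 so that flat regions keep their brightness, and evaluates the Lanczos window from the shader comment in both its product form and its closed form. The function names and the clamp-to-edge handling are assumptions made for illustration, and the sketch does not try to re-derive the shader's exact constants.

```python
import math

# Tap weights copied from the fragment shader, ordered from
# four steps left to four steps right.
WEIGHTS = [-0.02143, -0.02612, 0.08074, 0.27667, 0.38026,
           0.27667, 0.08074, -0.02612, -0.02143]


def sinc(x):
    """Normalized sinc: sin(pi * x) / (pi * x), with sinc(0) = 1."""
    if x == 0.0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def lanczos(x, a=2.0):
    """Lanczos window sinc(x) * sinc(x / a) for |x| < a, else 0."""
    if abs(x) >= a:
        return 0.0
    return sinc(x) * sinc(x / a)


def lanczos_closed_form(x, a=2.0):
    """Closed form from the shader comment (only valid for x != 0)."""
    numerator = a * math.sin(math.pi * x) * math.sin(math.pi * x / a)
    return numerator / (math.pi ** 2 * x ** 2)


def filter_1d(samples, weights=WEIGHTS):
    """Apply the 9-tap kernel along a list, clamping reads at the edges."""
    radius = len(weights) // 2
    last = len(samples) - 1
    out = []
    for i in range(len(samples)):
        acc = 0.0
        for k, w in enumerate(weights):
            # Assumption: out-of-range reads repeat the edge sample.
            j = min(max(i + k - radius, 0), last)
            acc += samples[j] * w
        out.append(acc)
    return out
```

Calling `filter_1d` on a constant list returns values very close to the input, which is the CPU analogue of the shader leaving flat areas of the image unchanged. The sketch only filters along one axis at full resolution; it leaves out the size reduction itself.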

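This is applied in two passes, with the first performing a horizontal downsampling and the second a vertical downsampling. For the horizontal pass, the texelWidthOffset uniform is set to the fraction of the image width occupied by a single pixel and texelHeightOffset is set to 0.0. For the vertical pass, texelWidthOffset is set to 0.0 and texelHeightOffset to the fraction of the image height occupied by a single pixel.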
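
To make the two-pass setup concrete, here is a small Python sketch of the uniform values for each pass and of the nine coordinates the vertex shader derives from them. The helper names are hypothetical, and taking the pixel fraction as 1.0 / width and 1.0 / height of the image being sampled is an assumption on my part.

```python
def pass_offsets(width, height):
    """Return (texelWidthOffset, texelHeightOffset) for each of the two passes.

    Assumption: one pixel spans 1 / width (or 1 / height) of the
    normalized texture coordinate range.
    """
    horizontal = (1.0 / width, 0.0)   # first pass: step along x only
    vertical = (0.0, 1.0 / height)    # second pass: step along y only
    return [horizontal, vertical]


def tap_coordinates(center, offsets):
    """Mirror the vertex shader: nine coordinates, from four steps
    left of the center to four steps right of it."""
    u, v = center
    du, dv = offsets
    return [(u + k * du, v + k * dv) for k in range(-4, 5)]
```

For example, `tap_coordinates((0.5, 0.5), pass_offsets(640, 480)[0])` gives nine coordinates that differ only in their x component, which is why the same shader pair can serve both passes.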

I calculate the texel offsets in the vertex shader and pass the resulting coordinates to the fragment shader as varyings, because this avoids dependent texture reads on the mobile devices I'm targeting, leading to significantly better performance there. It is a little verbose, though.
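
The reason the offsets can move to the vertex shader without changing the result can be sketched in a few lines of Python. This assumes the varyings are interpolated linearly across a flat, screen-aligned quad; the function names are hypothetical. Adding a constant offset at each vertex and then interpolating gives the same coordinate as interpolating first and adding the offset per fragment, but only the second approach computes a coordinate in the fragment shader before sampling.

```python
def lerp(a, b, t):
    """Linear interpolation between a and b, as done for varyings."""
    return a + (b - a) * t


def offset_per_vertex(u_start, u_end, offset, t):
    """Offset added at each vertex, then interpolated across the quad."""
    return lerp(u_start + offset, u_end + offset, t)


def offset_per_fragment(u_start, u_end, offset, t):
    """Center coordinate interpolated, then offset added per fragment."""
    return lerp(u_start, u_end, t) + offset
```

Both functions agree up to floating-point rounding for any `t` between 0 and 1, so the verbose list of varyings is purely a performance choice.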

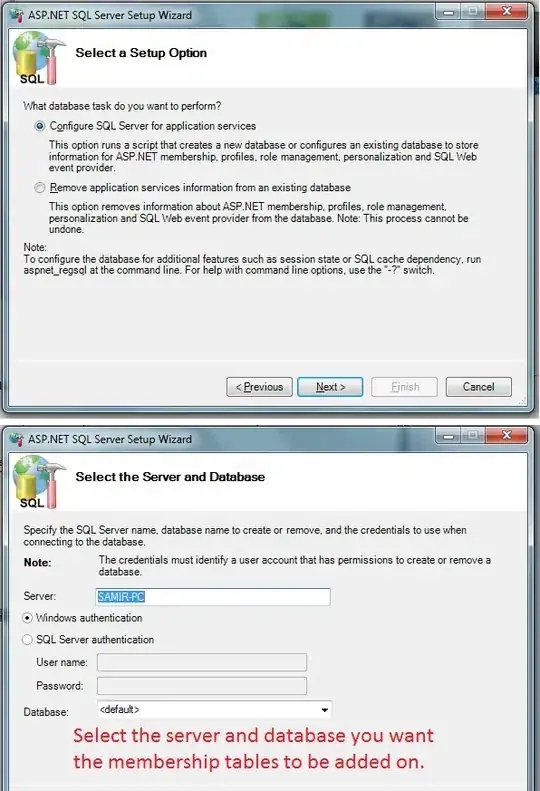

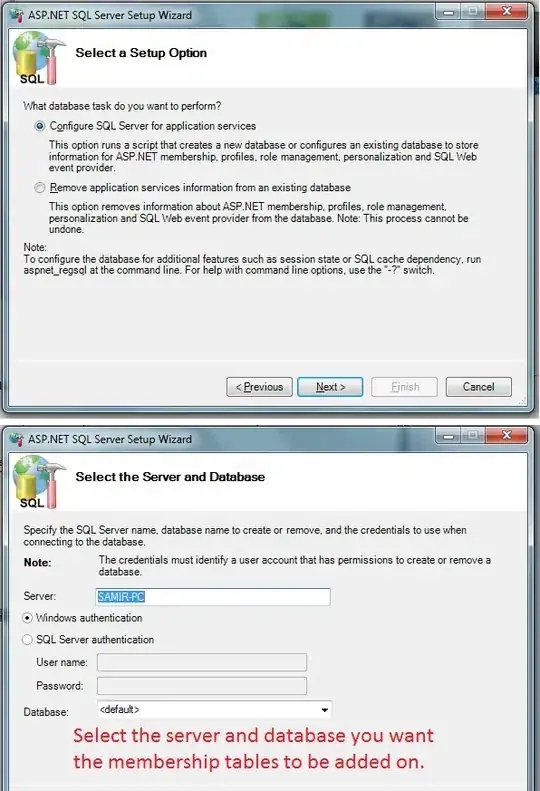

Results from this Lanczos resampling:

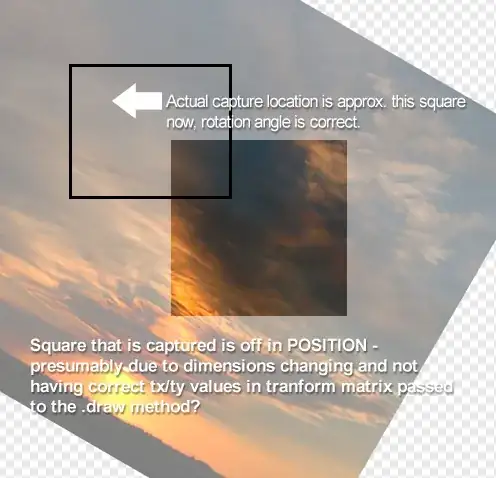

Normal bilinear downsampling:

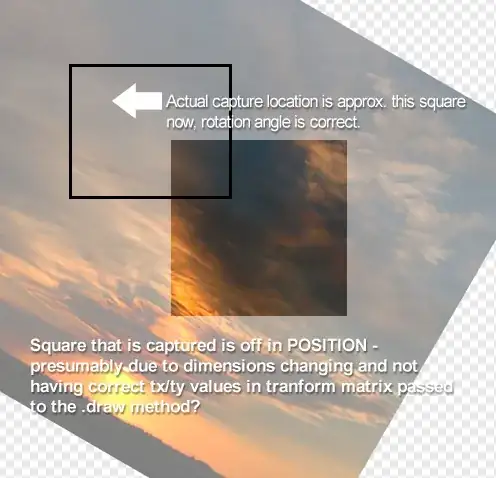

Nearest-neighbor downsampling: