Following is a code I have editted from the book "cuda by example" for a testing of the CUDA concurrently kernel execution.

static void HandleError( cudaError_t err,

const char *file,

int line ) {

if (err != cudaSuccess) {

printf( "%s in %s at line %d\n", cudaGetErrorString( err ),

file, line );

exit( EXIT_FAILURE );

}

}

#define HANDLE_ERROR( err ) (HandleError( err, __FILE__, __LINE__ ))

#define N (1024*1024*10)

#define FULL_DATA_SIZE (N*2)

__global__ void kernel( int *a, int *b, int *c ) {

int idx = threadIdx.x + blockIdx.x * blockDim.x;

if (idx < N) {

int idx1 = (idx + 1) % 256;

int idx2 = (idx + 2) % 256;

float as = (a[idx] + a[idx1] + a[idx2]) / 3.0f;

float bs = (b[idx] + b[idx1] + b[idx2]) / 3.0f;

c[idx] = (as + bs) / 2;

}

}

int main( void ) {

cudaDeviceProp prop;

int whichDevice;

HANDLE_ERROR( cudaGetDevice( &whichDevice ) );

HANDLE_ERROR( cudaGetDeviceProperties( &prop, whichDevice ) );

if (!prop.deviceOverlap) {

printf( "Device will not handle overlaps, so no speed up from streams\n" );

return 0;

}

cudaEvent_t start, stop;

float elapsedTime;

cudaStream_t stream0, stream1;

int *host_a, *host_b, *host_c;

int *dev_a0, *dev_b0, *dev_c0;

int *dev_a1, *dev_b1, *dev_c1;

// start the timers

HANDLE_ERROR( cudaEventCreate( &start ) );

HANDLE_ERROR( cudaEventCreate( &stop ) );

// initialize the streams

HANDLE_ERROR( cudaStreamCreate( &stream0 ) );

HANDLE_ERROR( cudaStreamCreate( &stream1 ) );

// allocate the memory on the GPU

HANDLE_ERROR( cudaMalloc( (void**)&dev_a0,

N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_b0,

N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_c0,

N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_a1,

N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_b1,

N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_c1,

N * sizeof(int) ) );

// allocate host locked memory, used to stream

HANDLE_ERROR( cudaHostAlloc( (void**)&host_a,

FULL_DATA_SIZE * sizeof(int),

cudaHostAllocDefault ) );

HANDLE_ERROR( cudaHostAlloc( (void**)&host_b,

FULL_DATA_SIZE * sizeof(int),

cudaHostAllocDefault ) );

HANDLE_ERROR( cudaHostAlloc( (void**)&host_c,

FULL_DATA_SIZE * sizeof(int),

cudaHostAllocDefault ) );

for (int i=0; i<FULL_DATA_SIZE; i++) {

host_a[i] = rand();

host_b[i] = rand();

}

HANDLE_ERROR( cudaEventRecord( start, 0 ) );

// now loop over full data, in bite-sized chunks

for (int i=0; i<FULL_DATA_SIZE; i+= N*2) {

// enqueue kernels in stream0 and stream1

kernel<<<N/256,256,0,stream0>>>( dev_a0, dev_b0, dev_c0 );

kernel<<<N/256,256,0,stream1>>>( dev_a1, dev_b1, dev_c1 );

}

HANDLE_ERROR( cudaStreamSynchronize( stream0 ) );

HANDLE_ERROR( cudaStreamSynchronize( stream1 ) );

HANDLE_ERROR( cudaEventRecord( stop, 0 ) );

HANDLE_ERROR( cudaEventSynchronize( stop ) );

HANDLE_ERROR( cudaEventElapsedTime( &elapsedTime,

start, stop ) );

printf( "Time taken: %3.1f ms\n", elapsedTime );

// cleanup the streams and memory

HANDLE_ERROR( cudaFreeHost( host_a ) );

HANDLE_ERROR( cudaFreeHost( host_b ) );

HANDLE_ERROR( cudaFreeHost( host_c ) );

HANDLE_ERROR( cudaFree( dev_a0 ) );

HANDLE_ERROR( cudaFree( dev_b0 ) );

HANDLE_ERROR( cudaFree( dev_c0 ) );

HANDLE_ERROR( cudaFree( dev_a1 ) );

HANDLE_ERROR( cudaFree( dev_b1 ) );

HANDLE_ERROR( cudaFree( dev_c1 ) );

HANDLE_ERROR( cudaStreamDestroy( stream0 ) );

HANDLE_ERROR( cudaStreamDestroy( stream1 ) );

return 0;

}

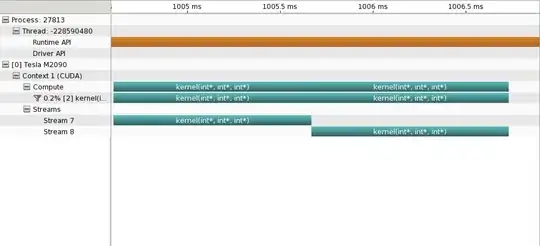

First I did a CUDA profiling using nvvp, and found the two kernels are not overlapping at all:

Some previous posts on SO stated that the profiler may disable the concurrent kernel execution, so I did a plain running. The total time in the kernel loop was reported as 2.2ms, but he profiler reported the execution time of each kernel as 1.1ms. This still implies no (or very poor) overlap between the two kernels.

Some previous posts on SO stated that the profiler may disable the concurrent kernel execution, so I did a plain running. The total time in the kernel loop was reported as 2.2ms, but he profiler reported the execution time of each kernel as 1.1ms. This still implies no (or very poor) overlap between the two kernels.

I am using CUDA4.0 on Tesla M2090. It seems that the kernel resource requirement (~10s of MB) should be small on this device (6G), and concurrent execution should be practical. Not sure where is the problem. Should I do something special to enable concurrent kernels (some APIs, some environment setup...)?