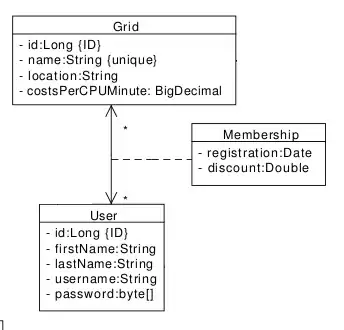

I am building a ray tracer from scratch. My problem is that when I change the camera coordinates, the sphere is rendered as an ellipse, and I don't understand why.

Here are some images to show the artifacts:

Sphere: 1 1 -1 1.0 (Center, radius)

Camera: 0 0 5 0 0 0 0 1 0 45.0 1.0 (eye position, lookat, up, fovy, aspect)

But when I change the camera coordinates, the sphere looks distorted, as shown below:

Camera: -2 -2 2 0 0 0 0 1 0 45.0 1.0
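A quick check of the second setup, worked only from the numbers above: the sphere's center lies exactly on the camera's viewing axis, so it should project to the center of the image.

$$
\text{lookat} - \text{eye} = (0,0,0) - (-2,-2,2) = (2,2,-2), \qquad
\text{center} - \text{eye} = (1,1,-1) - (-2,-2,2) = (3,3,-3) = \tfrac{3}{2}\,(2,2,-2).
$$

A sphere centered on the optical axis of a pinhole (perspective) camera is rotationally symmetric about that axis, so with a correct camera model it should appear as a circle in this view.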

I don't understand what is wrong. If someone can help, that would be great!

I set up my image plane as follows:

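```cpp
// Compute the u, v, w axes of the camera:
{
    Vector a = Normalize(eye - lookat); // camera eye - camera lookat: w points back toward the eye
    Vector b = up;                      // camera up vector

    m_w = a;
    m_u = b.cross(m_w);                 // u = up x w (camera "right")
    m_u.normalize();
    m_v = m_w.cross(m_u);               // v = w x u (true camera "up"; unit length since w and u are)
}
```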
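For reference, here is a small self-contained sketch of the same basis construction, checked on the second camera (eye `-2 -2 2`, lookat `0 0 0`, up `0 1 0`). `Vec3`, `Basis`, `cameraBasis`, and the free functions are hypothetical stand-ins, since the question's `Vector` class isn't shown. It only confirms that `u`, `v`, `w` come out orthonormal, which is what the image-plane mapping relies on.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for the question's Vector class (hypothetical).
struct Vec3 {
    double x, y, z;
};

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 a) {
    double len = std::sqrt(dot(a, a));
    assert(len > 0.0); // cannot normalize a zero vector
    return {a.x / len, a.y / len, a.z / len};
}

struct Basis {
    Vec3 u, v, w;
};

// Same construction as in the question:
// w = normalize(eye - lookat), u = normalize(up x w), v = w x u.
Basis cameraBasis(Vec3 eye, Vec3 lookat, Vec3 up) {
    Basis b;
    b.w = normalize(sub(eye, lookat));
    b.u = normalize(cross(up, b.w));
    b.v = cross(b.w, b.u); // already unit length: w and u are orthonormal
    return b;
}
```

For this camera the construction gives w ≈ (-0.577, -0.577, 0.577), u ≈ (0.707, 0, 0.707) and v ≈ (-0.408, 0.816, 0.408), which are mutually perpendicular unit vectors.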

After that, I compute a ray direction for each pixel, starting from the camera position (eye):

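```cpp
// Then compute the ray direction for each pixel:
int half_w = m_width * 0.5;
int half_h = m_height * 0.5;

double half_fy = fovy() * 0.5;                          // half the vertical field of view, in degrees
double angle = tan( ( M_PI * half_fy) / (double)180.0 ); // tan(fovy / 2)

for (size_t k = 0; k < pixels.size(); k++) {
    double j = pixels[k].x(); // column (width)
    double i = pixels[k].y(); // row (height)

    // Map the pixel to [-1, 1] and scale by the field of view (and aspect ratio for x)
    double XX = aspect() * angle * ( (j - half_w ) / (double)half_w );
    double YY = angle * ( (half_h - i ) / (double)half_h );

    // Point on the image plane at distance 1 along -w; dir is not unit length here
    Vector dir = (m_u * XX + m_v * YY) - m_w;

    directions.push_back(dir);
}
```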
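A hedged sketch of the per-pixel mapping, written against a hypothetical `Vec3` type rather than the question's `Vector`. It follows the same idea as the loop above (image plane at distance 1 along `-w`, half-height `tan(fovy/2)`, half-width scaled by `aspect`), but makes two choices the original loop does not: it samples pixel centers (`col + 0.5`, `row + 0.5`) with floating-point division, and it returns a normalized direction. `primaryRayDir` and its parameters are illustrative names, and `aspect` is assumed to mean width / height.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 {
    double x, y, z;
};

Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 scale(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 a) {
    double len = std::sqrt(dot(a, a));
    assert(len > 0.0); // cannot normalize a zero vector
    return scale(a, 1.0 / len);
}

// Hypothetical helper: unit direction of the primary ray through the center of
// pixel (col, row) in a width x height image. u, v, w are the camera basis
// vectors; fovyDeg is the vertical field of view in degrees.
Vec3 primaryRayDir(int col, int row, int width, int height,
                   double fovyDeg, double aspect, Vec3 u, Vec3 v, Vec3 w) {
    const double kPi = 3.14159265358979323846; // M_PI is not standard C++
    double tanHalf = std::tan(fovyDeg * 0.5 * kPi / 180.0);

    // Pixel center mapped to [-1, 1]; y is flipped so row 0 is the top of the image.
    double ndcX = ((col + 0.5) / width) * 2.0 - 1.0;
    double ndcY = 1.0 - ((row + 0.5) / height) * 2.0;

    double x = aspect * tanHalf * ndcX;
    double y = tanHalf * ndcY;

    // Point on the image plane at distance 1 along -w, then normalized.
    Vec3 dir = add(add(scale(u, x), scale(v, y)), scale(w, -1.0));
    return normalize(dir);
}
```

For a 1x1 image the single pixel center maps to the middle of the image plane, so the returned direction is exactly `-w`.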

Finally, I trace one ray per direction:

```cpp
for (const Vector& dir : directions) {
    Ray ray(eye, dir);
    int depth = 0;
    t_color += Trace(g_primitive, ray, depth);
}
```
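`Trace` and the sphere intersection routine are not shown. Since `dir` in the direction loop is not normalized, one thing worth checking is whether the intersection code assumes a unit-length direction (for example, by hard-coding the quadratic coefficient `a = 1`). Below is a minimal sketch of a ray-sphere test that keeps `a = dir·dir`, so it returns the correct parameter `t` for any nonzero direction. `Vec3` and `intersectSphere` are hypothetical names, not the question's API.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 {
    double x, y, z;
};

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Hypothetical helper: finds the nearest t > 0 with |origin + t*dir - center| = radius.
// Solves a*t^2 + b*t + c = 0 with a = dir.dir, so dir need not be unit length.
// Returns false when the ray misses the sphere (or the sphere is behind the origin).
bool intersectSphere(Vec3 origin, Vec3 dir, Vec3 center, double radius, double& tHit) {
    assert(dot(dir, dir) > 0.0); // a zero direction is not a ray
    Vec3 oc = sub(origin, center);
    double a = dot(dir, dir);
    double b = 2.0 * dot(oc, dir);
    double c = dot(oc, oc) - radius * radius;
    double disc = b * b - 4.0 * a * c;
    if (disc < 0.0) return false; // no real roots: miss

    double sq = std::sqrt(disc);
    double t0 = (-b - sq) / (2.0 * a); // nearer root
    double t1 = (-b + sq) / (2.0 * a); // farther root
    const double kEps = 1e-9;          // ignore hits at (or just behind) the ray origin
    if (t0 > kEps) { tHit = t0; return true; }
    if (t1 > kEps) { tHit = t1; return true; } // origin inside the sphere
    return false;
}
```

With origin `(0, 0, 5)` and a unit sphere at the origin, the direction `(0, 0, -1)` gives `t = 4` and `(0, 0, -2)` gives `t = 2`; both reach the same hit point `(0, 0, 1)`. A version that assumed `a = 1` would place the second hit at the wrong distance.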