We have had a heated debate at work over which data access route to take: DataTable or DataReader.

DISCLAIMER: I am on the DataReader side, and these results have shaken my world.

We ended up writing some benchmarks to test the speed differences. It was generally agreed that a DataReader is faster, but we wanted to see how much faster.

The results surprised us. The DataTable was consistently faster than the DataReader, sometimes approaching twice as fast.

So I turn to you, members of SO. Why, when most of the documentation, and even Microsoft, states that a DataReader is faster, are our tests showing otherwise?

And now for the code:

The test harness:

    private void button1_Click(object sender, EventArgs e)
    {
        System.Diagnostics.Stopwatch sw = new System.Diagnostics.Stopwatch();
        sw.Start();
        DateTime date = DateTime.Parse("01/01/1900");

        for (int i = 1; i < 1000; i++)
        {
            using (DataTable aDataTable = ArtifactBusinessModel.BusinessLogic.ArtifactBL.RetrieveDTModified(date))
            {
            }
        }
        sw.Stop();
        long dataTableTotalSeconds = sw.ElapsedMilliseconds;

        sw.Restart();
        for (int i = 1; i < 1000; i++)
        {
            List<ArtifactBusinessModel.Entities.ArtifactString> aList = ArtifactBusinessModel.BusinessLogic.ArtifactBL.RetrieveModified(date);
        }
        sw.Stop();
        long listTotalSeconds = sw.ElapsedMilliseconds;

        MessageBox.Show(String.Format("list:{0}, table:{1}", listTotalSeconds, dataTableTotalSeconds));
    }

This is the DAL for the DataReader:

    internal static List<ArtifactString> RetrieveByModifiedDate(DateTime modifiedLast)
    {
        List<ArtifactString> artifactList = new List<ArtifactString>();

        try
        {
            using (SqlConnection conn = SecuredResource.GetSqlConnection("Artifacts"))
            {
                using (SqlCommand command = new SqlCommand("[cache].[Artifacts_SEL_ByModifiedDate]", conn))
                {
                    command.CommandType = CommandType.StoredProcedure;
                    command.Parameters.Add(new SqlParameter("@LastModifiedDate", modifiedLast));

                    using (SqlDataReader reader = command.ExecuteReader())
                    {
                        int formNumberOrdinal = reader.GetOrdinal("FormNumber");
                        int formOwnerOrdinal = reader.GetOrdinal("FormOwner");
                        int descriptionOrdinal = reader.GetOrdinal("Description");
                        int descriptionLongOrdinal = reader.GetOrdinal("DescriptionLong");
                        int thumbnailURLOrdinal = reader.GetOrdinal("ThumbnailURL");
                        int onlineSampleURLOrdinal = reader.GetOrdinal("OnlineSampleURL");
                        int lastModifiedMetaDataOrdinal = reader.GetOrdinal("LastModifiedMetaData");
                        int lastModifiedArtifactFileOrdinal = reader.GetOrdinal("LastModifiedArtifactFile");
                        int lastModifiedThumbnailOrdinal = reader.GetOrdinal("LastModifiedThumbnail");
                        int effectiveDateOrdinal = reader.GetOrdinal("EffectiveDate");
                        int viewabilityOrdinal = reader.GetOrdinal("Viewability");
                        int formTypeOrdinal = reader.GetOrdinal("FormType");
                        int inventoryTypeOrdinal = reader.GetOrdinal("InventoryType");
                        int createDateOrdinal = reader.GetOrdinal("CreateDate");

                        while (reader.Read())
                        {
                            ArtifactString artifact = new ArtifactString();
                            ArtifactDAL.Map(formNumberOrdinal, formOwnerOrdinal, descriptionOrdinal, descriptionLongOrdinal,
                                formTypeOrdinal, inventoryTypeOrdinal, createDateOrdinal, thumbnailURLOrdinal,
                                onlineSampleURLOrdinal, lastModifiedMetaDataOrdinal, lastModifiedArtifactFileOrdinal,
                                lastModifiedThumbnailOrdinal, effectiveDateOrdinal, viewabilityOrdinal, reader, artifact);
                            artifactList.Add(artifact);
                        }
                    }
                }
            }
        }
        catch (ApplicationException)
        {
            throw;
        }
        catch (Exception e)
        {
            string errMsg = String.Format("Error in ArtifactDAL.RetrieveByModifiedDate. Date: {0}", modifiedLast);
            Logging.Log(Severity.Error, errMsg, e);
            throw new ApplicationException(errMsg, e);
        }

        return artifactList;
    }

    internal static void Map(int? formNumberOrdinal, int? formOwnerOrdinal, int? descriptionOrdinal, int? descriptionLongOrdinal, int? formTypeOrdinal, int? inventoryTypeOrdinal, int? createDateOrdinal,
        int? thumbnailURLOrdinal, int? onlineSampleURLOrdinal, int? lastModifiedMetaDataOrdinal, int? lastModifiedArtifactFileOrdinal, int? lastModifiedThumbnailOrdinal,
        int? effectiveDateOrdinal, int? viewabilityOrdinal, IDataReader dr, ArtifactString entity)
    {
        entity.FormNumber = dr[formNumberOrdinal.Value].ToString();
        entity.FormOwner = dr[formOwnerOrdinal.Value].ToString();
        entity.Description = dr[descriptionOrdinal.Value].ToString();
        entity.DescriptionLong = dr[descriptionLongOrdinal.Value].ToString();
        entity.FormType = dr[formTypeOrdinal.Value].ToString();
        entity.InventoryType = dr[inventoryTypeOrdinal.Value].ToString();
        entity.CreateDate = DateTime.Parse(dr[createDateOrdinal.Value].ToString());
        entity.ThumbnailURL = dr[thumbnailURLOrdinal.Value].ToString();
        entity.OnlineSampleURL = dr[onlineSampleURLOrdinal.Value].ToString();
        entity.LastModifiedMetaData = dr[lastModifiedMetaDataOrdinal.Value].ToString();
        entity.LastModifiedArtifactFile = dr[lastModifiedArtifactFileOrdinal.Value].ToString();
        entity.LastModifiedThumbnail = dr[lastModifiedThumbnailOrdinal.Value].ToString();
        entity.EffectiveDate = dr[effectiveDateOrdinal.Value].ToString();
        entity.Viewability = dr[viewabilityOrdinal.Value].ToString();
    }

This is the DAL for the DataTable:

    internal static DataTable RetrieveDTByModifiedDate(DateTime modifiedLast)
    {
        DataTable dt = new DataTable("Artifacts");

        try
        {
            using (SqlConnection conn = SecuredResource.GetSqlConnection("Artifacts"))
            {
                using (SqlCommand command = new SqlCommand("[cache].[Artifacts_SEL_ByModifiedDate]", conn))
                {
                    command.CommandType = CommandType.StoredProcedure;
                    command.Parameters.Add(new SqlParameter("@LastModifiedDate", modifiedLast));

                    using (SqlDataAdapter da = new SqlDataAdapter(command))
                    {
                        da.Fill(dt);
                    }
                }
            }
        }
        catch (ApplicationException)
        {
            throw;
        }
        catch (Exception e)
        {
            string errMsg = String.Format("Error in ArtifactDAL.RetrieveDTByModifiedDate. Date: {0}", modifiedLast);
            Logging.Log(Severity.Error, errMsg, e);
            throw new ApplicationException(errMsg, e);
        }

        return dt;
    }

The results:

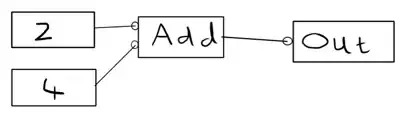

For 10 iterations within the Test Harness

For 1000 iterations within the Test Harness

These results are from the second run, to exclude the one-off cost of creating the connection.
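
The idea of discarding a first run before measuring could be sketched as a small timing helper like the one below. This is only an illustration, not the harness we actually ran: `BenchmarkSketch` and `TimeIterations` are hypothetical names, and the counter lambda is a placeholder for the real DataTable or DataReader call.

```csharp
using System;
using System.Diagnostics;

public static class BenchmarkSketch
{
    // Hypothetical helper: runs `action` once untimed as a warm-up,
    // then times `iterations` further runs and returns the total
    // elapsed milliseconds for the timed runs only.
    public static long TimeIterations(Action action, int iterations)
    {
        if (action == null) throw new ArgumentNullException(nameof(action));
        if (iterations < 1) throw new ArgumentOutOfRangeException(nameof(iterations));

        // Warm-up call: absorbs one-off costs such as creating the first connection.
        action();

        Stopwatch sw = Stopwatch.StartNew();
        for (int i = 0; i < iterations; i++)
        {
            action();
        }
        sw.Stop();

        return sw.ElapsedMilliseconds;
    }

    public static void Main()
    {
        int calls = 0;

        // Placeholder workload; the real test would call the data access method here.
        long elapsedMs = TimeIterations(() => calls++, 1000);

        // `calls` counts the warm-up call plus the 1000 timed calls.
        Console.WriteLine("calls: {0}, elapsed ms: {1}", calls, elapsedMs);
    }
}
```

Timing each approach through the same helper keeps the iteration count and warm-up handling identical for both, so any remaining difference comes from the code under test rather than from the harness.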