I stumbled across this question after running into exactly the same problem. A layer-backed NSImageView rendered its image horribly scaled, while a non-layer-backed one looked great.

I tried a number of different things, but it was a suggestion from people at my local CocoaHeads group that ultimately led to a solution.

Here's code that works for me.

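```objc
// Size the rendering to the image view's bounds.
NSRect rect = NSZeroRect;
rect.size = [_imageView bounds].size;

// Render the NSImage into a CGImage at that size. `rect` is an in/out
// parameter and may be adjusted on return. The returned CGImageRef is not
// owned by the caller, so it is not released here.
CGImageRef imageRef = [image CGImageForProposedRect:&rect
                                            context:[NSGraphicsContext currentContext]
                                              hints:@{ NSImageHintInterpolation: @(NSImageInterpolationHigh) }];

// Wrap the CGImage back in an NSImage (manual retain/release code;
// drop the -autorelease call under ARC) and hand it to the view.
image = [[[NSImage alloc] initWithCGImage:imageRef size:rect.size] autorelease];
[_imageView setImage:image];
```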

At first, I assumed the interpolation mode was the problem. However, changing that value does not appear to make a difference. Something about the NSImage → CGImage → NSImage round trip is what fixes it. I hate to offer a solution I don't fully understand, but at least it's something.

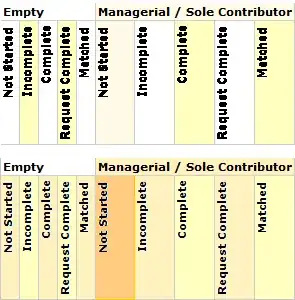

, layer OFF:

, layer OFF: