I am writing a Naive Bayes classifier that performs indoor room localization from WiFi signal strength. It works well so far, but I have some questions about missing features. Features are missing frequently because I use WiFi signals, and WiFi access points are simply not available everywhere.

Question 1: Suppose I have two classes, Apple and Banana, and I want to classify test instance T1 as below.

I fully understand how the Naive Bayes classifier works. Below is the formula I am using from Wikipedia's article on the classifier. I am using uniform prior probabilities P(C=c), so I am omitting it in my implementation.
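For reference, the Naive Bayes decision rule in standard notation is shown below. Because a uniform prior makes $P(C=c)$ the same for every class, it does not change the argmax. Taking logs (a monotonic transform) then turns the product into the sum of log-probabilities used in Question 2:

$$
\hat{c} = \arg\max_{c} \; P(C=c) \prod_{i=1}^{n} P(F_i = f_i \mid C=c)
        = \arg\max_{c} \; \sum_{i=1}^{n} \log P(F_i = f_i \mid C=c)
$$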

Now, when I compute the right-hand side of the equation by looping over the class-conditional feature probabilities, which set of features should I use? Test instance T1 uses features 1, 3, and 4, but neither class has all of these features. So when I compute the probability product, I see several choices for what to loop over:

- Loop over the union of all features from training, namely features 1, 2, 3, and 4. Since test instance T1 does not have feature 2, use an artificial tiny probability for it.

- Loop over only features of the test instance, namely 1, 3, and 4.

- Loop over the features available for each class. To compute the class-conditional probability for 'Apple', I would use features 1, 2, and 3, and for 'Banana', I would use features 2, 3, and 4.

Which of the above should I use?

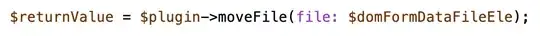

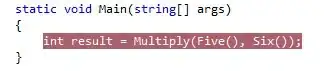
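The three choices differ only in which feature ids end up in the product. The following minimal sketch makes that concrete. It assumes the feature sets from the example (Apple trained on features 1, 2, 3; Banana on 2, 3, 4; T1 observing 1, 3, 4), and all class and method names are hypothetical:

```java
import java.util.Set;
import java.util.TreeSet;

public class FeatureSetChoices {
    // Option 1: loop over the union of all features seen in training.
    static Set<Integer> unionOfTraining(Set<Integer> classA, Set<Integer> classB) {
        Set<Integer> union = new TreeSet<>(classA);
        union.addAll(classB);
        return union;
    }

    // Option 2: loop over only the features present in the test instance.
    static Set<Integer> testInstanceOnly(Set<Integer> test) {
        return new TreeSet<>(test);
    }

    // Option 3: loop over the features each class saw in training.
    static Set<Integer> classOnly(Set<Integer> classFeatures) {
        return new TreeSet<>(classFeatures);
    }

    // Features the test instance has but a class never saw in training.
    // Options 1 and 2 still need some probability value for these terms.
    static Set<Integer> unseenByClass(Set<Integer> test, Set<Integer> classFeatures) {
        Set<Integer> unseen = new TreeSet<>(test);
        unseen.removeAll(classFeatures);
        return unseen;
    }

    public static void main(String[] args) {
        // Feature sets from the example (feature = access point id).
        Set<Integer> apple = Set.of(1, 2, 3);
        Set<Integer> banana = Set.of(2, 3, 4);
        Set<Integer> t1 = Set.of(1, 3, 4);

        System.out.println("Option 1, both classes: " + unionOfTraining(apple, banana));
        System.out.println("Option 2, both classes: " + testInstanceOnly(t1));
        System.out.println("Option 3, Apple:        " + classOnly(apple));
        System.out.println("Option 3, Banana:       " + classOnly(banana));
        System.out.println("In T1, unseen by Apple:  " + unseenByClass(t1, apple));
        System.out.println("In T1, unseen by Banana: " + unseenByClass(t1, banana));
    }
}
```

For T1, `unseenByClass` yields feature 4 for Apple and feature 1 for Banana. Options 1 and 2 must fill in those terms somehow, while option 3 compares the two classes over different feature sets.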

Question 2: Let's say I want to classify test instance T2, where T2 has a feature not found in either class. I am using log probabilities to avoid underflow, but I am not sure about the details of the loop. I am doing something like this (in Java-like pseudocode):

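```java
// Start below any finite log probability (a Double cannot be initialized from the int -100000).
double bestLogProbability = Double.NEGATIVE_INFINITY;
ClassLabel bestClassLabel = null;

for (ClassLabel classLabel : allClassLabels) {
    double logProbabilitySum = 0.0;
    for (Feature feature : allFeatures) {
        // null means this class has no training data for the feature
        Double logProbability = getLogProbability(classLabel, feature);
        if (logProbability != null) {
            logProbabilitySum += logProbability;
        }
    }
    // Compare the class's total, not the last per-feature term.
    if (bestLogProbability < logProbabilitySum) {
        bestLogProbability = logProbabilitySum;
        bestClassLabel = classLabel;
    }
}
```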
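The loop sums log-probabilities instead of multiplying probabilities because a long product of small factors underflows to 0.0 in double precision, while the sum of their logs stays finite. Since log is monotonic, the argmax over classes is unchanged. A minimal demonstration, with hypothetical numbers (400 features, each with probability 0.01):

```java
public class LogSpaceDemo {
    // Multiplies probabilities directly; many small factors underflow to 0.0.
    static double directProduct(double p, int n) {
        double product = 1.0;
        for (int i = 0; i < n; i++) {
            product *= p;
        }
        return product;
    }

    // Sums log-probabilities instead; the result (about n * log(p)) stays representable.
    static double logSum(double p, int n) {
        double sum = 0.0;
        for (int i = 0; i < n; i++) {
            sum += Math.log(p);
        }
        return sum;
    }

    public static void main(String[] args) {
        double p = 0.01; // hypothetical per-feature probability
        int n = 400;     // hypothetical number of features
        System.out.println("direct product: " + directProduct(p, n));
        System.out.println("sum of logs:    " + logSum(p, n));
    }
}
```

Here 0.01^400 = 1e-800 is far below the smallest positive double, so the direct product becomes 0.0 for every class and cannot rank them. The log sum is about -1842.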

The problem is that if none of the classes has any of the test instance's features (feature 5 in the example), then logProbabilitySum stays 0.0 for every class. As a result, bestLogProbability ends up as 0.0, which corresponds to a linear probability of 1.0. That is clearly wrong. What's a better way to handle this?
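The behavior described above can be reproduced with a small, self-contained version of the loop. Everything here is hypothetical. The class names and feature ids follow the example, but the probabilities are made up. The loop is also assumed to run over the test instance's features, whereas the pseudocode loops over `allFeatures`:

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class MissingFeatureRepro {
    // Hypothetical trained model: class label -> (feature id -> log P(feature | class)).
    // The numbers are made up; only which features each class has matters here.
    static final Map<String, Map<Integer, Double>> MODEL = new LinkedHashMap<>();
    static {
        MODEL.put("Apple", Map.of(1, Math.log(0.5), 2, Math.log(0.3), 3, Math.log(0.2)));
        MODEL.put("Banana", Map.of(2, Math.log(0.4), 3, Math.log(0.4), 4, Math.log(0.2)));
    }

    // Stand-in for getLogProbability: null when the class never saw the feature.
    static Double getLogProbability(String classLabel, int feature) {
        return MODEL.get(classLabel).get(feature);
    }

    // The question's inner loop, run over the test instance's features.
    static double logScore(String classLabel, List<Integer> testFeatures) {
        double logProbabilitySum = 0.0;
        for (int feature : testFeatures) {
            Double logProbability = getLogProbability(classLabel, feature);
            if (logProbability != null) {
                logProbabilitySum += logProbability; // unseen features are silently skipped
            }
        }
        return logProbabilitySum;
    }

    public static void main(String[] args) {
        List<Integer> t2 = List.of(5); // T2 only has feature 5, which no class has
        for (String classLabel : MODEL.keySet()) {
            double score = logScore(classLabel, t2);
            // An empty sum of logs is 0.0, and exp(0.0) = 1.0 as a linear probability.
            System.out.println(classLabel + ": log score " + score + ", linear " + Math.exp(score));
        }
    }
}
```

Both classes tie at a log score of 0.0. A class also silently benefits from skipped features in general, because each skipped term would otherwise have added a negative log-probability.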