I performed a small benchmark: I uploaded 252 rows of data from an Excel spreadsheet into two collections, testShortNames and testLongNames, as follows:

Long Names:

    {
        "_id": ObjectId("6007a81ea42c4818e5408e9c"),
        "countryNameMaster": "Andorra",
        "countryCapitalNameMaster": "Andorra la Vella",
        "areaInSquareKilometers": 468,
        "countryPopulationNumber": NumberInt("77006"),
        "continentAbbreviationCode": "EU",
        "currencyNameMaster": "Euro"
    }

Short Names:

    {
        "_id": ObjectId("6007a81fa42c4818e5408e9d"),
        "name": "Andorra",
        "capital": "Andorra la Vella",
        "area": 468,
        "pop": NumberInt("77006"),
        "continent": "EU",
        "currency": "Euro"
    }
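Because every BSON document stores its own field names, the uncompressed size difference between the two shapes can be estimated from the key lengths alone. This is a minimal sketch, assuming both collections hold identical values so that only the names differ; it ignores `_id`, which is the same in both, and takes 252 as the row count from above:

```python
# Field names from the two document shapes above (_id is identical in both, so it is omitted).
LONG_NAMES = [
    "countryNameMaster",
    "countryCapitalNameMaster",
    "areaInSquareKilometers",
    "countryPopulationNumber",
    "continentAbbreviationCode",
    "currencyNameMaster",
]
SHORT_NAMES = ["name", "capital", "area", "pop", "continent", "currency"]


def name_bytes(names):
    """Bytes BSON spends on field names: each name is a UTF-8 C string plus a NUL terminator."""
    return sum(len(n.encode("utf-8")) + 1 for n in names)


# The type byte and the encoded value are the same for both shapes, so only the names differ.
extra_per_doc = name_bytes(LONG_NAMES) - name_bytes(SHORT_NAMES)
row_count = 252  # rows uploaded from the spreadsheet
print(f"extra bytes per document: {extra_per_doc}")
print(f"extra uncompressed bytes for {row_count} documents: {extra_per_doc * row_count}")
```

This prints 94 extra bytes per document, or 23688 bytes for all 252 documents, before any compression. With pymongo installed, `len(bson.encode(doc))` can be used to check the exact encoded size of a whole document.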

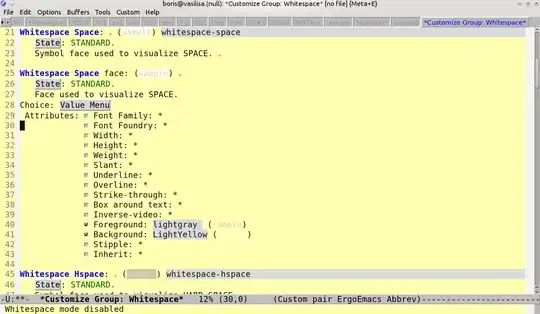

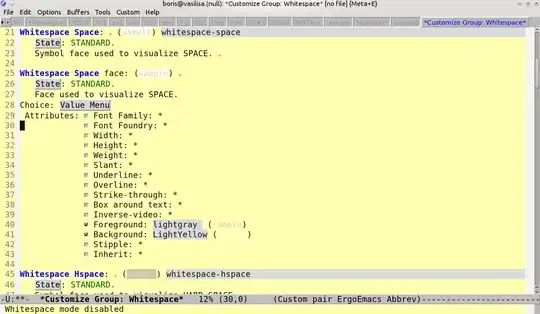

I then retrieved the collection stats for each, saved them to disk files, and ran a "diff" on the two files:

    import pprint
    pprint.pprint(db.command("collstats", dbCollectionNameLongNames))

The image below shows the two fields of interest: size and storageSize.

From what I read, storageSize is the amount of disk space used after compression, while size is essentially the uncompressed size of the data. The storageSize is identical for the two collections, so apparently the WiredTiger storage engine compresses field names quite well.
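Instead of saving the stats to files and diffing them, the two `collstats` results (pymongo returns command results as dictionaries) can be compared directly in Python. This is a minimal sketch; `compare_stats` and `compression_ratio` are hypothetical helper names, not part of pymongo:

```python
def compare_stats(long_stats, short_stats, keys=("size", "storageSize")):
    """Return {key: (long_value, short_value, long_value - short_value)} for the chosen keys."""
    return {k: (long_stats[k], short_stats[k], long_stats[k] - short_stats[k]) for k in keys}


def compression_ratio(stats):
    """Uncompressed data size divided by on-disk storage size (assumes storageSize > 0)."""
    return stats["size"] / stats["storageSize"]


# Hypothetical usage with pymongo (requires a running server):
# long_stats = db.command("collstats", "testLongNames")
# short_stats = db.command("collstats", "testShortNames")
# for key, (lv, sv, delta) in compare_stats(long_stats, short_stats).items():
#     print(f"{key}: long={lv} short={sv} diff={delta}")
# print(compression_ratio(long_stats), compression_ratio(short_stats))
```

A larger ratio for the long-name collection would be consistent with WiredTiger compressing the repeated field names away.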

I then ran a program that retrieved all documents from each collection and measured the response time.

Even though both queries finished in under a second, the long-name collection consistently took about 7 times longer to read. That is to be expected: every document carries its own field names, so longer names mean more bytes to send from the database server to the client program.

    -------LongNames-------
    Server Start DateTime=2021-01-20 08:44:38
    Server End DateTime=2021-01-20 08:44:39
    StartTimeMs= 606964546 EndTimeM= 606965328
    ElapsedTime MilliSeconds= 782

    -------ShortNames-------
    Server Start DateTime=2021-01-20 08:44:39
    Server End DateTime=2021-01-20 08:44:39
    StartTimeMs= 606965328 EndTimeM= 606965421
    ElapsedTime MilliSeconds= 93

In Python, I simply did the following (I had to actually loop through the results to force the reads; otherwise find() only returns a cursor and no documents are fetched):

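    # find() returns a lazy cursor; no documents are fetched yet
    results = dbCollectionLongNames.find(query)
    # iterating over the cursor forces every document to be read from the server
    for result in results:
        pass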
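To time the full read from inside Python, the loop can be wrapped with `time.perf_counter()`. This is a minimal sketch, not the program used above: `time_full_read` is a hypothetical helper that accepts any zero-argument callable returning an iterable (for example, a lambda around `find(query)`), and `dbCollectionShortNames` in the usage comment is an assumed name for the short-name collection object:

```python
import statistics
import time


def time_full_read(make_cursor, repeats=5):
    """Return (median elapsed milliseconds, document count) for fully iterating a fresh cursor.

    make_cursor is any zero-argument callable returning an iterable, e.g.
    lambda: dbCollectionLongNames.find(query) with pymongo.
    """
    timings = []
    count = 0
    for _ in range(repeats):
        start = time.perf_counter()
        # Counting the documents iterates the cursor, which forces every document to be fetched.
        count = sum(1 for _doc in make_cursor())
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings), count


# Hypothetical usage with the collections from this post:
# long_ms, n = time_full_read(lambda: dbCollectionLongNames.find(query))
# short_ms, _ = time_full_read(lambda: dbCollectionShortNames.find(query))
# print(f"{n} docs: long={long_ms:.1f} ms, short={short_ms:.1f} ms")
```

Taking the median of several runs, and ideally alternating which collection is read first, reduces the influence of a cold cache or one-off connection setup on whichever collection happens to be queried first.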