I'm working on a Wavefront .obj loader, and my first goal is to load all the vertices and indices from the following .obj file (it displays a cube with curved edges).

```
# Blender v2.62 (sub 0) OBJ File: 'Cube.blend'
# www.blender.org
o Cube
v 0.900000 -1.000000 -0.900000
v 0.900000 -1.000000 0.900000
v -0.900000 -1.000000 0.900000
v -0.900000 -1.000000 -0.900000
v 0.900000 1.000000 -0.900000
v 0.899999 1.000000 0.900001
v -0.900000 1.000000 0.900000
v -0.900000 1.000000 -0.900000
v 1.000000 -0.900000 -1.000000
v 1.000000 -0.900000 1.000000
v -1.000000 -0.900000 1.000000
v -1.000000 -0.900000 -1.000000
v 1.000000 0.905000 -0.999999
v 0.999999 0.905000 1.000001
v -1.000000 0.905000 1.000000
v -1.000000 0.905000 -1.000000
f 1//1 2//1 3//1
f 1//1 3//1 4//1
f 13//2 9//2 12//2
f 13//2 12//2 16//2
f 5//3 13//3 16//3
f 5//3 16//3 8//3
f 15//4 7//4 8//4
f 15//5 8//5 16//5
f 11//6 15//6 16//6
f 11//6 16//6 12//6
f 14//7 6//7 7//7
f 14//7 7//7 15//7
f 10//8 14//8 11//8
f 14//8 15//8 11//8
f 13//9 5//9 6//9
f 13//9 6//9 14//9
f 9//10 13//10 10//10
f 13//11 14//11 10//11
f 9//12 1//12 4//12
f 9//12 4//12 12//12
f 3//13 11//13 12//13
f 3//14 12//14 4//14
f 2//15 10//15 11//15
f 2//15 11//15 3//15
f 1//16 9//16 10//16
f 1//16 10//16 2//16
f 5//17 8//17 7//17
f 5//17 7//17 6//17
```

I've loaded all the vertices and indices into two vectors, `verticesVec` and `indicesVec`, and checked both: they contain all the input in the correct order (`verticesVec[0] = 0.9` and `indicesVec[0] = 1`). I load the vertices into a vertex buffer object and the indices into an index buffer object, and bind both to a vertex array object together with the vertex attribute settings.
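For reference, an OBJ `f` entry such as `1//1` holds a position index, then an optional texture-coordinate index and a normal index, separated by slashes, and OBJ counts these indices from 1. `glDrawElements`, on the other hand, treats every index as 0-based. The sketch below shows one way to pull the position indices out of a face line and shift them to 0-based; the function name `parseFaceLine` is hypothetical, and it assumes only positive (absolute) indices, so OBJ's relative negative indices are rejected rather than resolved.

```cpp
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper: extracts the position indices from one OBJ face line
// ("f v ...", "f v/vt ...", "f v//vn ...", "f v/vt/vn ...") and converts them
// from OBJ's 1-based numbering to OpenGL's 0-based numbering.
// Assumes positive (absolute) indices only.
std::vector<unsigned int> parseFaceLine(const std::string& line)
{
    std::istringstream in(line);
    std::string token;
    in >> token;  // the leading "f" keyword
    if (token != "f")
        throw std::invalid_argument("not a face line: " + line);

    std::vector<unsigned int> indices;
    while (in >> token)
    {
        // The position index is everything before the first '/'
        // (the whole token if there is no '/').
        const std::string position = token.substr(0, token.find('/'));
        const long value = std::stol(position);
        if (value < 1)
            throw std::invalid_argument("unsupported index: " + token);
        indices.push_back(static_cast<unsigned int>(value - 1));  // 1-based -> 0-based
    }
    return indices;
}
```

With this helper, `parseFaceLine("f 1//1 2//1 3//1")` returns `{0, 1, 2}`, whereas storing the raw values leaves `indicesVec[0]` equal to 1, which OpenGL reads as the second vertex.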

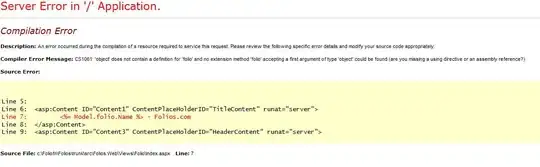

However, when I'm rendering the cube I get the following image which is not correct. I've rechecked all my code but I can't find the part where I'm going wrong. Has it something to do with the vectors and the size in bytes of the vectors?
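On the byte-size question: `glBufferData` expects its size argument in bytes, so a hard-coded `* 4` is only right when the vector's element type is exactly 4 bytes wide. A minimal sketch of a size computation that follows the element type instead, assuming `verticesVec` holds `float` and `indicesVec` holds some unsigned integer type (the helper name `byteSize` is hypothetical):

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: number of bytes occupied by a vector's elements,
// i.e. the value glBufferData expects as its size argument.
template <typename T>
std::size_t byteSize(const std::vector<T>& v)
{
    return v.size() * sizeof(T);
}
```

For this cube (16 positions of 3 floats, 28 triangles of 3 indices), `byteSize` gives 192 bytes for `verticesVec` on a platform with 4-byte floats, and 168 or 336 bytes for `indicesVec` depending on whether it stores 16-bit or 32-bit indices. With `* 4`, a vector of 16-bit indices would be described to OpenGL as twice its real size.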

The following code is from my Draw/Render method, which does all the rendering:

```cpp
glUseProgram(theProgram);

GLuint modelToCameraMatrixUnif = glGetUniformLocation(theProgram, "modelToCameraMatrix");
GLuint perspectiveMatrixUnif = glGetUniformLocation(theProgram, "cameraToClipMatrix");
glUniformMatrix4fv(perspectiveMatrixUnif, 1, GL_FALSE, glm::value_ptr(cameraToClipMatrix));

glm::mat4 identity(1.0f);
glutil::MatrixStack stack(identity); // Load identity Matrix

// Push for Camera Stuff
glutil::PushStack camera(stack);
stack.Translate(glm::vec3(camX, camY, camZ));

//Push one step further to fix object 1
glutil::PushStack push(stack);
stack.Translate(glm::vec3(0.0f, 0.0f, 3.0f));

// Draw Blender object
glBindVertexArray(this->load.vertexArrayOBject);
glUniformMatrix4fv(modelToCameraMatrixUnif, 1, GL_FALSE, glm::value_ptr(stack.Top()));
glDrawElements(GL_TRIANGLES, this->load.indicesVec.size(), GL_UNSIGNED_SHORT, 0);

// Pop camera (call destructor to de-initialize all dynamic memory)
camera.~PushStack();

// Now reset all buffers/programs
glBindVertexArray(0);
glUseProgram(0);
```
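The `type` argument of `glDrawElements` must describe how the indices are actually stored in the element buffer, and its count is a number of indices, not bytes. One way to keep the type in sync with `indicesVec` is to derive the enum from the vector's element type at compile time. A sketch, assuming `indicesVec` is a `std::vector` of a fixed-width unsigned type; the trait `IndexTypeEnum` and the `kGl...` constants are hypothetical stand-ins for the real `GL_*` enums so the snippet compiles without GL headers.

```cpp
#include <cstdint>

// Values of the standard OpenGL enums, redefined here only so the sketch
// compiles without a GL header or loader.
constexpr unsigned int kGlUnsignedByte  = 0x1401;  // GL_UNSIGNED_BYTE
constexpr unsigned int kGlUnsignedShort = 0x1403;  // GL_UNSIGNED_SHORT
constexpr unsigned int kGlUnsignedInt   = 0x1405;  // GL_UNSIGNED_INT

// Hypothetical trait mapping a C++ index type to the enum glDrawElements expects.
// The primary template is left undefined, so unsupported types fail to compile.
template <typename T> struct IndexTypeEnum;
template <> struct IndexTypeEnum<std::uint8_t>  { static constexpr unsigned int value = kGlUnsignedByte; };
template <> struct IndexTypeEnum<std::uint16_t> { static constexpr unsigned int value = kGlUnsignedShort; };
template <> struct IndexTypeEnum<std::uint32_t> { static constexpr unsigned int value = kGlUnsignedInt; };
```

In the draw call this would read roughly as `glDrawElements(GL_TRIANGLES, static_cast<GLsizei>(indicesVec.size()), IndexTypeEnum<decltype(indicesVec)::value_type>::value, 0);`, so changing the vector's element type also changes the enum passed to OpenGL.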

And here is the initialisation of the buffer objects in my .obj loader:

```cpp
void Loader::generateVertexBufferObjects()
{
    // Fills the vertex buffer object with data
    glGenBuffers(1, &vertexBufferObject);
    glBindBuffer(GL_ARRAY_BUFFER, vertexBufferObject);
    glBufferData(GL_ARRAY_BUFFER, verticesVec.size() * 4, &verticesVec[0], GL_STATIC_DRAW);
    glBindBuffer(GL_ARRAY_BUFFER, 0);

    // Fills the index buffer object with its data
    glGenBuffers(1, &indexBufferObject);
    glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, indexBufferObject);
    glBufferData(GL_ELEMENT_ARRAY_BUFFER, indicesVec.size() * 4, &indicesVec[0], GL_STATIC_DRAW);
    glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, 0);
}

void Loader::generateVertexArrayObjects()
{
    glGenVertexArrays(1, &vertexArrayOBject);
    glBindVertexArray(vertexArrayOBject);

    glBindBuffer(GL_ARRAY_BUFFER, vertexBufferObject);
    glEnableVertexAttribArray(0);
    glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 0, 0);

    glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, indexBufferObject);

    glBindVertexArray(0);
}
```
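With `glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 0, 0)`, a stride of 0 means the buffer is read as tightly packed (x, y, z) triples, so every entry in the index buffer must be smaller than `verticesVec.size() / 3`. A quick sanity check along those lines could run before the buffers are filled; the function name `indicesInRange` is hypothetical and it assumes `verticesVec` holds `float`.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical check: returns false if any index would address a vertex
// past the end of a tightly packed (x, y, z) float array.
template <typename Index>
bool indicesInRange(const std::vector<float>& verticesVec,
                    const std::vector<Index>& indicesVec)
{
    const std::size_t vertexCount = verticesVec.size() / 3;
    for (Index i : indicesVec)
    {
        if (static_cast<std::size_t>(i) >= vertexCount)
            return false;  // would read past the last vertex
    }
    return true;
}
```

For this file, `verticesVec` describes 16 vertices, so valid indices run from 0 to 15. The face `f 13//2 12//2 16//2` copied verbatim contains 16 and fails this check, while the same face shifted down by one (`12, 11, 15`) passes.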