You don't choose the loss function, you choose the model

The loss function is usually directly determined by the model when you fit your parameters using Maximum Likelihood Estimation (MLE), which is the most popular approach in Machine Learning.

You mentioned the Mean Squared Error as a loss function for linear regression. Then "we change the cost function to be a logarithmic function", referring to the Cross Entropy Loss. We didn't change the cost function. In fact, the Mean Squared Error is the Cross Entropy Loss for linear regression, when we assume yto be normally distributed by a Gaussian, whose mean is defined by Wx + b.

Explanation

With MLE, you choose the parameters in way, that the likelihood of the training data is maximized. The likelihood of the whole training dataset is a product of the likelihoods of each training sample. Because that may underflow to zero, we usually maximize the log-likelihood of the training data / minimize the negative log-likelihood. Thus, the cost function becomes a sum of the negative log-likelihood of each training sample, which is given by:

-log(p(y | x; w))

where w are the parameters of our model (including the bias). Now, for logistic regression, that is the logarithm that you referred to. But what about the claim, that this also corresponds to the MSE for linear regression?

Example

To show the MSE corresponds to the cross-entropy, we assume that y is normally distributed around a mean, which we predict using w^T x + b. We also assume that it has a fixed variance, so we don't predict the variance with our linear regression, only the mean of the Gaussian.

p(y | x; w) = N(y; w^T x + b, 1)

You can see, mean = w^T x + b and variance = 1

Now, the loss function corresponds to

-log N(y; w^T x + b, 1)

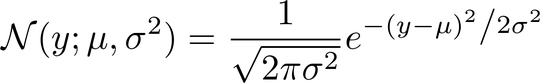

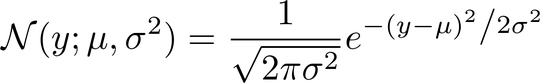

If we take a look at how the Gaussian N is defined, we see:

Now, take the negative logarithm of that. This results in:

We chose a fixed variance of 1. This makes the first term constant and reduces the second term to:

0.5 (y - mean)^2

Now, remember that we defined the mean as w^T x + b. Since the first term is constant, minimizing the negative logarithm of the Gaussian corresponds to minimizing

(y - w^T x + b)^2

which corresponds to minimizing the Mean Squared Error.