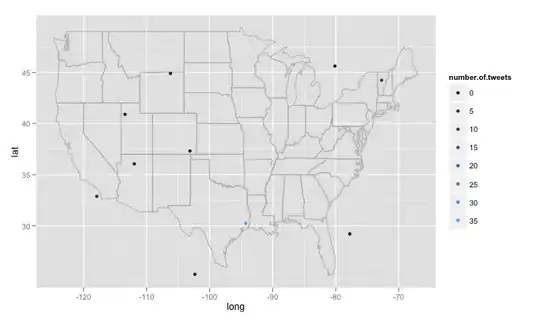

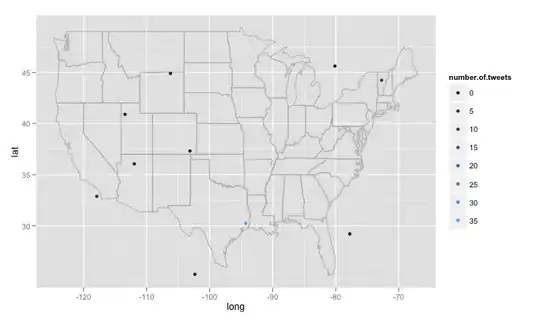

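I've been tinkering with an R function that counts the tweets matching a search term at random locations across the US and plots them on a map. You pass in the search text, the number of search sites, and the radius around each site, for example `twitterMap("#rstats", 10, "10mi")`. Here's the code: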

```r
twitterMap <- function(searchtext, locations, radius) {
  library(ggplot2)
  library(maps)
  library(twitteR)

  # Random points inside the bounding box of the contiguous US
  lat <- runif(n = locations, min = 24.446667, max = 49.384472)
  long <- runif(n = locations, min = -124.733056, max = -66.949778)

  # Data frame of random latitude, longitude and the chosen radius
  # (data.frame() keeps lat/long numeric, unlike as.data.frame(cbind(...)))
  coordinates <- data.frame(lat = lat, long = long, radius = radius,
                            stringsAsFactors = FALSE)

  # Build the "lat,long,radius" string (no spaces) that searchTwitter()
  # expects in its geocode argument
  coordinates$search.twitter.entry <- paste(coordinates$lat, coordinates$long,
                                            radius, sep = ",")

  # Search Twitter at each location and record how many tweets came back
  coordinates$number.of.tweets <- vapply(
    coordinates$search.twitter.entry,
    function(geo) length(searchTwitter(searchString = searchtext, n = 1000,
                                       geocode = geo)),
    integer(1), USE.NAMES = FALSE)

  # Outline of the US states
  all_states <- map_data("state")

  # Plot every search point on the map, coloured by its tweet count;
  # the legend title goes on the colour scale because colour is what is mapped
  p <- ggplot() +
    geom_polygon(data = all_states, aes(x = long, y = lat, group = group),
                 colour = "grey", fill = NA) +
    geom_point(data = coordinates,
               aes(x = long, y = lat, colour = number.of.tweets)) +
    labs(colour = "# of tweets")
  p
}

# Example
twitterMap("dolphin", 15, "10mi")
```

I've run into some big problems that I'm not sure how to deal with.

First, as written the code searches 15 different randomly generated locations. Their coordinates are drawn from uniform distributions spanning the easternmost to westernmost longitudes and the northernmost to southernmost latitudes of the contiguous US. That bounding box also covers places outside the United States, such as the area just east of Lake of the Woods, Minnesota, which is in Canada, as well as parts of Mexico and open ocean. I'd like a function that checks whether each randomly generated location is in the US and discards it if it isn't.

More importantly, I'd like to search thousands of locations, but Twitter doesn't like that and returns error 420, "Enhance Your Calm". So perhaps it's best to search every few hours, slowly build up a database, and delete duplicate tweets.

Finally, if I choose a remotely popular topic, R fails with an error like `Error in function (type, msg, asError = TRUE) : transfer closed with 43756 bytes remaining to read`. I'm a bit mystified about how to get around this problem.
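For the first problem, one option is rejection sampling: keep drawing points from the same bounding box and throw away any that fall outside the US until enough remain. This is a minimal sketch, not a tested part of `twitterMap()`. The names `sample_points_in_region` and `in_contiguous_us` are hypothetical, and the US check assumes `maps::map.where()` with the `"state"` database, which returns the name of the state polygon containing each point or `NA` when the point lies in no state.

```r
# Hypothetical helper: rejection sampling of random points inside a region.
# in_region(lat, long) must return a logical vector, TRUE for points to keep.
sample_points_in_region <- function(n, in_region,
                                    lat_range = c(24.446667, 49.384472),
                                    long_range = c(-124.733056, -66.949778),
                                    max_batches = 100) {
  kept_lat <- numeric(0)
  kept_long <- numeric(0)
  batches <- 0
  while (length(kept_lat) < n) {
    batches <- batches + 1
    if (batches > max_batches) {
      stop("Too many rejected points; check in_region()")
    }
    # Draw a fresh batch from the same bounding box twitterMap() uses
    lat <- runif(n, min = lat_range[1], max = lat_range[2])
    long <- runif(n, min = long_range[1], max = long_range[2])
    # which() drops FALSE and NA, so only accepted points survive
    keep <- which(in_region(lat, long))
    kept_lat <- c(kept_lat, lat[keep])
    kept_long <- c(kept_long, long[keep])
  }
  data.frame(lat = kept_lat[seq_len(n)], long = kept_long[seq_len(n)])
}

# Hypothetical predicate (assumption): TRUE when a point lies inside one of the
# lower-48 state polygons from the maps package; map.where() takes x = long, y = lat
in_contiguous_us <- function(lat, long) {
  !is.na(maps::map.where("state", x = long, y = lat))
}
```

Inside `twitterMap()`, the two `runif()` lines could then become `pts <- sample_points_in_region(locations, in_contiguous_us)`, with `pts$lat` and `pts$long` used in place of `lat` and `long`. Drawing whole batches rather than one point at a time keeps the number of loop iterations small, and `max_batches` stops the loop if the predicate never accepts anything.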
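For the rate limit, the plan of searching in smaller batches every few hours and merging the results could look roughly like the sketch below. It rests on assumptions: `search_locations` and `update_tweet_store` are hypothetical names, the `pause` between requests is a placeholder to tune rather than a documented Twitter limit, and it assumes `twitteR::twListToDF()` returns a data frame with one row per tweet and an `id` column.

```r
# Hypothetical helper: search each geocode string in turn, pausing between
# requests so the calls are spread out instead of fired back to back.
search_locations <- function(searchtext, geocodes, n = 1000, pause = 10) {
  batches <- lapply(geocodes, function(geo) {
    tweets <- twitteR::searchTwitter(searchString = searchtext, n = n,
                                     geocode = geo)
    Sys.sleep(pause)  # placeholder delay (assumption); tune to the API's limits
    if (length(tweets) == 0) return(NULL)
    df <- twitteR::twListToDF(tweets)
    df$geocode <- geo  # remember which search site produced each tweet
    df
  })
  do.call(rbind, batches)  # NULL entries (no tweets) are skipped by rbind
}

# Hypothetical helper: append a batch to an .rds file on disk, keeping only
# the first copy of each tweet id so repeated runs do not pile up duplicates.
update_tweet_store <- function(new_tweets, path) {
  if (is.null(new_tweets)) {
    return(invisible(if (file.exists(path)) readRDS(path) else NULL))
  }
  store <- if (file.exists(path)) rbind(readRDS(path), new_tweets) else new_tweets
  store <- store[!duplicated(store$id), , drop = FALSE]
  saveRDS(store, path)
  invisible(store)
}
```

Each scheduled run (for example, `Rscript` started by cron every few hours) would search a modest number of points and call `update_tweet_store(search_locations(...), "tweets.rds")`. Deduplicating on `id` also means a tweet caught by two overlapping search circles is stored once, under whichever site found it first.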
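The `transfer closed with ... bytes remaining to read` message appears to come from the underlying HTTP library (libcurl, via RCurl) when a connection closes before the whole response has arrived. One pragmatic, untested workaround is to catch the error, wait, and try again, possibly with a smaller `n` so each response is smaller. `with_retry` below is a hypothetical base-R helper built on `tryCatch()`; it is not part of twitteR.

```r
# Hypothetical helper: call f(); if it throws an error, wait and retry,
# up to `tries` attempts in total, waiting longer after each failure.
with_retry <- function(f, tries = 3, wait = 30) {
  stopifnot(tries >= 1)
  for (attempt in seq_len(tries)) {
    result <- tryCatch(f(), error = function(e) e)
    if (!inherits(result, "error")) return(result)
    message(sprintf("Attempt %d of %d failed: %s",
                    attempt, tries, conditionMessage(result)))
    if (attempt < tries) Sys.sleep(wait * attempt)
  }
  stop(result)  # every attempt failed: re-raise the last error
}
```

In `twitterMap()`, the search could then be wrapped as `length(with_retry(function() searchTwitter(searchString = searchtext, n = 1000, geocode = geo)))`. If the error keeps recurring for popular topics, lowering `n` is worth trying, though whether response size is really the trigger is a guess.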