Inside an OpenMP parallel region, all the threads execute the same code (as in MPI), and the work is only split when the threads reach a loop/section/task.

In your example, the work inside the loops (OMP DO) is distributed among the available threads. After the loop is done, an implicit barrier synchronizes all the threads and then they execute in parallel the function DGTSV. After the subroutine has returned, the loop is split again.

@HristoIliev proposed using a OMP SINGLE clause. This restricts the piece of code inside to be executed by only one thread and forces all the other threads to wait for it (unless you specify nowait).

On the other hand, nested parallelism is called to the case where you declare a parallel region inside another parallel region. This also applies when you perform calls to a OpenMP parallelized library inside a parallel region.

By default, OpenMP does not increase parallelism nested parallel regions, instead, only the thread that enter the parallel region is able to execute it. This behavior can be changed using the environment variable OMP_NESTED to true.

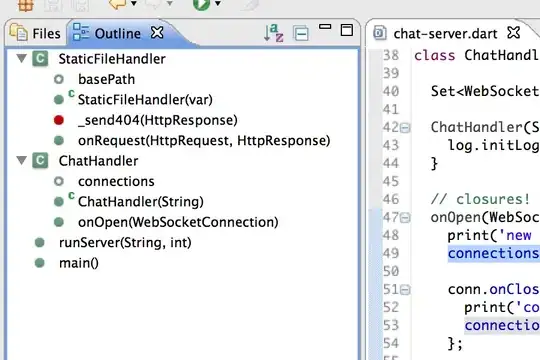

The OMP SINGLE solution is far better than splitting the parallel region in two, as the resources are reused for the next loop:

$!OMP PARALLEL

$!OMP DO

DO ...

END DO

$!OMP SINGLE

CALL DGTSV(...)

$!OMP DO

DO ...

END DO

$!OMP END PARALLEL

To illustrate the usage of OMP_NESTED I'll show you some results I had from an application which used FFTW (a Fast Fourier Transform implementation) configured to use OpenMP. The execution was performed in a 16 core two-socket Intel Xeon E5 @2.46GHz node.

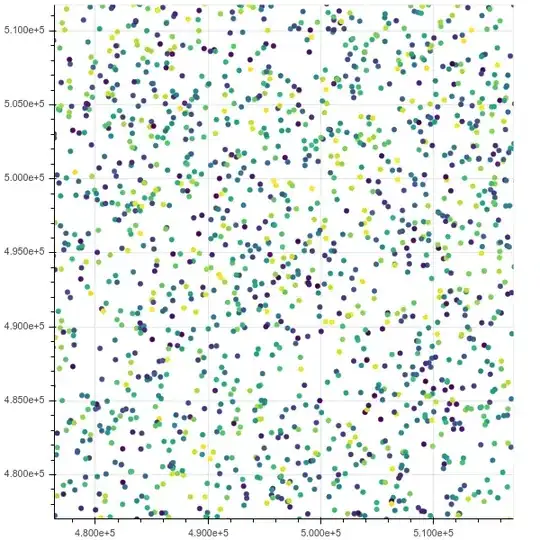

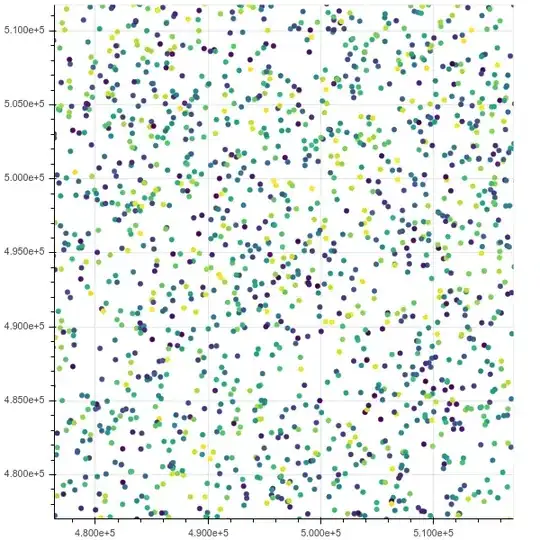

The following graphs show the amount of time spent in the whole application, where parallel regions appear when CPUs > 1, serialized regions when CPUs = 1 and synchronization regions when CPUs = 0.

The application is embarrassingly parallel, so in this particular case using nesting is not worthwhile (FFTW does not scale that good).

This is the OMP_NESTED=false execution. Observe how the amount of parallelism is limited by the amount of threads spent in the external parallel region (ftdock).

This is the OMP_NESTED=true execution. In this case, it is possible to increase parallelism further than the amount of threads spent on the external parallel region. The maximum parallelism possible in this case is 16, when either the 8 external threads create a single peer to execute the internal parallel region or they are 4 creating 3 additional threads each (8x2 = 4x4 = 16).