There will be no difference as you can test yourself by inspecting the execution plans. If id is the clustered index, you should see an ordered clustered index scan; if it is not indexed, you'll still see either a table scan or a clustered index scan, but it won't be ordered in either case.

The TOP 1 approach can be useful if you want to pull along other values from the row, which is easier than pulling the max in a subquery and then joining. If you want other values from the row, you need to dictate how to deal with ties in both cases.

Having said that, there are some scenarios where the plan can be different, so it is important to test depending on whether the column is indexed and whether or not it is monotonically increasing. I created a simple table and inserted 50000 rows:

CREATE TABLE dbo.x

(

a INT, b INT, c INT, d INT,

e DATETIME, f DATETIME, g DATETIME, h DATETIME

);

CREATE UNIQUE CLUSTERED INDEX a ON dbo.x(a);

CREATE INDEX b ON dbo.x(b)

CREATE INDEX e ON dbo.x(e);

CREATE INDEX f ON dbo.x(f);

INSERT dbo.x(a, b, c, d, e, f, g, h)

SELECT

n.rn, -- ints monotonically increasing

n.a, -- ints in random order

n.rn,

n.a,

DATEADD(DAY, n.rn/100, '20100101'), -- dates monotonically increasing

DATEADD(DAY, -n.a % 1000, '20120101'), -- dates in random order

DATEADD(DAY, n.rn/100, '20100101'),

DATEADD(DAY, -n.a % 1000, '20120101')

FROM

(

SELECT TOP (50000)

(ABS(s1.[object_id]) % 10000) + 1,

rn = ROW_NUMBER() OVER (ORDER BY s2.[object_id])

FROM sys.all_objects AS s1

CROSS JOIN sys.all_objects AS s2

) AS n(a,rn);

GO

On my system this created values in a/c from 1 to 50000, b/d between 3 and 9994, e/g from 2010-01-01 through 2011-05-16, and f/h from 2009-04-28 through 2012-01-01.

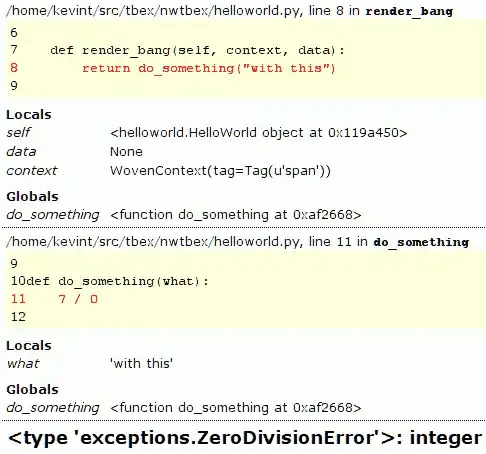

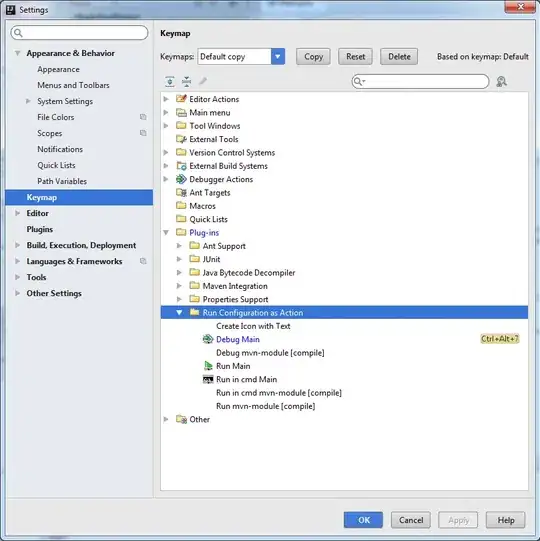

First, let's compare the indexed monotonically increasing integer columns, a and c. a has a clustered index, c does not:

SELECT MAX(a) FROM dbo.x;

SELECT TOP (1) a FROM dbo.x ORDER BY a DESC;

SELECT MAX(c) FROM dbo.x;

SELECT TOP (1) c FROM dbo.x ORDER BY c DESC;

Results:

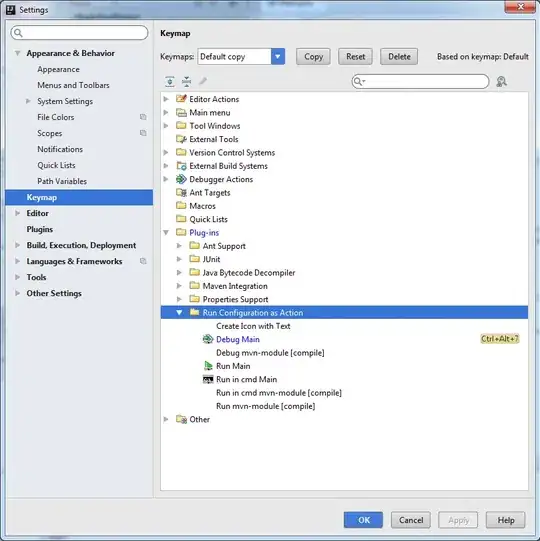

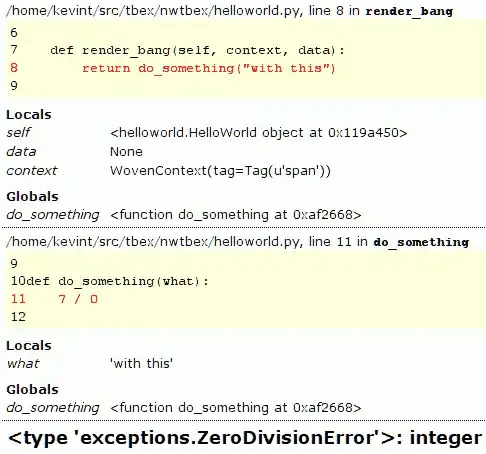

The big problem with the 4th query is that, unlike MAX, it requires a sort. Here is 3 compared to 4:

This will be a common problem across all of these query variations: a MAX against an unindexed column will be able to piggy-back on the clustered index scan and perform a stream aggregate, while TOP 1 needs to perform a sort which is going to be more expensive.

I did test and saw the exact same results across testing b+d, e+g, and f+h.

So it seems to me that, in addition to producing more standards-compliance code, there is a potential performance benefit to using MAX in favor of TOP 1 depending on the underlying table and indexes (which can change after you've put your code in production). So I would say that, without further information, MAX is preferable.

(And as I said before, TOP 1 might really be the behavior you're after, if you're pulling additional columns. You'll want to test MAX + JOIN methods as well if that's what you're after.)