THE GIST

Before importing the English Wikipedia (a 40+ GB import), I had to temporarily remove the indexes and the auto-increment attribute from three tables ('page', 'revision', and 'text') so that MySQL could handle the load. The import has now finished successfully, and I have a local mirror of the English Wikipedia (MediaWiki API) on my machine. Yay!

However, I now need to re-create the indexes and restore auto-increment on those fields in less than a decade. Luckily, (1) I took plenty of screenshots of the relevant tables in phpMyAdmin before I removed the indexes and the auto-increment setting; (2) I can explain precisely the steps I took before the import; and (3) this shouldn't be too difficult for anyone fluent in MySQL. Unfortunately, I have no expertise in MySQL whatsoever, so "baby steps" explanations would be extremely helpful.

PRECISELY WHAT I DID (PREPARING FOR THE IMPORT):

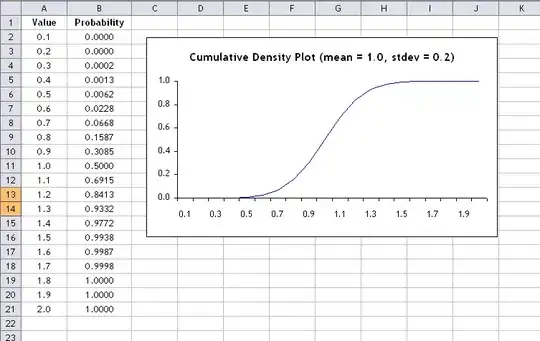

Steps 1, 2, 3: This image depicts the table 'page' before I modified the field page_id by clicking 'Change' and unchecking 'Auto-Increment' (in preparation for the import). I made precisely the same modification to the field rev_id in table 'revision' and the field old_id in table 'text', but omitted those screenshots to avoid redundancy.

Step 4: This image depicts the indexes for the table page before I dropped all of them.

Step 5: This image depicts the indexes for the table revision before I dropped all of them.

Step 6: This image depicts the indexes for the table text before I dropped all of them.

WHAT I NEED NOW (RESTORING AFTER THE IMPORT):

I just need to restore the original indexes and the auto-increment setting on page_id, rev_id, and old_id without waiting a hundred years.

Set-up details: PHP 5.3.8 (apache2handler), MySQL 5.5.16 (InnoDB), Apache 2.2.21, Ubuntu 12.04 LTS, MediaWiki 1.19.0 (private wiki)
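
A minimal sketch of the kind of statement the restore probably comes down to, written as a small Python helper that only builds SQL text and never connects to the database. The helper name `build_restore_sql` is hypothetical. The column type `INT UNSIGNED` and the two example indexes on 'page' are assumptions for illustration; the real types, index names, and column lists have to be copied from the screenshots. Whether the PRIMARY KEY also has to be re-added depends on whether it was dropped along with the other indexes. The idea, as far as I understand it, is to put every change for a table into a single ALTER TABLE so that MySQL rebuilds each large table once instead of once per index.

```python
def build_restore_sql(table, id_column, indexes,
                      id_type="INT UNSIGNED", add_primary_key=False):
    """Build one ALTER TABLE statement that restores auto-increment and indexes.

    indexes: list of (index_name, [column, ...], is_unique) tuples.
    id_type is an ASSUMPTION; use the type shown in the screenshot.
    """
    # MODIFY replaces the whole column definition, so the type and
    # NOT NULL must be restated along with AUTO_INCREMENT.
    clauses = [f"MODIFY `{id_column}` {id_type} NOT NULL AUTO_INCREMENT"]

    # An AUTO_INCREMENT column must be part of a key. Only set this flag
    # if the primary key was dropped together with the other indexes.
    if add_primary_key:
        clauses.append(f"ADD PRIMARY KEY (`{id_column}`)")

    for name, columns, is_unique in indexes:
        kind = "UNIQUE INDEX" if is_unique else "INDEX"
        cols = ", ".join(f"`{c}`" for c in columns)
        clauses.append(f"ADD {kind} `{name}` ({cols})")

    return f"ALTER TABLE `{table}`\n  " + ",\n  ".join(clauses) + ";"


if __name__ == "__main__":
    # ILLUSTRATIVE index list only; replace with the names and columns
    # from the screenshot of the 'page' indexes.
    page_indexes = [
        ("name_title", ["page_namespace", "page_title"], True),
        ("page_random", ["page_random"], False),
    ]
    print(build_restore_sql("page", "page_id", page_indexes,
                            add_primary_key=True))
```

The same call would be repeated for 'revision' (rev_id) and 'text' (old_id) with their own index lists, and the printed statements pasted into the mysql command-line client rather than phpMyAdmin, since a long-running ALTER may exceed a web request's time limit. Indexes that use column prefix lengths are not handled by this sketch.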