Consider this sequential procedure on a data structure containing collections (for simplicity, call them lists) of Doubles. For as long as I feel like, do:

- Select two different lists from the structure at random

- Calculate a statistic based on those lists

- Flip a coin based on that statistic

- Possibly modify one of the lists, based on the outcome of the coin toss
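To make the loop above concrete, here is a minimal pure sketch of one way the sequential version could look. Everything specific in it is an assumption made for illustration: the statistic (absolute difference of means), the coin's bias, the update rule (pull one list halfway toward the other's mean), and the toy `Gen` generator, which is a small linear congruential stand-in for a real RNG. It also assumes at least two non-empty lists.

```haskell
module Main where

import Data.Word (Word64)

-- Hypothetical stand-in for a real RNG: a 64-bit linear congruential generator.
newtype Gen = Gen Word64

-- Advance the generator and return the high 32 bits of the new state.
next :: Gen -> (Word64, Gen)
next (Gen s) =
  let s' = s * 6364136223846793005 + 1442695040888963407
  in (s' `div` 4294967296, Gen s')

-- Roughly uniform index in [0, n); assumes n > 0.
randomIndex :: Int -> Gen -> (Int, Gen)
randomIndex n g =
  let (w, g') = next g
  in (fromIntegral (w `mod` fromIntegral n), g')

-- Roughly uniform Double in [0, 1).
randomUnit :: Gen -> (Double, Gen)
randomUnit g = let (w, g') = next g in (fromIntegral w / 4294967296, g')

type Structure = [[Double]]

mean :: [Double] -> Double
mean xs = sum xs / fromIntegral (length xs)

-- Assumed statistic: absolute difference of the two means.
statistic :: [Double] -> [Double] -> Double
statistic a b = abs (mean a - mean b)

-- One iteration: pick two different lists, compute the statistic,
-- flip a biased coin, and possibly modify the first list.
step :: Structure -> Gen -> (Structure, Gen)
step xss g0 =
  let n       = length xss
      (i, g1) = randomIndex n g0
      (k, g2) = randomIndex (n - 1) g1
      j       = if k >= i then k + 1 else k   -- guarantees j /= i
      a       = xss !! i
      b       = xss !! j
      p       = 1 - exp (negate (statistic a b))  -- assumed heads probability
      (u, g3) = randomUnit g2
      a'      = if u < p then map (\x -> (x + mean b) / 2) a else a
  in (take i xss ++ [a'] ++ drop (i + 1) xss, g3)

-- Run a fixed number of iterations.
run :: Int -> Structure -> Gen -> Structure
run 0 xss _ = xss
run k xss g = let (xss', g') = step xss g in run (k - 1) xss' g'

main :: IO ()
main = print (run 1000 [[1, 2, 3], [10, 20], [5, 5, 5, 5]] (Gen 42))
```

Each iteration reads two lists and writes at most one, which is the property any concurrent version would have to preserve.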

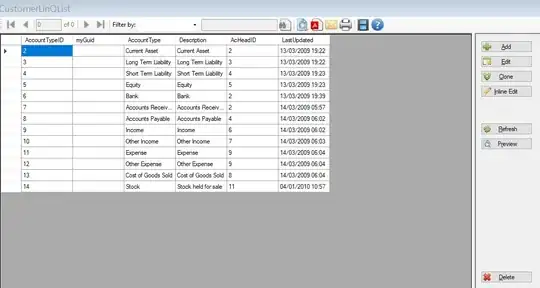

The goal is to eventually converge to something, so the time to reach a 'solution' is linear in the number of iterations. An implementation of this procedure can be seen in the SO question here, and here is an intuitive visualization:

It seems that this procedure could be performed better (that is, convergence could be reached faster) by using several workers executing concurrently on separate OS threads.

I guess a perfectly realized implementation of this should be able to reach a solution in O(n/P) time, where n is the number of iterations the sequential version needs and P is the number of available compute resources.

Reading up on Haskell concurrency has left my head spinning with terms like MVar, TVar, TChan, acid-state, etc. What seems clear is that a concurrent implementation of this procedure would look very different from the one I linked above. But the procedure itself seems to be a pretty tame algorithm on what is essentially an in-memory database, which is a problem that I'm sure somebody has come across before.

I'm guessing I will have to use some kind of mutable, concurrent data structure that supports decent random access (that is, access to random elements that no other worker is currently using) and modification. I am getting a bit lost when I try to piece together all the things that this might require with a view towards improving performance (STM seems dubious, for example).
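For reference, one possible shape of such a structure is an immutable array of `TVar`s, one per list, with each worker running the read-two, write-one step as a single STM transaction. The sketch below is only an illustration under assumptions, not a claim about what performs best: the statistic, coin bias, update rule, toy `Gen` generator, and the names `Store`, `stepSTM`, `worker`, and `runWorkers` are all hypothetical. It uses only `base`, `array`, and `stm`, and assumes at least two non-empty lists.

```haskell
module Main where

import Control.Concurrent (forkIO)
import Control.Concurrent.MVar (newEmptyMVar, putMVar, takeMVar)
import Control.Concurrent.STM
  (TVar, atomically, newTVarIO, readTVar, readTVarIO, writeTVar)
import Control.Monad (forM, forM_, when)
import Data.Array (Array, bounds, elems, listArray, (!))
import Data.Word (Word64)

-- Hypothetical stand-in for a real RNG (64-bit LCG, high 32 bits used).
newtype Gen = Gen Word64

next :: Gen -> (Word64, Gen)
next (Gen s) =
  let s' = s * 6364136223846793005 + 1442695040888963407
  in (s' `div` 4294967296, Gen s')

randomIndex :: Int -> Gen -> (Int, Gen)
randomIndex n g =
  let (w, g') = next g in (fromIntegral (w `mod` fromIntegral n), g')

randomUnit :: Gen -> (Double, Gen)
randomUnit g = let (w, g') = next g in (fromIntegral w / 4294967296, g')

mean :: [Double] -> Double
mean xs = sum xs / fromIntegral (length xs)

-- The array itself never changes; only the contents of each TVar do.
type Store = Array Int (TVar [Double])

-- One iteration as a single transaction: read two lists, maybe write one.
stepSTM :: Store -> Gen -> IO Gen
stepSTM store g0 = do
  let (lo, hi) = bounds store
      n        = hi - lo + 1
      (i, g1)  = randomIndex n g0
      (k, g2)  = randomIndex (n - 1) g1
      j        = if k >= i then k + 1 else k   -- guarantees j /= i
      (u, g3)  = randomUnit g2
      target   = store ! (lo + i)
  atomically $ do
    a <- readTVar target
    b <- readTVar (store ! (lo + j))
    let p  = 1 - exp (negate (abs (mean a - mean b)))  -- assumed coin bias
        a' = map (\x -> (x + mean b) / 2) a            -- assumed update rule
    -- Force the new list before storing it, so thunks do not pile up.
    when (u < p) (sum a' `seq` writeTVar target a')
  return g3

-- Each worker keeps its own generator and runs a fixed number of steps.
worker :: Store -> Int -> Gen -> IO ()
worker _ 0 _ = return ()
worker store k g = stepSTM store g >>= worker store (k - 1)

-- Fork p workers, wait for all of them, then read back the lists.
runWorkers :: Int -> Int -> [[Double]] -> IO [[Double]]
runWorkers p iters xss = do
  tvars <- mapM newTVarIO xss
  let store = listArray (0, length xss - 1) tvars
  dones <- forM [1 .. p] $ \w -> do
    done <- newEmptyMVar
    _ <- forkIO (worker store iters (Gen (fromIntegral w)) >> putMVar done ())
    return done
  forM_ dones takeMVar
  mapM readTVarIO (elems store)

main :: IO ()
main = runWorkers 4 1000 [[1, 2, 3], [10, 20], [5, 5, 5, 5]] >>= print
```

To actually use several cores this would need to be compiled with `-threaded` and run with `+RTS -N`. A transaction here should only have to retry when another worker commits a write to one of the two lists it read, so contention ought to fall as the number of lists grows relative to the number of workers; whether that beats the sequential version is something only measurement would show.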

What data structures, concurrency concepts, etc. are suitable for this kind of task, if the goal is a performance boost over a sequential implementation?