If you can't run code on the server or execute requests then, no, you can't do this. You will have to download the file to a server or computer that you own and upload from there.

You can see the operations you can perform on amazon S3 at http://docs.amazonwebservices.com/AmazonS3/latest/API/APIRest.html

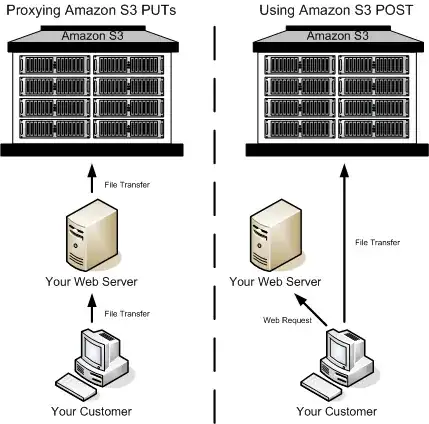

Checking the operations for both the REST and SOAP APIs you'll see there's no way to give Amazon S3 a remote URL and have it grab the object for you. All of the PUT requests require the object's data to be provided as part of the request. Meaning the server or computer that is initiating the web request needs to have the data.

I have had a similar problem in the past where I wanted to download my users' Facebook Thumbnails and upload them to S3 for use on my site. The way I did it was to download the image from Facebook into Memory on my server, then upload to Amazon S3 - the full thing took under 2 seconds. After the upload to S3 was complete, write the bucket/key to a database.

Unfortunately there's no other way to do it.