Disclaimer: Not homework!

Problem

I've been reading up on BCC error detection for my networks course and I'm confused by one particular explanation in the slides.

Given Information

We are provided the following explanation:

|     | r | m6 | m5 | m4 | m3 | m2 | m1 | m0 |
|-----|---|----|----|----|----|----|----|----|
| w0  | 0 | 1  | 0  | 0  | 1  | 0  | 0  | 0  |
| w1  | 0 | 1  | 1  | 0  | 0  | 1  | 0  | 1  |
| w2  | 0 | 1  | 1  | 0  | 1  | 1  | 1  | 1  |
| w3  | 0 | 1  | 1  | 0  | 1  | 1  | 1  | 1  |
| w4  | 0 | 1  | 1  | 0  | 1  | 1  | 1  | 1  |
| BCC | 0 | 1  | 0  | 0  | 0  | 0  | 1  | 0  |

- Let n = row length (n=8 in this case)

- Remember, not all bits in a burst need be in error, just the first and last

- BCC copes with (n+1)-bit bursts (9-bit bursts in this case)
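
To make the claim concrete, here is a rough brute-force sketch of how I read the slide. It rests on several assumptions of mine that the slide does not state: `r` is an even-parity bit for its row, the BCC is the column-wise XOR of the rows above it, the block is sent row by row from left to right, and "copes with" means "always detects". The names `bcc`, `is_clean` and `all_bursts_detected` are made up for illustration.

```python
from itertools import product

# Rows w0..w4 from the table, each as [r, m6, m5, m4, m3, m2, m1, m0].
WORDS = [
    [0, 1, 0, 0, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 1, 0, 1],
    [0, 1, 1, 0, 1, 1, 1, 1],
    [0, 1, 1, 0, 1, 1, 1, 1],
    [0, 1, 1, 0, 1, 1, 1, 1],
]


def bcc(rows):
    """Column-wise XOR (even parity) of all rows. Assumed, not stated on the slide."""
    return [sum(col) % 2 for col in zip(*rows)]


def is_clean(block):
    """True if every row and every column of the block has even parity."""
    rows_ok = all(sum(row) % 2 == 0 for row in block)
    cols_ok = all(sum(col) % 2 == 0 for col in zip(*block))
    return rows_ok and cols_ok


def all_bursts_detected(block, length):
    """Try every burst of exactly `length` bits at every position.

    The first and last bit of the burst are always flipped; the bits in
    between take every possible combination. Assumes row-by-row transmission.
    """
    n = len(block[0])
    flat = [bit for row in block for bit in row]
    for start in range(len(flat) - length + 1):
        for middle in product([0, 1], repeat=max(length - 2, 0)):
            pattern = [1] if length == 1 else [1, *middle, 1]
            corrupted = flat[:]
            for offset, flip in enumerate(pattern):
                corrupted[start + offset] ^= flip
            rows = [corrupted[i:i + n] for i in range(0, len(corrupted), n)]
            if is_clean(rows):
                return False  # this burst slipped through both parity checks
    return True


block = WORDS + [bcc(WORDS)]
print(bcc(WORDS))  # [0, 1, 0, 0, 0, 0, 1, 0], matching the BCC row in the table
for length in range(1, 11):
    print(length, all_bursts_detected(block, length))
```

Under those assumptions the check passes for every burst length from 1 to 9 and fails at 10, which seems to line up with the slide's n+1 figure. A burst of up to n bits touches each column at most once, so the column parity must trip. A 9-bit burst can hide from the column check only when exactly its first and last bits are flipped, and those two bits sit in different rows, so the row parity trips instead. At 10 bits, flipping the first two and last two bits cancels out in both directions.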

Question

Could someone please explain why this is the case and how it works?

Example Problem

(Seen in a past paper) For example, given a diagram like the one above, how many burst bits can be reliably detected in a block? Explain your answer.

Any help greatly appreciated!

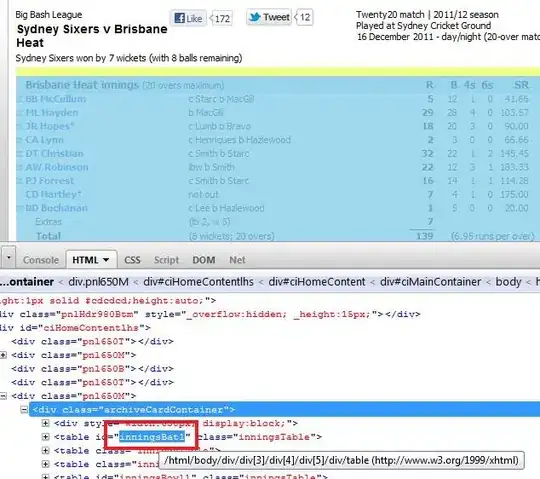

EDIT: Added reference slide