There are a number of questions and answers about how to get the pixel color of an image at a given point. However, all of those answers are really slow (100-500 ms) for large images (even ones as small as 1000 x 1300, for example).

Most of the code samples out there draw the image into a bitmap context. All of them spend their time in the actual draw call:

    CGContextDrawImage(context, CGRectMake(0.0f, 0.0f, (CGFloat)width, (CGFloat)height), cgImage);

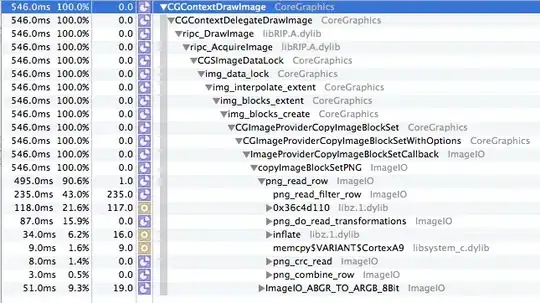

Examining this in Instruments reveals that the draw is being done by copying the data from the source image:

I have also tried a different way of getting at the data, hoping that reading the bytes directly would prove much more efficient:

    // Truncate the point to whole coordinates.
    NSInteger pointX = trunc(point.x);
    NSInteger pointY = trunc(point.y);

    // Crop a 1 x 1 image at the requested point, scaled from points to pixels.
    CGImageRef cgImage = CGImageCreateWithImageInRect(self.CGImage,
                                                      CGRectMake(pointX * self.scale,
                                                                 pointY * self.scale,
                                                                 1.0f,
                                                                 1.0f));

    // Copy the backing bytes out of the image's data provider.
    CGDataProviderRef provider = CGImageGetDataProvider(cgImage);
    CFDataRef data = CGDataProviderCopyData(provider);
    CGImageRelease(cgImage);

    // Read the components; this assumes 8-bit components in R, G, B, A order.
    UInt8* buffer = (UInt8*)CFDataGetBytePtr(data);
    CGFloat red   = (float)buffer[0] / 255.0f;
    CGFloat green = (float)buffer[1] / 255.0f;
    CGFloat blue  = (float)buffer[2] / 255.0f;
    CGFloat alpha = (float)buffer[3] / 255.0f;
    CFRelease(data);

    UIColor *pixelColor = [UIColor colorWithRed:red green:green blue:blue alpha:alpha];
    return pixelColor;
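To make the byte reads above explicit, here is a minimal sketch in plain C (which also compiles as Objective-C) of the indexing they rely on. It assumes 8-bit components in R, G, B, A order; `PixelRGBA` and `pixel_at` are hypothetical names used only for illustration, not part of any Apple API. Real image data may use a different byte order or padded rows, so the layout would need to be checked with `CGImageGetBitmapInfo` and `CGImageGetBytesPerRow` first.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper type: one pixel with components normalized to 0.0-1.0. */
typedef struct {
    float red, green, blue, alpha;
} PixelRGBA;

/*
 * Reads the pixel at (x, y) from a raw buffer, assuming 8 bits per component
 * in R, G, B, A order. bytesPerRow may be larger than width * 4 when rows
 * are padded, so it is passed in rather than derived from the width.
 * The caller is responsible for keeping (x, y) inside the image.
 */
static PixelRGBA pixel_at(const uint8_t *buffer, size_t bytesPerRow,
                          size_t x, size_t y)
{
    assert(buffer != NULL);

    /* Each row starts bytesPerRow bytes after the previous one;
       each pixel within a row occupies 4 bytes. */
    const uint8_t *p = buffer + y * bytesPerRow + x * 4;

    PixelRGBA pixel = {
        p[0] / 255.0f,  /* red   */
        p[1] / 255.0f,  /* green */
        p[2] / 255.0f,  /* blue  */
        p[3] / 255.0f   /* alpha */
    };
    return pixel;
}
```

With a 1 x 1 buffer, as in my snippet, `x` and `y` are both 0 and this reduces to reading `buffer[0]` through `buffer[3]`.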

This method spends its time on the data copy:

    CFDataRef data = CGDataProviderCopyData(provider);

It would appear that this, too, is reading the data from disk rather than from the CGImage instance I am creating.

Now, this method does perform better in some informal testing, but it is still not as fast as I want it to be. Does anyone know of an even faster way of getting at the underlying pixel data?