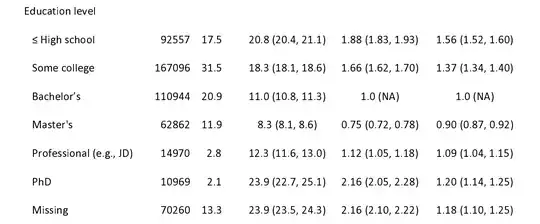

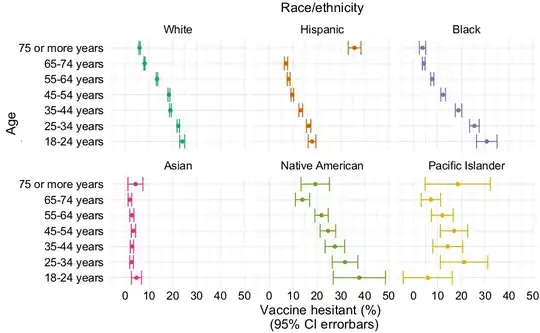

The graph accurately represents the survey result, but the survey cannot be taken as an accurate representation of the true position.

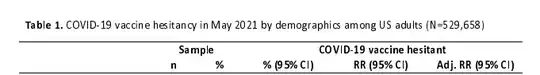

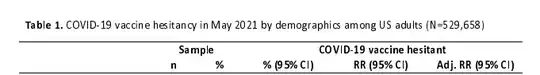

If you follow Unherd's link to the paper, you'll see the following data on page 17:

The fourth column is "COVID-19 vaccine hesitant % (95% CI)".

The data itself came from an online survey via Facebook:

This analysis used the COVID Trends and Impact Survey (CTIS)9, created by the Delphi Group at Carnegie Mellon University (CMU) and conducted in collaboration with Facebook Data for Good.

It appears they've used a subset of the data for their paper (as a side note, the January 6th start date does coincide with an update made to the survey):

The analysis sample includes 5,121,436 survey responses from participants who completed the survey at least once January 6 to May 31, 2021

The survey itself does have some published limitations. Looking through those, there are two caveats I find noteworthy. The first is the second sample limitation:

Non-response bias. Only a small fraction of invited users choose to take the survey when they are invited. If their decision on whether to take the survey is random, this is not a problem. However, their decision to take the survey may be correlated with other factors—such as their level of concern about COVID-19 or their trust of academic researchers. If that is the case, the sample will disproportionately contain people with certain attitudes and beliefs. This implies the potential for the sample to be unrepresentative of the US population, as all self-selected surveys inevitably are.

The second caveat is from the section about response behavior:

While less than 1% of respondents opt to self-describe their own gender, a large percentage of respondents who do choose that option provide a description that is actually a protest against the question or the survey; for example, making trans-phobic comments or reporting their gender identification as “Attack Helicopter”. Additionally, these respondents disproportionately select specific demographic groups, such as having a PhD, being over age 75, and being Hispanic, all at rates far exceeding their overall presence in the US population, suggesting that people who want to disrupt the survey also pick on specific groups to troll.

The first caveat means we don't know how reliably the survey reflects the views of the population, while the second shows that some of the responses are demonstrably not genuine. PhDs make up only around 2% of the sample, so even if the disruptive respondents are just a subset of the "less than 1% of respondents" who self-describe their gender, their disproportionate tendency to claim a PhD means they could account for a sizeable share of the PhD group. We therefore cannot be confident that the PhD figures represent the population, and they need to be taken with a major grain of salt. It could still be useful to look at the data for PhDs over time, but any comparison with other groups cannot be trusted until further studies reach the same conclusions.
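To make the arithmetic concrete, here is a rough sketch of how a small number of disruptive responses can weigh heavily on a small group. Only the "around 2%" PhD share comes from the discussion above; the disruptive share, the rate at which disruptive respondents claim a PhD, and the hesitancy rates are hypothetical values chosen for illustration, not figures from the survey or the paper.

```python
def troll_share_of_group(group_share, troll_share, troll_pick_rate):
    """Fraction of a group's responses that come from disruptive respondents.

    group_share:     fraction of all responses reporting membership of the group
    troll_share:     fraction of all responses that are disruptive (hypothetical)
    troll_pick_rate: fraction of disruptive responses claiming the group (hypothetical)
    """
    troll_in_group = troll_share * troll_pick_rate
    if troll_in_group > group_share:
        raise ValueError("disruptive responses cannot exceed the group's total")
    return troll_in_group / group_share


def observed_rate(genuine_rate, troll_rate, contamination):
    """Rate the survey would report when genuine and disruptive answers are mixed."""
    return (1 - contamination) * genuine_rate + contamination * troll_rate


PHD_SHARE = 0.02  # "around 2%" of the sample, as stated in the text

# Hypothetical scenarios: the true values are not known.
for troll_share in (0.001, 0.005, 0.01):
    for pick_rate in (0.1, 0.5):
        contamination = troll_share_of_group(PHD_SHARE, troll_share, pick_rate)
        # Assume, purely for illustration, that 10% of genuine PhDs are hesitant
        # and that every disruptive respondent reports being hesitant.
        reported = observed_rate(0.10, 1.0, contamination)
        print(f"disruptive={troll_share:.1%} claim_phd={pick_rate:.0%} "
              f"-> {contamination:.1%} of PhD responses, "
              f"reported hesitancy {reported:.1%}")
```

Under these assumptions, if 0.5% of all responses were disruptive and half of them claimed a PhD, one in eight "PhD" responses would not be genuine. The same 0.5% spread across a group making up a large part of the sample would barely register.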

A truly trustworthy analysis would need to sample the population at random, as self-selecting surveys can be very unrepresentative.
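The difference between the two sampling approaches can be illustrated with a toy simulation. Everything here is an assumption made for the sake of the example: the population size, the true hesitancy rate, and the response rates are invented, and the direction of the bias in the real survey is not known. The sketch only shows that when willingness to respond is correlated with the attitude being measured, a large self-selected sample can still miss the true rate, while a random sample of similar size lands close to it.

```python
import random


def simulate(pop_size=200_000, true_rate=0.20,
             respond_if_hesitant=0.01, respond_if_not=0.03,
             sample_size=5_000, seed=0):
    """Compare a random sample with a self-selected one (all values hypothetical)."""
    rng = random.Random(seed)

    # True means the person is vaccine hesitant.
    population = [rng.random() < true_rate for _ in range(pop_size)]

    # Random sample: every person is equally likely to be included.
    random_sample = rng.sample(population, sample_size)
    random_estimate = sum(random_sample) / len(random_sample)

    # Self-selected sample: the chance of responding depends on the attitude.
    self_selected = [
        hesitant for hesitant in population
        if rng.random() < (respond_if_hesitant if hesitant else respond_if_not)
    ]
    self_selected_estimate = sum(self_selected) / len(self_selected)

    return random_estimate, self_selected_estimate


random_estimate, self_selected_estimate = simulate()
print(f"true rate:              {0.20:.1%}")
print(f"random sample estimate: {random_estimate:.1%}")
print(f"self-selected estimate: {self_selected_estimate:.1%}")
```

In this setup hesitant people are assumed to be a third as likely to respond, so the self-selected estimate comes out well below the true rate. Reversing the two response rates would push it too high instead, which is the point: without knowing who chose to respond, the size of the sample says little about its accuracy.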