From this article. I don't like Breitbart as a source, but I try hard not to dismiss their claims entirely without reason. I'd like help interpreting their claims.

> A German professor has confirmed what skeptics from Britain to the US have long suspected: that NASA’s Goddard Institute of Space Studies has largely invented “global warming” by tampering with the raw temperature data records.
>
> Professor Dr. Friedrich Karl Ewert is a retired geologist and data computation expert. He has painstakingly examined and tabulated all NASA GISS’s temperature data series, taken from 1153 stations and going back to 1881. His conclusion: that if you look at the raw data, as opposed to NASA’s revisions, you’ll find that since 1940 the planet has been cooling, not warming.

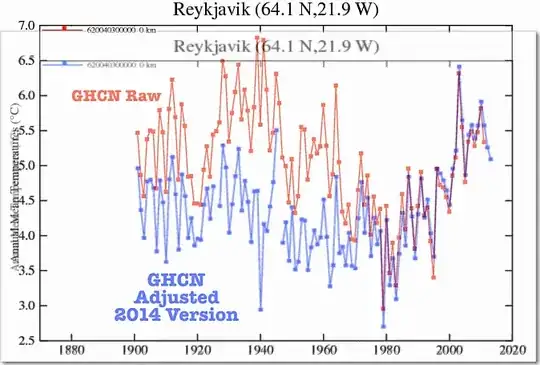

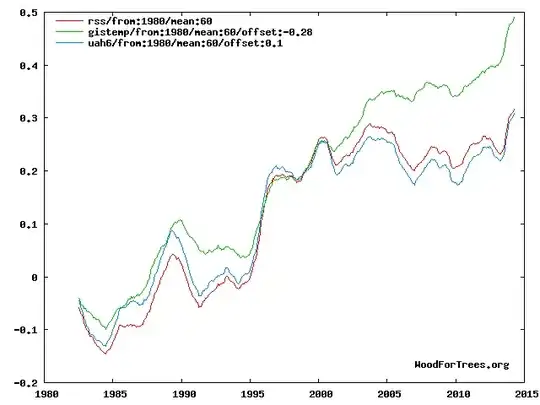

They cite, in part, this pair of graphs:

and

to show that NASA has been tampering with the historical record with bogus adjustments to create the illusion of warming rather than cooling.

After some digging, I'm somewhat disinclined to believe these claims. I created a version of the first chart that goes further back:

so you can see there's a warming trend in all three data series over the longer term. However, I would still like to know:

- (First graph) Why does the value of the green series deviate from the other two after 2000?

- (Second graph) Why were these adjustments made? Here is the source of the raw data for this chart.

I have good knowledge of math and statistics but little in-depth understanding of climate science, so any leads are helpful (even just pointers to good scholarly resources).