This will be long, so I'll include a summary at the end.

Harvard's Cooperative Congressional Election Study (CCES, http://projects.iq.harvard.edu/cces/home) brings together multiple teams of researchers who survey over 50,000 people before and after American elections. The guide to the 2010 survey* describes it as follows:

> The 2010 CCES involved 30 teams, yielding a Common Content sample of 55,400 cases. The subjects for this study were recruited during the fall of 2010. Each research team purchased a 1,000 person national sample survey, conducted in October and November of 2010 by YouGov/Polimetrix of Palo Alto, CA. Each survey has approximately 120 questions. For each survey of 1,000 persons, half of the questionnaire was developed and controlled entirely by each the individual research team, and half of the questionnaire is devoted to Common Content.

Source: CCES 2010 Guide.pdf, page 4, downloadable here: https://dataverse.harvard.edu/dataset.xhtml?persistentId=hdl:1902.1/17705

In 2014, Richman, Chattha, and Earnest published a paper in Electoral Studies that used CCES data to estimate how often non-citizens vote:

> Of 339 non-citizens identified in the 2008 survey, Catalist matched 140 to a commercial (e.g. credit card) and/or voter database. The vote validation procedures are described in detail by Ansolabehere and Hersh (2012). The verification effort means that for a bit more than 40 percent of the 2008 sample, we are able to verify whether non-citizens voted when they said they did, or didn't vote when they said they didn't.

Source: "Do non-citizens vote in U.S. elections?", section 2, "Data"

In that paper, they estimate:

> How many non-citizen votes were likely cast in 2008? Taking the most conservative estimate – those who both said they voted and cast a verified vote – yields a confidence interval based on sampling error between 0.2% and 2.8% for the portion of non-citizens participating in elections. Taking the least conservative measure – at least one indicator showed that the respondent voted – yields an estimate that between 7.9% and 14.7% percent of non-citizens voted in 2008. Since the adult non-citizen population of the United States was roughly 19.4 million (CPS, 2011), the number of non-citizen voters (including both uncertainty based on normally distributed sampling error, and the various combinations of verified and reported voting) could range from just over 38,000 at the very minimum to nearly 2.8 million at the maximum.

Source: "Do non-citizens vote in U.S. elections?", section 3.3, "Voting"
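As a rough check on the arithmetic in that quote, the sketch below applies the quoted interval endpoints to the 19.4 million adult non-citizens the authors cite. Only the percentages and the population figure come from the paper; the function name `implied_voters` is illustrative. The minimum works out to about 38,800 ("just over 38,000"); the maximum comes out at about 2.85 million, slightly above the paper's "nearly 2.8 million", presumably because of rounding in the quoted figures.

```python
# Scale the survey-based proportions quoted from Richman et al. (2014)
# to the adult non-citizen population they cite (~19.4 million, CPS 2011).
ADULT_NONCITIZENS = 19_400_000

# Sampling-error confidence intervals quoted in section 3.3.
intervals = {
    "most conservative (reported and verified vote)": (0.002, 0.028),
    "least conservative (any indicator of voting)": (0.079, 0.147),
}


def implied_voters(share: float, population: int = ADULT_NONCITIZENS) -> int:
    """Number of people implied by applying a survey share to a population."""
    return round(share * population)


for label, (low, high) in intervals.items():
    print(f"{label}: {implied_voters(low):,} to {implied_voters(high):,}")
```

Every percentage point in the survey estimate therefore corresponds to about 194,000 people, which is why small survey errors matter so much once they are extrapolated.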

Two of the CCES principal investigators and a representative of YouGov responded to the paper in late 2014:

> Suppose a survey question is asked of 20,000 respondents, and that, of these persons, 19,500 have a given characteristic (e.g., are citizens) and 500 do not. Suppose that 99.9 percent of the time the survey question identifies correctly whether people have a given characteristic, and 0.1 percent of the time respondents who have a given characteristic incorrectly state that they do not have that characteristic. (That is, they check the wrong box by mistake.) That means, 99.9 percent of the time the question correctly classifies an individual as having a characteristic—such as being a citizen of the United States—and 0.1 percent of the time it classifies someone as not having a characteristic, when in fact they do. This rate of misclassification or measurement error is extremely low and would be tolerated by any survey researcher. It implies, however, that one expects 19 people out of 20,000 to be incorrectly classified as not having a given characteristic, when in fact they do.

Source: http://projects.iq.harvard.edu/cces/news/perils-cherry-picking-low-frequency-events-large-sample-surveys
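The arithmetic in this example can be sketched in a few lines of Python. The counts and the 0.1 percent error rate come from the quote and, as in the example, errors are assumed to run only one way (citizens checking the "non-citizen" box). The 70 percent turnout for misclassified citizens is a hypothetical figure added purely for illustration, not a CCES number; it shows how such errors alone could make some reported non-citizens appear to vote even if no actual non-citizen did.

```python
# Worked example from the CCES response: 20,000 respondents,
# 19,500 of them citizens and 500 non-citizens.
citizens = 19_500
noncitizens = 500
error_rate = 0.001  # 0.1% of citizens check the "non-citizen" box by mistake

# Expected number of citizens misclassified as non-citizens.
false_noncitizens = citizens * error_rate

# As in the example, true non-citizens are assumed to be classified correctly.
reported_noncitizens = noncitizens + false_noncitizens
share_misclassified = false_noncitizens / reported_noncitizens

# HYPOTHETICAL: assume misclassified citizens vote at 70% and no real
# non-citizen votes at all. This is an illustrative assumption only.
HYPOTHETICAL_CITIZEN_TURNOUT = 0.70
apparent_noncitizen_voters = false_noncitizens * HYPOTHETICAL_CITIZEN_TURNOUT
apparent_rate = apparent_noncitizen_voters / reported_noncitizens

print(f"misclassified citizens: {false_noncitizens:.1f}")
print(f"reported non-citizens who are really citizens: {share_misclassified:.1%}")
print(f"apparent non-citizen voting rate: {apparent_rate:.1%}")
```

This prints 19.5 misclassified citizens (the quote's "19 people"), about 3.8% of reported non-citizens actually being citizens, and an apparent non-citizen voting rate of about 2.6% under the hypothetical turnout assumption.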

They go further, using the CCES panel study to estimate how often citizens are actually misclassified as non-citizens in the survey:

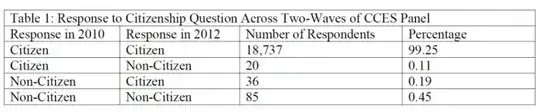

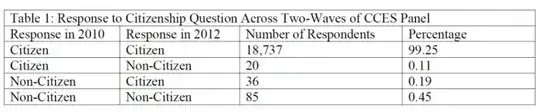

> First, the citizenship classification in the CCES has a reliability rate of 99.9 percent. The citizenship question was asked in the 2010 and 2012 waves of a panel study conducted by CCES. Of those who stated that they were citizens in 2010, 99.9 percent stated that they were citizens in 2012, but 0.1 percent indicated on the 2012 survey form that they were non-citizen immigrants. This is a very high reliability rate and very low misclassification rate for self-identification questions. See Table 1.

Source: "The Perils of Cherry Picking Low Frequency Events in Large Sample Surveys"

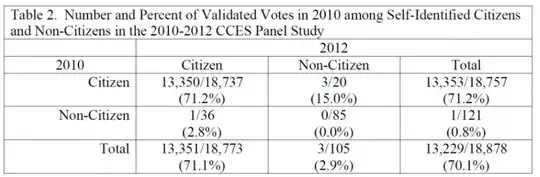

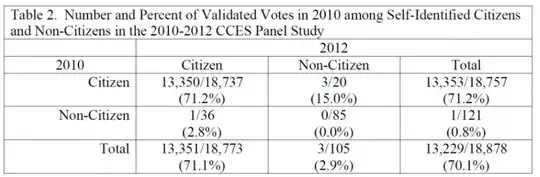

> Third, the panel shows clear evidence that the respondents who were identified as non-citizen voters by Richman et al. were misclassified. Clearly misclassified observations are the 20 respondents who reported being citizens in 2010 and non-citizens in 2012. Of those 20 respondents, a total of 3 respondents are classified by Catalist as having voted in 2010. Additionally, exactly 1 person is estimated to have voted in 2010, having been a non-citizen in 2010 and a citizen in 2012. (Note: This might not be an error as the person could have legally become a citizen in the intervening two years.) Both of these categories might include some citizens who are incorrectly classified as non-citizens in one of the waves.

> Importantly, the group with the lowest likelihood of classification errors consists of those who reported being non-citizens in both 2010 and 2012. In this set, 0 percent of respondents cast valid votes. That is, among the 85 respondents who reported being non-citizens in 2010 and non-citizens in 2012, there are 0 valid voters for 2010.

Source: "The Perils of Cherry Picking Low Frequency Events in Large Sample Surveys"
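To make the comparison in these quotes concrete, the sketch below computes the verified-vote rate for the two panel groups whose sizes are given in the text (20 and 85 respondents). The group who reported being non-citizens in 2010 and citizens in 2012 is left out because the quoted text gives only its single verified voter, not its size. The dictionary layout and the `verified_rate` helper are illustrative choices, not part of the CCES analysis.

```python
# Verified 2010 voting among CCES panel respondents, grouped by how they
# answered the citizenship question in 2010 and 2012 (counts from the quote).
panel_groups = {
    "citizen 2010 -> non-citizen 2012": {"respondents": 20, "verified_voters": 3},
    "non-citizen in both waves": {"respondents": 85, "verified_voters": 0},
}


def verified_rate(group: str) -> float:
    """Share of a panel group with a Catalist-verified 2010 vote."""
    counts = panel_groups[group]
    return counts["verified_voters"] / counts["respondents"]


for group, counts in panel_groups.items():
    print(
        f"{group}: {counts['verified_voters']}/{counts['respondents']}"
        f" = {verified_rate(group):.1%}"
    )
```

The inconsistent answerers show a 15% verified-vote rate, while those who consistently reported being non-citizens show none, which is the pattern the authors point to as evidence of misclassification.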

In summary:

- It is true that a small number of respondents to a large election survey reported both that they had voted and that they were not citizens.

- It is not true that the citizenship status of any of these people has been verified; it is entirely self-reported. Voting status, by contrast, is verified by matching respondents against Catalist's voter databases.

- It is entirely possible that a tiny error rate in self-reported citizenship could produce a large and impressive-sounding number of apparent non-citizen voters once the survey results are extrapolated to the whole population. The panel data support this explanation: 3 of the 20 respondents who reported being citizens in 2010 but non-citizens in 2012 had verified votes, while none of the 85 who reported being non-citizens in both waves did.

*I looked for a similar guide and summary for the 2008 CCES but did not find one on the CCES website; this answer could be improved if anyone can find it.