In the 1975 software project management book, The Mythical Man Month: Essays on Software Engineering, Fred Brooks states that, no matter the programming language chosen, a professional developer will write an average of 10 lines of code (LoC) per day.

> Productivities in [the] range of 600-800 debugged instructions per man-year were experienced by control program groups. Productivities in the [range of] 2000-3000 debugged instructions per man-year were achieved by [OS/360] language translator groups. These include planning done by the group, coding, component test, system test, and some support activities.

-Page 93 of "The Mythical Man Month" (1975)

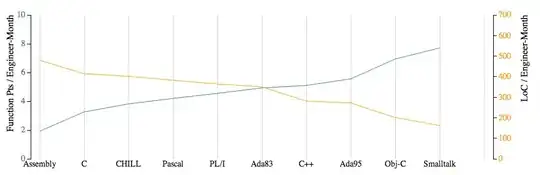

The book also quotes figures for other projects, noting for example that an operating system is more complicated, and therefore slower to write, than other types of software. However, the figure of 2,000 statements per year is virtually identical to the often-claimed 10 LoC/day (2,000 statements spread over 200 to 250 working days is 8 to 10 per day). Further, it appears on nearly the same page as the other part of the claim: that LoC/day seems independent of the programming language being used.

> Productivity [in LoC] seems constant [across languages] in terms of elementary statements [so it's better to use a higher-level language if you can], a conclusion that is reasonable in terms of the thought a statement requires and the errors it may include.

-Page 94 of "The Mythical Man Month" (1975)

This claim is still being made today (in 2006, at least). From Jeff Atwood's blog, Coding Horror:

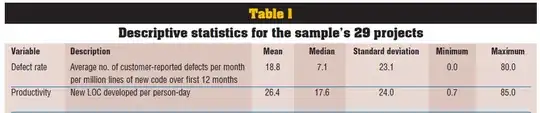

| Project Size | Lines of code (per year) | COCOMO average |
|---|---|---|
| 10,000 LOC | 2,000 - 25,000 | 3,200 |
| 100,000 LOC | 1,000 - 20,000 | 2,600 |
| 1,000,000 LOC | 700 - 10,000 | 2,000 |
| 10,000,000 LOC | 300 - 5,000 | 1,600 |

Dividing the COCOMO averages by 250 work days per year gives 6.4 to 12.8 LoC/day, a range that encompasses the 10 LoC/day claim from the 1975 book.
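For anyone who wants to check the arithmetic, here is a minimal Python sketch of the conversion. It assumes the same 250 work days per year used above and applies it to both the COCOMO averages and the ranges quoted from Brooks. The names `per_day`, `cocomo_avg_per_year` and `brooks_per_year` are mine, purely for illustration.

```python
# Assumption: 250 work days per year, as used in the question text.
WORK_DAYS_PER_YEAR = 250

# COCOMO average lines of code per year, keyed by project size in LoC.
cocomo_avg_per_year = {
    10_000: 3_200,
    100_000: 2_600,
    1_000_000: 2_000,
    10_000_000: 1_600,
}

# Ranges of debugged instructions per man-year quoted from Brooks (p. 93).
brooks_per_year = {
    "control program groups": (600, 800),
    "language translator groups": (2_000, 3_000),
}


def per_day(loc_per_year, work_days=WORK_DAYS_PER_YEAR):
    """Convert a yearly line count into a per-work-day rate."""
    return loc_per_year / work_days


cocomo_per_day = {size: per_day(avg) for size, avg in cocomo_avg_per_year.items()}
brooks_per_day = {
    group: (per_day(low), per_day(high))
    for group, (low, high) in brooks_per_year.items()
}

for size, rate in cocomo_per_day.items():
    print(f"COCOMO, {size:>10,} LoC project: {rate:.1f} LoC/day")

for group, (low, high) in brooks_per_day.items():
    print(f"Brooks, {group}: {low:.1f} to {high:.1f} LoC/day")
```

Under that 250-day assumption, only the language translator figures from Brooks land near 10 LoC/day; the control program figures come out noticeably lower.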

Is it true that professional programmers produce code at around 10 LoC/day?