We have a strange issue in our network. According to networkengineering.stackexchange it is off-topic there, even though in my eyes it is a network problem.

We first saw it when we tried to restore SQL databases to a test DB. The restore failed, and the Windows event log showed iSCSI errors: the mounted iSCSI disk seems to lose its connection very often. (The backup is restored with Veeam, which mounts the backup file as an iSCSI volume; the target is the physical backup server and the initiator is the virtual SQL server.)

We did some testing, and it is not only an iSCSI issue: it also happens when we copy files between physical and virtual servers. Our monitoring shows a high error count during the copy process. The strange thing is that we do not see any errors on the switch.

On the switch port of the virtual server (the switch is a Netgear M5300), the counters "Packets Received > 1518 Octets" and "Packets Transmitted > 1518 Octets" go through the roof when we copy large files, while "Packets RX and TX" larger than 1518 stays at 0. In every test this happens only on the port of the ESX host, never on the port of the other server.

All ports (switch, vSwitch, port groups, server interfaces) have the MTU set to the default (1518 / 1500). We rebooted the backup server and the ESX host with all its VMs, and disabled and re-enabled the switch ports. Wireshark on the sending server shows large packets (64 KB), but according to the switch statistics this port only receives normal 1518-byte frames.
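The "1518 / 1500" pair is the same limit seen at two layers: 1500 is the IP MTU, 1518 is the resulting Ethernet frame. A minimal sketch of that arithmetic, assuming plain Ethernet II framing and counting only header, payload and FCS (preamble and inter-frame gap are not part of the frame length). The function name `frame_size` is hypothetical.

```python
# Sizes in octets (bytes) for Ethernet II framing.
ETH_HEADER = 14   # destination MAC (6) + source MAC (6) + EtherType (2)
VLAN_TAG = 4      # one IEEE 802.1Q tag: TPID (2) + TCI (2)
FCS = 4           # frame check sequence (CRC-32)


def frame_size(ip_mtu: int, vlan_tags: int = 0) -> int:
    """Return the on-wire frame length of a full-size packet at the given IP MTU."""
    return ETH_HEADER + VLAN_TAG * vlan_tags + ip_mtu + FCS


if __name__ == "__main__":
    print(frame_size(1500))     # untagged frame: 1518
    print(frame_size(1500, 1))  # frame with one 802.1Q tag: 1522
```

With the default MTU, an untagged full-size frame is exactly 1518 bytes. Any tag added on a trunk port pushes it past that value.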

It only seems to happen with this one test ESX host, with all the VMs we have on it, and even when we upload files to the ESX datastore.

I do not know where else to look. The only thing we have not rebooted yet is the switch itself: since it is a core component of the network, we cannot do this during production hours (and production is 24/7). We will try it on the weekend, but if anyone has a tip on where to look, I would appreciate it.

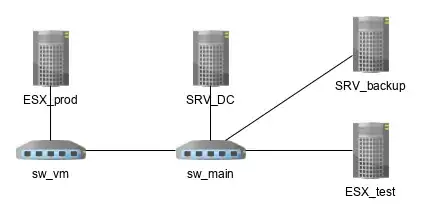

EDIT: For the sake of completeness, here is a small topology:

EDIT2: I did some more tests: the errors are only visible on uplink ports that carry multiple VLANs. If I use only a single untagged VLAN, there are no errors and no packets over 1518 anywhere.

Thinking about it now, a frame with a VLAN tag would be 1522 bytes. Some switches do not care about this and some do, and the MTU is the default everywhere. I do not want to stop using tagged VLANs with VMware... Any ideas?
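To see where the extra four bytes come from, here is a small sketch that builds a single-tagged 802.1Q frame around a full 1500-byte payload using only the Python standard library. The function name, MAC addresses and VLAN ID 20 are hypothetical. The FCS is computed with `zlib.crc32`, which uses the same CRC-32 as the Ethernet FCS, and no padding is added for short payloads.

```python
import struct
import zlib

TPID_8021Q = 0x8100      # Tag Protocol Identifier of an 802.1Q VLAN tag
ETHERTYPE_IPV4 = 0x0800  # EtherType of the encapsulated payload


def build_tagged_frame(dst: bytes, src: bytes, vlan_id: int, payload: bytes,
                       priority: int = 0) -> bytes:
    """Build an Ethernet II frame with one 802.1Q tag, FCS included (hypothetical helper)."""
    if len(dst) != 6 or len(src) != 6:
        raise ValueError("MAC addresses must be 6 bytes")
    if not 0 < vlan_id < 4095:
        raise ValueError("VLAN ID must be in 1..4094")
    if not 0 <= priority <= 7:
        raise ValueError("priority (PCP) must be in 0..7")
    # TCI = PCP (3 bits) | DEI (1 bit, left at 0) | VID (12 bits)
    tci = (priority << 13) | vlan_id
    # 6 + 6 + 2 (TPID) + 2 (TCI) + 2 (EtherType) = 18-byte header instead of 14
    header = dst + src + struct.pack("!HHH", TPID_8021Q, tci, ETHERTYPE_IPV4)
    body = header + payload
    # CRC-32 over header and payload, transmitted least significant byte first
    fcs = struct.pack("<I", zlib.crc32(body))
    return body + fcs


if __name__ == "__main__":
    frame = build_tagged_frame(
        dst=b"\x00\x11\x22\x33\x44\x55",  # hypothetical MAC
        src=b"\x66\x77\x88\x99\xaa\xbb",  # hypothetical MAC
        vlan_id=20,                       # hypothetical VLAN
        payload=bytes(1500),              # full 1500-byte IP packet
    )
    print(len(frame))  # 1522: 18 (tagged header) + 1500 + 4 (FCS)
```

The tag sits between the source MAC and the EtherType, so a full-size packet on a tagged port is 1522 bytes on the wire even though nothing about the IP MTU changed.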