Background

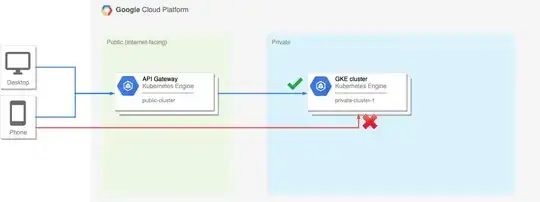

I have a GCP project with two GKE clusters: public-cluster and private-cluster. public-cluster runs an API gateway which performs centralized authentication, logging, rate limiting, etc., and reroutes requests to backend microservices running on private-cluster. The API gateway is built using Ocelot.

public-cluster is internet-facing and uses ingress-nginx to expose a public external IP address which accepts HTTP/HTTPS traffic on ports 80/443.

The intention for private-cluster is that it can only accept port 80/443 traffic from public-cluster. We do not want this cluster to be accessible from anywhere else, i.e. no direct HTTP/HTTPS requests to private-cluster, either from within the VPC or externally, unless the traffic is from public-cluster. I have exposed the services running on private-cluster using an internal load balancer, so each service has its own internal IP. The API gateway uses these internal IPs to reroute inbound requests to the backend microservices.

public-cluster and private-cluster are on separate subnets within the same region within the same VPC.

The intended architecture is shown in the diagram above.

The problem

I am trying to create firewall rules which will block all traffic to the private-cluster unless it comes from the public-cluster, as follows:

- One ingress rule with a low priority which denies all traffic to private-cluster (using the network tag as the target) and 0.0.0.0/0 as the source IP range
- A higher priority ingress rule where:
  - Target = private-cluster
  - Source filter = public-cluster
  - Allows TCP traffic on ports 80 and 443
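To make the intent of the two rules concrete, here is a minimal Python sketch of how I understand them to be evaluated. It is a toy model, not GCP's implementation: the rule names, the priority numbers (1000 and 65000), and the sample IP addresses are made up for illustration, and the model ignores protocol (it only checks ports). It assumes that a lower priority number is evaluated first, that a deny wins over an allow at equal priority, and that ingress is denied when nothing matches.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass
class Rule:
    name: str
    priority: int              # lower number = evaluated first
    action: str                # "allow" or "deny"
    target_tag: str            # network tag of the destination nodes
    source_ranges: tuple = ()  # CIDR strings
    source_tags: tuple = ()    # network tags of the sender
    ports: tuple = ()          # empty tuple = all ports


@dataclass
class Packet:
    src_ip: str
    src_tags: frozenset        # tags the firewall associates with the sender (modelling assumption)
    dst_tag: str
    port: int


def matches(rule: Rule, pkt: Packet) -> bool:
    """Return True if the rule applies to this packet."""
    if rule.target_tag != pkt.dst_tag:
        return False
    if rule.ports and pkt.port not in rule.ports:
        return False
    by_range = any(ip_address(pkt.src_ip) in ip_network(r) for r in rule.source_ranges)
    by_tag = any(t in pkt.src_tags for t in rule.source_tags)
    return by_range or by_tag


def evaluate(rules, pkt: Packet) -> str:
    """Apply the first matching rule in priority order; deny if none match."""
    for rule in sorted(rules, key=lambda r: (r.priority, r.action != "deny")):
        if matches(rule, pkt):
            return rule.action
    return "deny"


# Hypothetical names and priorities standing in for the two rules in the question.
RULES = [
    Rule("deny-all-to-private", 65000, "deny", "private-cluster",
         source_ranges=("0.0.0.0/0",)),
    Rule("allow-public-to-private", 1000, "allow", "private-cluster",
         source_tags=("public-cluster",), ports=(80, 443)),
]

# Made-up packets: one the firewall sees as tagged, one it does not.
tagged = Packet("10.0.0.5", frozenset({"public-cluster"}), "private-cluster", 443)
untagged = Packet("10.0.0.9", frozenset(), "private-cluster", 443)
tagged_ssh = Packet("10.0.0.5", frozenset({"public-cluster"}), "private-cluster", 22)

print(evaluate(RULES, tagged))      # allowed by the higher-priority rule
print(evaluate(RULES, untagged))    # falls through to the deny-all rule
print(evaluate(RULES, tagged_ssh))  # port 22 is not in the allow rule
```

In this model the outcome depends entirely on whether the firewall associates the public-cluster tag with the packet's source, which is the behaviour I am unsure about for traffic that originates from the API gateway rather than from the node itself.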

If I SSH onto a node within the public-cluster and fire curl requests to a service on the private-cluster (using the service's internal load balancer IP), the firewall rules above correctly allow the traffic. However, if I fire requests from my local machine to the public-cluster API Gateway, the firewall rules block the traffic. It seems like in this case the network tag in the firewall rule is being ignored.

I have tried a few things to get the rules working (all of which have been unsuccessful), such as:

- using the subnet IP range that public-cluster sits on as the source filter IP range

- using the subnet's gateway IP as the source filter IP

- using public-cluster's nginx external IP as the source IP
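For reference, a small Python helper like the following could be used to check which of these source filters would actually contain a given source address. Every value here is a placeholder: the CIDRs do not come from my real project, and the observed address would have to be taken from wherever the real source IP is visible (for example, firewall rule logging, if it is enabled).

```python
from ipaddress import ip_address, ip_network

# Placeholder values standing in for the three source filters tried above.
CANDIDATE_SOURCES = {
    "public-cluster subnet range": "10.0.0.0/24",
    "subnet gateway IP": "10.0.0.1/32",
    "nginx external IP": "203.0.113.10/32",
}


def matching_sources(observed_ip, candidates=CANDIDATE_SOURCES):
    """Return the labels of all candidate ranges that contain observed_ip."""
    addr = ip_address(observed_ip)
    return [label for label, cidr in candidates.items()
            if addr in ip_network(cidr)]


# A made-up address inside the placeholder subnet range.
print(matching_sources("10.0.0.7"))
# A made-up address that none of the placeholder filters contain.
print(matching_sources("192.0.2.1"))
```

If the address the firewall sees falls outside all of the candidate ranges, none of the source filters above could match, regardless of rule priority.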

Questions

So, my questions are:

- What is the correct way to define this firewall rule so that requests rerouted from the API gateway running on public-cluster are allowed through the firewall to private-cluster?
- More generally, is this a typical architecture pattern for Kubernetes clusters (i.e. having a public-facing cluster running an API gateway which reroutes requests to a backend non-public-facing cluster) and, if not, is there a better way to architect this? (I appreciate this is a very subjective question, but I am interested to hear about alternative approaches.)