I have two Windows Server 2016 guests hosted on Hyper-V Server 2016, set up as a guest cluster. The cluster is very unreliable: one of the nodes gets quarantined constantly (multiple times per day).

I also have a Windows Server 2012R2 cluster. It is hosted on the same Hyper-V hosts and has no issues whatsoever. That means the 2012R2 and 2016 clusters share the same networking and Hyper-V infrastructure.

Further configuration of the 2016 nodes:

- In Network Connections, TCP/IPv6 is unchecked for all adapters. I'm aware that this doesn't actually disable IPv6 for the cluster, because the cluster uses the hidden NetFT network adapter, which encapsulates IPv6 in IPv4 for heartbeats. I have the same configuration on the healthy 2012R2 nodes.

- Because the 2012R2 cluster worked the way I wanted without a witness, I initially configured the 2016 cluster the same way. While troubleshooting these issues, I added a File Share Witness to the 2016 cluster, with no change.

- Network Validation Report completes successfully

I know WHAT happens, but don't know WHY. The WHAT:

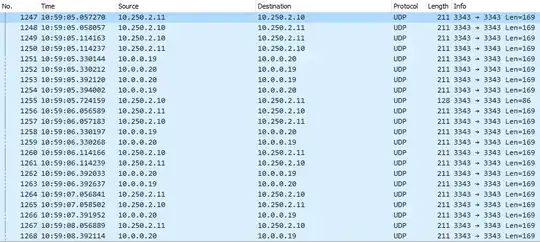

- The cluster plays ping-pong with heartbeat UDP packets on port 3343 over multiple interfaces between both nodes. Packets are sent approximately once per second.

- Suddenly one node stops playing ping-pong and no longer responds. The other node keeps trying to deliver heartbeats.

- The cluster log shows that the node removed its routing information:

000026d0.000028b0::2019/06/20-10:58:06.832 ERR [CHANNEL fe80::7902:e234:93bd:db76%6:~3343~]/recv: Failed to retrieve the results of overlapped I/O: 10060

000026d0.000028b0::2019/06/20-10:58:06.909 ERR [NODE] Node 1: Connection to Node 2 is broken. Reason (10060)' because of 'channel to remote endpoint fe80::7902:e234:93bd:db76%6:~3343~ has failed with status 10060'

...

000026d0.000028b0::2019/06/20-10:58:06.909 WARN [NODE] Node 1: Initiating reconnect with n2.

000026d0.000028b0::2019/06/20-10:58:06.909 INFO [MQ-...SQL2] Pausing

000026d0.000028b0::2019/06/20-10:58:06.910 INFO [Reconnector-...SQL2] Reconnector from epoch 1 to epoch 2 waited 00.000 so far.

000026d0.00000900::2019/06/20-10:58:08.910 INFO [Reconnector-...SQL2] Reconnector from epoch 1 to epoch 2 waited 02.000 so far.

000026d0.00002210::2019/06/20-10:58:10.910 INFO [Reconnector-...SQL2] Reconnector from epoch 1 to epoch 2 waited 04.000 so far.

000026d0.00002fc0::2019/06/20-10:58:12.910 INFO [Reconnector-...SQL2] Reconnector from epoch 1 to epoch 2 waited 06.000 so far.

...

000026d0.00001c54::2019/06/20-10:59:06.911 INFO [Reconnector-...SQL2] Reconnector from epoch 1 to epoch 2 waited 1:00.000 so far.

000026d0.00001c54::2019/06/20-10:59:06.911 WARN [Reconnector-...SQL2] Timed out, issuing failure report.

...

000026d0.00001aa4::2019/06/20-10:59:06.939 INFO [RouteDb] Cleaning all routes for route (virtual) local fe80::e087:77ce:57b4:e56c:~0~ to remote fe80::7902:e234:93bd:db76:~0~

000026d0.00001aa4::2019/06/20-10:59:06.939 INFO <realLocal>10.250.2.10:~3343~</realLocal>

000026d0.00001aa4::2019/06/20-10:59:06.939 INFO <realRemote>10.250.2.11:~3343~</realRemote>

000026d0.00001aa4::2019/06/20-10:59:06.939 INFO <virtualLocal>fe80::e087:77ce:57b4:e56c:~0~</virtualLocal>

000026d0.00001aa4::2019/06/20-10:59:06.939 INFO <virtualRemote>fe80::7902:e234:93bd:db76:~0~</virtualRemote>
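To put numbers on the timeline in the excerpt above, here is a minimal Python sketch that parses cluster log lines and measures the time between two events. The regular expression is inferred only from the lines quoted here (process ID, thread ID, timestamp, level, message), and the names `parse_line` and `seconds_between` are hypothetical helpers, not part of any cluster tooling.

```python
import re
from datetime import datetime

# Layout assumed from the excerpt: <pid>.<tid>::<timestamp> <LEVEL> <message>
LINE_RE = re.compile(
    r"^(?P<pid>[0-9a-f]{8})\.(?P<tid>[0-9a-f]{8})::"
    r"(?P<ts>\d{4}/\d{2}/\d{2}-\d{2}:\d{2}:\d{2}\.\d{3})\s+"
    r"(?P<level>[A-Z]+)\s+(?P<msg>.*)$"
)


def parse_line(line):
    """Return (timestamp, level, message), or None if the line does not match."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    ts = datetime.strptime(m.group("ts"), "%Y/%m/%d-%H:%M:%S.%f")
    return ts, m.group("level"), m.group("msg")


def seconds_between(lines, first_marker, second_marker):
    """Seconds from the first line containing first_marker to the next
    later line containing second_marker; None if either is missing."""
    first = second = None
    for line in lines:
        parsed = parse_line(line)
        if parsed is None:
            continue
        ts, _level, msg = parsed
        if first is None and first_marker in msg:
            first = ts
        elif first is not None and second is None and second_marker in msg:
            second = ts
    if first is None or second is None:
        return None
    return (second - first).total_seconds()


# Three lines copied from the excerpt above.
SAMPLE = [
    "000026d0.000028b0::2019/06/20-10:58:06.832 ERR [CHANNEL fe80::7902:e234:93bd:db76%6:~3343~]/recv: Failed to retrieve the results of overlapped I/O: 10060",
    "000026d0.00001c54::2019/06/20-10:59:06.911 WARN [Reconnector-...SQL2] Timed out, issuing failure report.",
    "000026d0.00001aa4::2019/06/20-10:59:06.939 INFO [RouteDb] Cleaning all routes for route (virtual) local fe80::e087:77ce:57b4:e56c:~0~ to remote fe80::7902:e234:93bd:db76:~0~",
]

print(seconds_between(SAMPLE, "overlapped I/O", "Cleaning all routes"))
```

On these lines the gap between the first 10060 error and the route cleanup is just over one minute, which matches the reconnector's 1:00.000 timeout in the log.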

Now the WHY part... Why does it do that? I don't know. Note that a minute earlier it complains: "Failed to retrieve the results of overlapped I/O". But I can still see UDP packets being sent and received until the route is removed at 10:59:06. From then on only one node pings and gets no pongs back. As seen in Wireshark, neither 10.0.0.19 nor 10.250.2.10 appears in the Source column any more.
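The "who stopped talking" check can also be done programmatically instead of by eye in Wireshark. The sketch below assumes the capture has already been reduced to `(seconds, source_ip)` pairs; that input shape, the function name `silent_sources`, and the timestamps in `PACKETS` are all made up for illustration and are not taken from the real capture.

```python
def silent_sources(packets, cutoff):
    """Return the source IPs whose last packet was seen before `cutoff`.

    packets: iterable of (timestamp_in_seconds, source_ip) tuples
    cutoff:  timestamp after which a healthy sender should still appear
    """
    last_seen = {}
    for ts, src in packets:
        if src not in last_seen or ts > last_seen[src]:
            last_seen[src] = ts
    return sorted(src for src, ts in last_seen.items() if ts < cutoff)


# Illustrative data only: both nodes exchange heartbeats about once per
# second, then 10.250.2.10 goes quiet while 10.250.2.11 keeps sending.
PACKETS = [
    (0.0, "10.250.2.10"), (0.1, "10.250.2.11"),
    (1.0, "10.250.2.10"), (1.1, "10.250.2.11"),
    (2.1, "10.250.2.11"), (3.1, "10.250.2.11"),
]

print(silent_sources(PACKETS, cutoff=2.0))
```

With real data, the cutoff would be the moment the route is cleaned in the cluster log, so the capture and the log can be compared on the same timeline.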

The route is re-added after about 35 seconds, but that doesn't help: by then the node has already been quarantined for 3 hours.

What am I missing here?