We have a server with an 8-core CPU and 32 GB of RAM, serving about 1200 active users. We use the GitLab rake task for backups:

    sudo gitlab-rake gitlab:backup:create

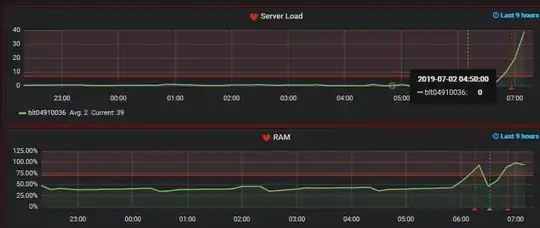

This process takes around 80 minutes, and GitLab is intermittently unusable during this time because CPU and RAM are completely saturated. See the screenshot.

Load average is at 40 and RAM consumption is at 100 percent.

CPU and RAM are fully consumed while the final tar is being created. Our backups will keep growing every day. Is there a way to optimize the performance of this job? The final tar is currently 17496780800 bytes (17.5 GB).
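For scale, the two figures above (archive size and total run time) can be turned into an average throughput. This is only a rough sketch: it assumes the 80 minutes covers the whole backup job rather than the tar step alone, and the function names are made up for illustration.

```shell
#!/bin/sh
# Figures taken from the post: final archive size and total job duration.
backup_bytes=17496780800
duration_min=80

# avg_mb_per_s BYTES MINUTES -> average throughput in decimal MB/s (2 decimals).
avg_mb_per_s() {
    awk -v b="$1" -v m="$2" 'BEGIN { printf "%.2f\n", b / (m * 60) / 1000000 }'
}

# bytes_to_gib BYTES -> size in GiB (2^30 bytes), 2 decimals.
bytes_to_gib() {
    awk -v b="$1" 'BEGIN { printf "%.2f\n", b / (1024 * 1024 * 1024) }'
}

echo "size:       $(bytes_to_gib "$backup_bytes") GiB"
echo "throughput: $(avg_mb_per_s "$backup_bytes" "$duration_min") MB/s"
```

Averaged over the whole run this comes out to only a few MB/s, which is small compared with the load the machine is under.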

`iostat` output while the job is running:

    Linux 3.10.0-957.10.1.el7.x86_64 (abcdefghi)  02/07/19  _x86_64_  (8 CPU)

    avg-cpu:   %user   %nice %system %iowait  %steal   %idle
                5.01    0.00    2.05    3.14    0.00   89.80

    Device:   rrqm/s  wrqm/s     r/s     w/s   rMB/s   wMB/s avgrq-sz avgqu-sz   await r_await w_await   svctm   %util
    fd0         0.00    0.00    0.00    0.00    0.00    0.00     8.00     0.00   42.20   42.20    0.00   42.20    0.00
    sda         0.04    0.27    1.88   26.14    0.10    0.32    30.77     0.24    8.41    4.42    8.70    0.14    0.38
    sdc         0.00    0.36    0.28    0.56    0.00    0.03    79.07     0.00    0.72    0.83    0.67    0.43    0.04
    sdd         0.01    0.40    2.51    1.19    0.08    0.11   107.56     0.01    2.23    2.85    0.90    0.63    0.23
    sdb         0.01    0.49    0.01    0.14    0.00    0.00    38.10     0.00    0.54    0.27    0.57    0.39    0.01
    dm-0        0.00    0.00    0.44    0.02    0.03    0.00   122.65     0.00    2.26    2.34    0.45    0.81    0.04
    dm-1        0.00    0.00    0.03    0.63    0.00    0.00     8.68     0.00    1.63    0.53    1.68    0.09    0.01
    dm-2        0.00    0.00    0.01    0.41    0.00    0.02    85.97     0.00    1.13    0.64    1.15    0.42    0.02
    dm-3        0.00    0.00    1.18   24.94    0.07    0.29    28.24     0.23    8.98    6.22    9.11    0.12    0.32
    dm-4        0.00    0.00    0.25    0.65    0.01    0.00    26.20     0.00    1.28    1.47    1.21    0.34    0.03
    dm-5        0.00    0.00    0.02    0.00    0.00    0.00    34.01     0.00    2.21    1.17  233.00    1.40    0.00
    dm-6        0.00    0.00    0.02    0.37    0.00    0.00    15.53     0.00    1.97    4.37    1.87    0.32    0.01
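One caveat on reading these numbers: when `iostat` is run once without an interval, its report gives averages since boot, which may be why the output shows about 90% idle even though the box is saturated during the backup. Below is a minimal sketch of how interval samples could be captured for the duration of the job. It assumes sysstat's `iostat` with the `-x` and `-m` flags (which the columns above suggest were used); the function name `sample_while`, the `SAMPLER` variable, and the log path are hypothetical.

```shell
#!/bin/sh
# sample_while LOGFILE JOB [ARGS...]
# Runs a sampler in the background (default: "iostat -xm 5", i.e. one
# extended report in MB/s every 5 seconds), logs it to LOGFILE, and stops
# it when JOB exits. Returns JOB's exit status.
# SAMPLER is a hypothetical override so another tool can be swapped in.
sample_while() {
    log=$1
    shift
    # Intentionally unquoted so "iostat -xm 5" splits into command + args.
    ${SAMPLER:-iostat -xm 5} > "$log" 2>&1 &
    sampler_pid=$!

    status=0
    "$@" || status=$?

    # Stop the sampler and reap it; ignore errors if it already exited.
    kill "$sampler_pid" 2>/dev/null || true
    wait "$sampler_pid" 2>/dev/null || true
    return "$status"
}

# Example invocation (left commented out so sourcing this has no side effects):
# sample_while /tmp/iostat-backup.log sudo gitlab-rake gitlab:backup:create
```

In the resulting log, the first report is still the since-boot average; the reports after it reflect each 5-second window while the backup was running.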

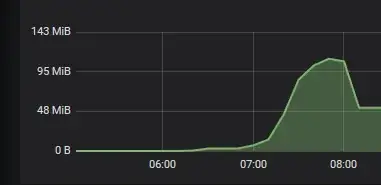

Swap usage while the job is running: