I pretty much followed the same general idea as here: Resizing a partition

-Resize VMware disk: Through vSphere, resize disk from 100GB to 300GB

(Reboot VM)

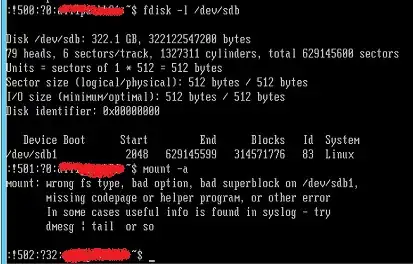

-Delete partition

(fdisk /dev/sdb, d, 1)

-Recreate partition

(While still in the same fdisk session with /dev/sdb, n, p, 1, <defaults>)

(Reboot VM)

Unfortunately, now the XFS FS will no longer mount.

I'm basically getting a "bad superblock" error. What I'm trying to find out is where the superblock actually resides: inside the partition, or at the very beginning of the disk?

Now:

When I try an xfs_repair -n, it scans for quite some time and eventually gives up.

xfs_repair -n /dev/sdb1

Phase 1 - find and verify superblock...

bad primary superblock - bad magic number !!!

attempting to find secondary superblock...

.....<> .....

found candidate secondary superblock

unable to verify superblock continuing...

.....<> .....

Sorry, could not find valid secondary superblock

Exiting now.

When I deleted and recreated the partition, should I have noted the starting sector? I now notice that partition 1 defaults to a start of 2048, whereas on similar systems the first partition starts at 63.

Yes, I didn't think that recording the start of the old partition before deleting it was important. It never came up in any of my recent searching, and it is perhaps the key here.
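For next time, a minimal sketch of how the old layout could have been recorded before touching it. `sfdisk -d` dumps the partition table as text without modifying anything. The helper names `backup_table` and `start_sector_of` and the file name `sdb.table.bak` are made up for illustration, and the parsing assumes the usual `start=` field in the dump.

```shell
# Sketch: save the partition table before editing it, then read back a start sector.
# backup_table and start_sector_of are hypothetical helper names.

backup_table() {
    # $1 = whole-disk device (e.g. /dev/sdb), $2 = output file
    # sfdisk -d only reads the table and prints it as text
    sfdisk -d "$1" > "$2"
}

start_sector_of() {
    # $1 = dump file written by backup_table, $2 = partition node (e.g. /dev/sdb1)
    # Prints the number that follows "start=" on that partition's line
    sed -n "s|^$2 *: *start= *\([0-9][0-9]*\).*|\1|p" "$1"
}

# Example usage (needs root and a real disk, so it is left commented out):
# backup_table /dev/sdb sdb.table.bak
# start_sector_of sdb.table.bak /dev/sdb1
```

With the start sector written down, the partition can be recreated in fdisk by typing that number at the "First sector" prompt instead of accepting the default.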

Perhaps my original superblock is in the 63-2048 range? I've copied the VM so that I can try a few things without toying too much with the original VM. Unfortunately, that copy was taken after I broke the original.
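One read-only way to test that guess: as far as I know, the primary XFS superblock sits in the first sector of the file system, so at the partition's start sector, and begins with the magic bytes `XFSB`. A minimal sketch, assuming 512-byte sectors and that `/dev/sdb` is the right disk; `has_xfs_magic` is a hypothetical helper name and the two candidate sectors are the ones mentioned above.

```shell
# Read-only check: do the bytes "XFSB" sit at the start of a given sector?
# Assumes 512-byte sectors; DISK and the candidate sectors are assumptions.

has_xfs_magic() {
    # $1 = device or image file, $2 = sector number to inspect
    # tr strips NUL bytes so the shell does not complain about them
    magic=$(dd if="$1" bs=512 skip="$2" count=1 2>/dev/null | head -c 4 | tr -d '\0')
    [ "$magic" = "XFSB" ]
}

DISK=${DISK:-/dev/sdb}
if [ -r "$DISK" ]; then
    for sector in 63 2048; do
        if has_xfs_magic "$DISK" "$sector"; then
            echo "XFS magic found at sector $sector"
        else
            echo "no XFS magic at sector $sector"
        fi
    done
fi
```

If the magic shows up at sector 63 and not at 2048, that would support the idea that the recreated partition simply starts in the wrong place. Nothing here writes to the disk, so it should be safe to run on the copy first.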

UFS Explorer (https://www.ufsexplorer.com/ufs-explorer-standard-recovery.php), which came up during my searches, sees the XFS file system and seemingly all of its contents (via a scan of the VMDK).