We're in the process of migrating ~500 GB of data from our end-of-life, in-house server to a new Citrix-based hosted DFS.

Our provider has been reporting problems syncing the data for some time, saying that every time a user changes a file, the sync has to be redone.
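We don't know which tool the provider actually uses. As a generic illustration only, a mirror that syncs incrementally can detect what changed between two passes by comparing each file's size and modification time, so only those files need re-copying. This is a minimal sketch under that assumption; the function names are hypothetical, and real tools may also compare checksums.

```python
import os

def snapshot(root: str) -> dict[str, tuple[int, int]]:
    """Map each file path (relative to root) to its (size, mtime_ns)."""
    state = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            try:
                st = os.stat(full)
            except FileNotFoundError:
                continue  # file was deleted mid-scan on a live share; skip it
            state[os.path.relpath(full, root)] = (st.st_size, st.st_mtime_ns)
    return state

def diff_snapshots(old: dict, new: dict) -> tuple[list, list, list]:
    """Return (added, changed, removed) paths between two snapshots."""
    added = sorted(p for p in new if p not in old)
    removed = sorted(p for p in old if p not in new)
    changed = sorted(p for p in new if p in old and new[p] != old[p])
    return added, changed, removed
```

Between two passes, only `added` and `changed` files would need copying again (and `removed` ones deleting on the target). The walk itself still has to read metadata for every file, which is one reason very large file counts stay slow even when little has changed.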

We've migrated the bulk of the data, but we are struggling with our "Client" folders, which is where most of our work is done. These contain the usual office files, PDFs, etc., but also files from applications such as Sage (accounting software).

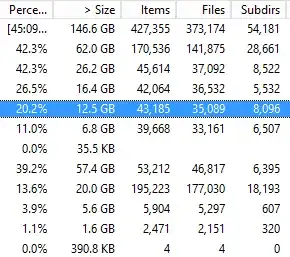

I'm currently running a DirStat scan on one of our mapped drives. It's about 30% complete and has already found over 500,000 files, ranging from 1 KB up to about 250 MB (many, many small ones, particularly for Sage).

Extrapolating, that suggests well over 1 million files in total, which is a lot. But we are a very small business compared to others, so we can't be the only company having this issue.
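A quick sanity check of that extrapolation, assuming the scan's progress percentage tracks file count (DirStat may measure progress by bytes or folders instead, so treat this as a rough estimate only):

```python
def estimate_total_files(files_seen: int, fraction_scanned: float) -> int:
    """Linearly extrapolate a partial scan to the whole drive.

    Assumes the unscanned part has the same file density as the part
    scanned so far, which may not hold for uneven folder trees.
    """
    if not 0 < fraction_scanned <= 1:
        raise ValueError("fraction_scanned must be in (0, 1]")
    return round(files_seen / fraction_scanned)

# ~500,000 files found at ~30% complete
print(estimate_total_files(500_000, 0.30))
```

Under that assumption, the figure lands at roughly 1.7 million files.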

My question: is this a recognised problem when migrating from in-house to cloud using a mirrored sync, or are we missing something?

Sorry I don't have more specifics. I'm just our in-house IT guy relaying between the provider and the rest of the company, so my terminology is likely off.

My understanding is that for every single file, our new cloud server has to connect to our existing server, copy the file, close the connection, and move on to the next file. I can see this being very time-consuming, but I really don't know what else we can do to speed up the process.
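To see why file count matters as much as total size, here is a rough back-of-the-envelope model: total time ≈ (number of files × fixed per-file cost) + (total bytes ÷ bandwidth). The 50 ms per-file cost and 100 Mbit/s link below are hypothetical placeholders, not measured figures from our setup or the provider's.

```python
def estimate_sync_hours(n_files: int, total_bytes: float,
                        per_file_overhead_s: float,
                        bandwidth_bytes_per_s: float) -> tuple[float, float]:
    """Return (per-file overhead hours, raw transfer hours).

    Simplified model (an assumption): each file pays a fixed cost for
    connect/open/close, and data moves sequentially at a constant bandwidth.
    """
    overhead_s = n_files * per_file_overhead_s
    transfer_s = total_bytes / bandwidth_bytes_per_s
    return overhead_s / 3600, transfer_s / 3600

# Hypothetical inputs: 1 million files, 500 GB, 50 ms per file, 100 Mbit/s.
overhead_h, transfer_h = estimate_sync_hours(
    n_files=1_000_000,
    total_bytes=500e9,
    per_file_overhead_s=0.05,
    bandwidth_bytes_per_s=100e6 / 8,  # 100 Mbit/s = 12.5 MB/s
)
print(f"overhead ~ {overhead_h:.1f} h, transfer ~ {transfer_h:.1f} h")
```

With these placeholder numbers, the per-file overhead (about 14 hours) exceeds the raw transfer time (about 11 hours), which is why many small files can take longer than a few large ones of the same total size.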

Thanks for any advice.