I have an Intel 760p NVMe drive hooked up to a Supermicro X11SRM-F with a Xeon W-2155 and 64GB of DDR4-2400 RAM. The specs for this drive claim 205K-265K IOPS (whatever 8GB span means) with about 3GB/s sequential read and 1.3GB/s sequential write.

I've tried using this drive under an LVM layer as well as on a bare partition, and I just can't get anywhere near the advertised performance out of it.

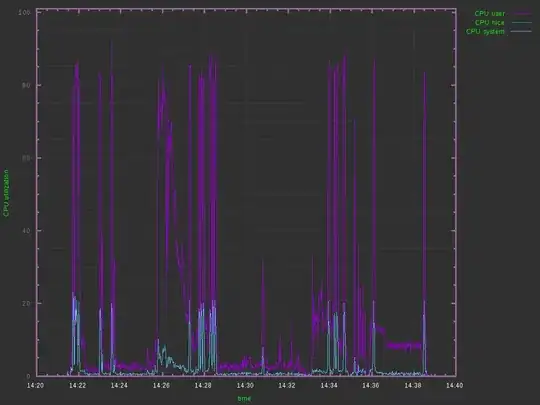

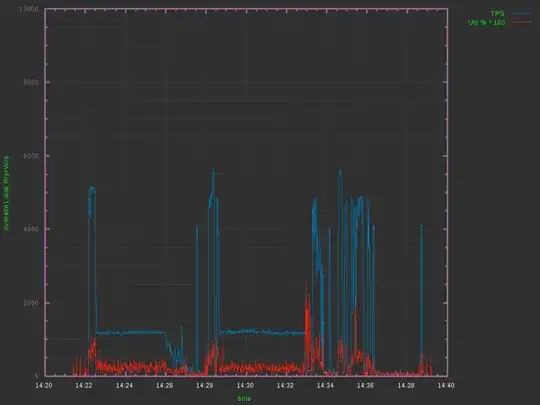

Running a typical process on the drive yields (via iostat) about 75MB/s write, with about 5K TPS (IOPS). iostat also shows disk utilization around 20% (graphs attached), so something else seems to be the bottleneck. At this point a regular Intel SSD over a SATA cable outperforms the drive. Any ideas what to look at?
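
As a rough sanity check on those iostat figures, dividing throughput by TPS gives the average request size the workload is issuing. This is only a sketch: the helper name `avg_io_kb` is made up for illustration, and it assumes decimal units (1 MB = 1000 KB).

```shell
#!/usr/bin/env bash
# Average request size implied by iostat: throughput divided by transfers/sec.
# avg_io_kb is a hypothetical helper, not part of iostat or sysstat.
avg_io_kb() {
    # $1 = throughput in MB/s, $2 = transfers per second (TPS)
    awk -v mbps="$1" -v tps="$2" 'BEGIN { printf "%.1f\n", mbps * 1000 / tps }'
}

avg_io_kb 75 5000   # prints 15.0 (KB per request)
```

Requests of roughly 15KB at 5K TPS would point to a fairly small-block workload, which is far from the large sequential transfers behind the headline throughput numbers.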

UPDATE:

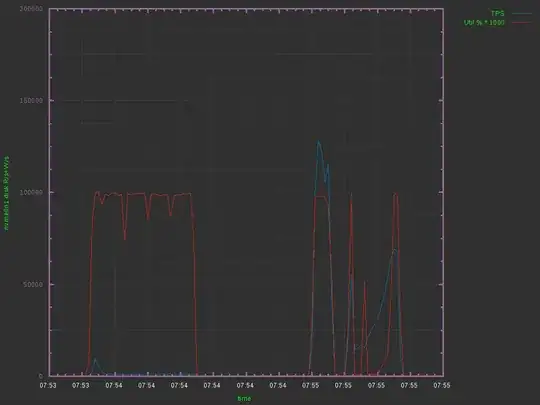

As @John Mahowald suggested, this seems to be an application (Ruby) bottleneck. The graphs are from the following fio commands (I had to bump up the graph scaling): ~700MB/s writes and well over 50K TPS:

    # full write pass
    fio --name=writefile --size=10G --filesize=80G \
        --filename=disk_test.bin --bs=1M --nrfiles=1 \
        --direct=1 --sync=0 --randrepeat=0 --rw=write --refill_buffers --end_fsync=1 \
        --iodepth=200 --ioengine=libaio

    # rand read
    fio --time_based --name=benchmark --size=80G --runtime=30 \
        --filename=disk_test.bin --ioengine=libaio --randrepeat=0 \
        --iodepth=128 --direct=1 --invalidate=1 --verify=0 --verify_fatal=0 \
        --numjobs=4 --rw=randread --blocksize=4k --group_reporting

    # rand write
    fio --time_based --name=benchmark --size=80G --runtime=30 \
        --filename=disk_test.bin --ioengine=libaio --randrepeat=0 \
        --iodepth=128 --direct=1 --invalidate=1 --verify=0 --verify_fatal=0 \
        --numjobs=4 --rw=randwrite --blocksize=4k --group_reporting
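
The runs above use deep queues and several jobs, which a single-threaded application probably does not. To see what the drive delivers for one synchronous writer, a queue-depth-1 run might look like the sketch below. The application's real I/O pattern is not known here: the 16k block size is an assumption taken from 75MB/s divided by 5K TPS, and `fio_qd1_cmd` and the job name `qd1-probe` are hypothetical names. The script only prints the command, so nothing is written until you run it yourself.

```shell
#!/usr/bin/env bash
# Sketch: build a fio command that approximates one synchronous writer.
# fio_qd1_cmd and the job name qd1-probe are hypothetical, for illustration only.
fio_qd1_cmd() {
    local target="${1:-disk_test.bin}"  # test file on the NVMe filesystem
    local bs="${2:-16k}"                # assumed request size (~75MB/s / 5K TPS)
    # psync issues one blocking pwrite at a time, so the effective queue depth is 1.
    printf 'fio --name=qd1-probe --filename=%s --size=10G --time_based --runtime=30 --ioengine=psync --iodepth=1 --numjobs=1 --direct=1 --rw=randwrite --bs=%s --group_reporting\n' \
        "$target" "$bs"
}

# Dry run: print the command so it can be reviewed before running it.
fio_qd1_cmd "$@"
```

If this lands near the 5K TPS seen with the real workload, the limit is likely per-request latency at low queue depth rather than the drive's peak throughput.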