Background

I got two ec2 instances running laravel app on an nginx server behind a load balancer. We had the brilliant idea of running stress testing on the instances using apache ab like so:

ab -c 350 -n 20000 -H "Accept:application/x.toters.v1+json" http://staging-load-balancer-2088007710.eu-west-1.elb.amazonaws.com/api?store_ids[]=223%26lat=33.883666%26lon=35.533966

This is ApacheBench, Version 2.3 <$Revision: 1706008 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking staging-load-balancer-2088007710.eu-west-1.elb.amazonaws.com (be patient)

Completed 2000 requests

Completed 4000 requests

Completed 6000 requests

Completed 8000 requests

Completed 10000 requests

Completed 12000 requests

Completed 14000 requests

apr_socket_recv: Connection reset by peer (104)

Total of 15912 requests completed

so it seems like it threw the towel near the end, running the stress tests again just dies immediately.

Question

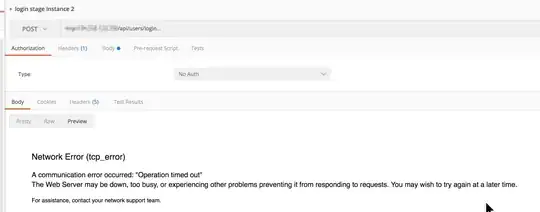

The problem is that now if we attempt to reach the instances (sidestepping the load balancer to isolate the problem) it just craps out, see the postman output:

and the nginx error logs show:

2017/11/28 13:27:41 [alert] 321#321: *120606 open socket #16 left in connection 163

2017/11/28 13:27:41 [alert] 321#321: *120629 open socket #34 left in connection 188

2017/11/28 13:27:41 [alert] 321#321: *120622 open socket #9 left in connection 213

2017/11/28 13:27:41 [alert] 321#321: *120628 open socket #25 left in connection 217

2017/11/28 13:27:41 [alert] 321#321: *120605 open socket #15 left in connection 244

2017/11/28 13:27:41 [alert] 321#321: *120614 open socket #41 left in connection 245

2017/11/28 13:27:41 [alert] 321#321: *120631 open socket #24 left in connection 255

2017/11/28 13:27:41 [alert] 321#321: *120616 open socket #23 left in connection 258

2017/11/28 13:27:41 [alert] 321#321: *120615 open socket #42 left in connection 269

2017/11/28 13:27:41 [alert] 321#321: aborting

restarting nginx didn't fix it, shutting down then turning on nginx didn't fix it. Ideas?