I'm trying to determine which hardware component is the bottleneck on my server. The server is mainly used for serving video files on a heavy-traffic site.

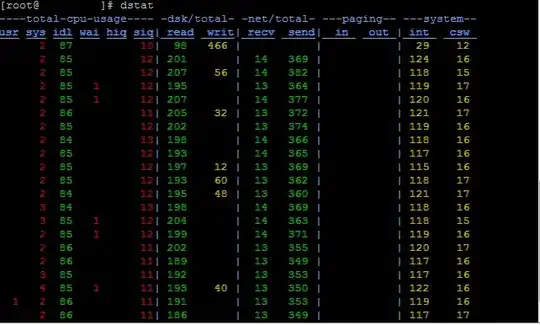

My dstat output looks like the following (the send column goes above 500 at peak hours):

The interface output below shows that the maximum speed is 4000Mb/s:

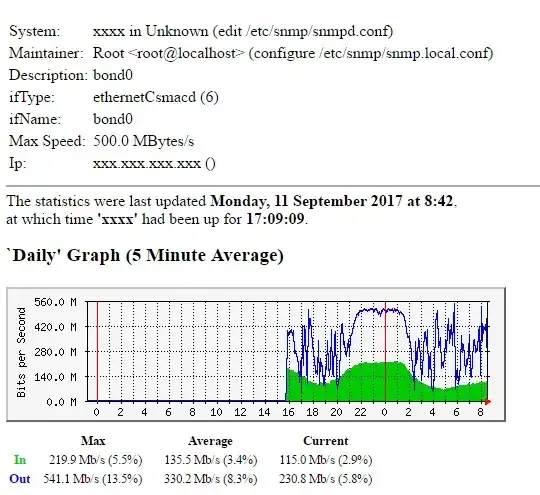
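As a unit sanity check on the two figures above, here is a minimal sketch. It assumes the dstat send column is in megabytes per second and the interface speed is in megabits per second; the function names are made up for illustration.

```python
def mbit_to_mbyte(mbit_per_s: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbit_per_s / 8.0


def link_utilisation(observed_mbyte_per_s: float, link_mbit_per_s: float) -> float:
    """Fraction of the link's capacity used by the observed send rate."""
    return observed_mbyte_per_s / mbit_to_mbyte(link_mbit_per_s)


# Assumed inputs: 4000 Mb/s link speed, 500 MB/s peak send rate.
capacity_mbyte = mbit_to_mbyte(4000)
peak_fraction = link_utilisation(500, 4000)
print(f"link capacity: {capacity_mbyte:.0f} MB/s, peak utilisation: {peak_fraction:.0%}")
```

If those unit assumptions hold, a peak of 500 MB/s is equal to the full capacity of a 4000Mb/s link.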

Below is the daily MRTG graph, showing a maximum speed of 500 MBytes:

The hard disk info, from smartctl -a /dev/sda:

HDD info:

Vendor: DELL

Product: PERC H710P

Revision: 3.13

User Capacity: 1,999,307,276,288 bytes [1.99 TB]

Logical block size: 512 bytes

Logical Unit id: 0x6b82a720d22304002116d6c01027fc4d

Serial number: 004dfc2710c0d61621000423d220a782

Device type: disk

Local Time is: Mon Sep 11 09:06:33 2017 CST

Device does not support SMART

Error Counter logging not supported

Device does not support Self Test logging

How do I know which one is causing the bottleneck? Using pure-ftp, I always get "listing directory failed", but as soon as I disable nginx I can list the directory immediately. I'm not sure whether the bottleneck is the maximum HDD read throughput or the network bandwidth. Please help me so that I can decide whether to add a new HDD or add bandwidth.
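One way to compare the two candidates might be to sample the kernel counters in /proc/diskstats and /proc/net/dev over the same interval and look at disk busy time next to transmit rate. The sketch below assumes a Linux host, the disk name sda (from the smartctl command above) and a hypothetical interface name eth0. Since the PERC H710P is a RAID controller, sda is a virtual disk, and a busy percentage near 100 on an array does not by itself prove the disks are saturated.

```python
import time


def parse_diskstats(text, device):
    """Return (sectors_read, ms_doing_io) for `device` from /proc/diskstats text."""
    for line in text.splitlines():
        fields = line.split()
        # Layout: major minor name reads merged sectors_read ms_reading
        #         writes merged sectors_written ms_writing in_flight ms_doing_io ...
        if len(fields) >= 13 and fields[2] == device:
            return int(fields[5]), int(fields[12])
    raise ValueError(f"device {device!r} not found")


def parse_net_dev(text, iface):
    """Return the transmitted byte counter for `iface` from /proc/net/dev text."""
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if sep and name.strip() == iface:
            # 8 receive columns come first; the 9th column is transmitted bytes.
            return int(rest.split()[8])
    raise ValueError(f"interface {iface!r} not found")


def rates(disk_before, disk_after, tx_before, tx_after, seconds):
    """Return (disk read MB/s, disk busy %, network send Mb/s) between two samples."""
    # /proc/diskstats counts sectors in fixed 512-byte units.
    read_mb_s = (disk_after[0] - disk_before[0]) * 512 / 1e6 / seconds
    busy_pct = (disk_after[1] - disk_before[1]) / (seconds * 1000) * 100
    send_mbit_s = (tx_after - tx_before) * 8 / 1e6 / seconds
    return read_mb_s, busy_pct, send_mbit_s


def sample(device="sda", iface="eth0", seconds=5):
    """Take two snapshots `seconds` apart and return the rates between them."""
    def snapshot():
        with open("/proc/diskstats") as disk, open("/proc/net/dev") as net:
            return parse_diskstats(disk.read(), device), parse_net_dev(net.read(), iface)

    disk0, tx0 = snapshot()
    time.sleep(seconds)
    disk1, tx1 = snapshot()
    return rates(disk0, disk1, tx0, tx1, seconds)
```

Calling sample("sda", "eth0", 5) at peak hour returns the three numbers as a tuple. A send rate sitting at the link speed while the disk busy percentage stays low would point at bandwidth, and the reverse would point at the disk.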