I'm not completely certain what is being asked, but I'll try to address each section as best I can.

Typically, load balancing via nginx is set up like so: load balancer > web server > db server. That is, the load balancer asks the web server for the page, and the web server gets its data from the db server.

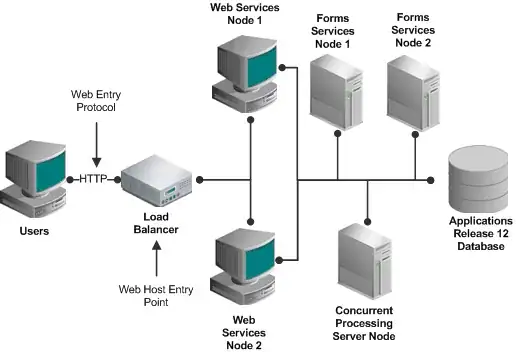

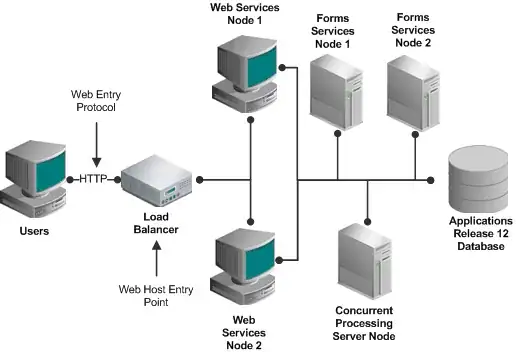

Logically, it would look like this (though the diagram has more than you need):

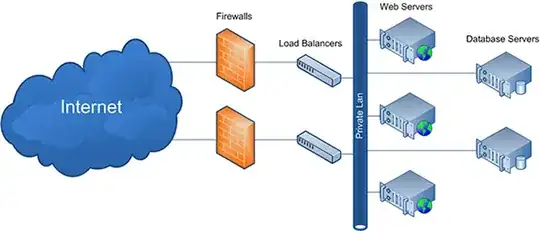

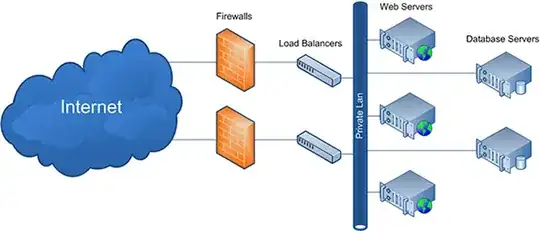

Physically, though, it would be set up like this:

So, all of the servers would be on the same VLAN. You can set it up differently, but this is just the most common setup.

As for how to set up the configuration, DigitalOcean actually has a guide on it already: https://www.digitalocean.com/community/tutorials/how-to-set-up-nginx-load-balancing

The most important part is the `upstream` block. It defines which web servers requests are sent to and, through the weights, what share of the traffic each one receives.
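
As a rough sketch of what that looks like (the group name `backend` and the 10.0.0.x addresses are made up for illustration, not taken from your setup), a weighted upstream could be written something like this. It assumes the fragment is included inside the `http` context, for example from a file under `conf.d/`:

```nginx
# Hypothetical pool of two web servers behind the load balancer.
upstream backend {
    server 10.0.0.11 weight=3;   # receives roughly 3 of every 4 requests
    server 10.0.0.12 weight=1;   # receives roughly 1 of every 4 requests
}

server {
    listen 80;

    location / {
        # Hand every request to the pool defined above.
        proxy_pass http://backend;
    }
}
```

If you leave the weights out, each server defaults to `weight=1` and requests are spread evenly in round-robin order.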

As for how much load the load balancer can handle, that depends on how much you ask of it. The rules in nginx can get quite complex and lengthy. There may even be scenarios where you have to write ugly `if` statements, or where you have many customized rules for certain IPs. Everything the configuration has to evaluate increases the work done per request.
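
To give an idea of what a per-IP rule can look like, here is a minimal sketch that sends one client range to a different pool. Everything named here is an assumption for illustration: the 203.0.113.0/24 range, the pool names, and the backend addresses. It is meant to sit inside the `http` context:

```nginx
# Flag clients from a hypothetical special range.
geo $special_client {
    default          0;
    203.0.113.0/24   1;
}

upstream main_pool {
    server 10.0.0.11;
    server 10.0.0.12;
}

upstream special_pool {
    server 10.0.0.21;
}

# Translate the flag into the name of the pool to use.
map $special_client $pool {
    0 main_pool;
    1 special_pool;
}

server {
    listen 80;

    location / {
        # The pool name is looked up among the upstream groups per request.
        proxy_pass http://$pool;
    }
}
```

A `geo` plus `map` pair like this is often used in place of chained `if` blocks, but it is still an extra lookup on every request, and rules like it tend to pile up.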

At the very simplest of configurations, though, 100,000 requests/day is a minuscule amount for most servers to handle: spread evenly, it averages a little over one request per second. Load balancing alone doesn't do a whole lot of work. So it's entirely possible the smallest instance can easily handle 100k requests (assuming no bandwidth bottleneck), but I'm not familiar enough with DigitalOcean's packages to say with certainty.

So if you feel that running the load balancer alone on a server is a waste of its resources, you could certainly put more work onto it, like Redis. But that's entirely up to you.

You could even deploy one or a few bigger instances (or a dedicated server) and run all of these logical servers on fewer physical machines. It would probably save money, since it sounds like that's viable (though I don't know your application and could be wrong).