I have the following situation: There is a set of machines in the network (a NAS and other "servers"), plus one additional machine for backup purposes. The backup machine collects the data from all 4 of these machines on a regular basis using `rsync` and creates incremental backups. The backups are pull-type, and all scripts are run with `ionice -c idle nice -19`.

To monitor the stability of the whole system, munin is installed on all Linux machines. Munin checks various system variables and states at 10-minute intervals and sends mail in case of problems or warnings.

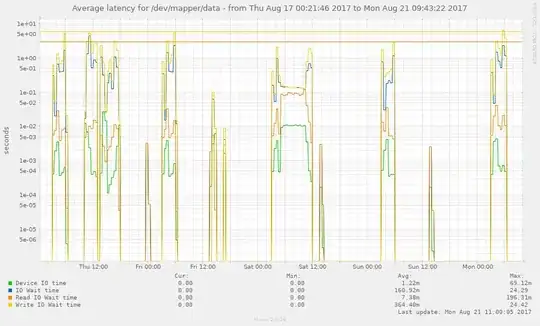

Each night at the end of a backup (especially after a long running backup of the largest machine) munin complains about high disk latencies.

I have already raised the warning thresholds, but the IO wait at the end of such a backup is still 10 seconds or more. This seems quite high to me.

I wrote the backup script myself. I needed an approach similar to rsnapshot but with minor modifications, so I created my own (with much less functionality). It rsyncs the remote machines to a temporary folder next to the other backups and then rotates/removes the old backups accordingly. According to my research so far, the problem happens either while writing the new backup (mainly hardlinking) or during the rotation/removal of the old backups. I cannot tell exactly where the problem is, as the granularity of munin is only 10 minutes.
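A rough sketch of the approach described above, not my actual script. The folder names (`daily.N`, `tmp`), the function names and the retention count are made up for illustration, and I am assuming `rsync --link-dest` as the hardlinking mechanism:

```shell
#!/bin/sh
# Hypothetical layout: $root/daily.0 (newest) ... $root/daily.N (oldest),
# plus $root/tmp for the backup in progress. $root should be an absolute path.

# Pull one host into the temporary folder. Files unchanged since the
# previous snapshot become hard links into it via --link-dest.
pull_snapshot() {
    src=$1
    root=$2
    ionice -c 3 nice -n 19 \
        rsync -a --delete --link-dest="$root/daily.0" "$src" "$root/tmp/"
}

# Rotate: remove the oldest snapshot, shift the others up by one,
# then promote the temporary folder to daily.0.
rotate_snapshots() {
    root=$1
    keep=$2
    [ -n "$root" ] || return 1
    oldest=$((keep - 1))
    rm -rf "$root/daily.$oldest"
    i=$oldest
    while [ "$i" -gt 0 ]; do
        prev=$((i - 1))
        if [ -d "$root/daily.$prev" ]; then
            mv "$root/daily.$prev" "$root/daily.$i"
        fi
        i=$prev
    done
    mv "$root/tmp" "$root/daily.0"
}
```

In such a layout, the hardlinking happens inside `pull_snapshot` and the removal of one complete tree happens inside `rotate_snapshots`, so timing the two steps separately might already show which phase causes the latency.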

The destination of the backups sits on a chain of abstraction layers: The physical partitions are combined into a large RAID5 array (mdadm). The md device is used as an LVM PV. Inside the VG is a large logical volume (besides other unencrypted ones) which is encrypted using LUKS, and inside that a second LVM resides, which allows storage to be assigned to different partitions.
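To show such a chain on a concrete machine, a small sketch that walks the `slaves` links under `/sys/class/block` could look like this. The function name and the device name `dm-5` are placeholders, and `lsblk` gives a similar overview without any scripting:

```shell
#!/bin/sh
# Print a block device and, indented below it, the devices it is built on.
# The function body is a subshell so that recursion does not clobber variables.
print_stack() (
    sys=$1      # sysfs directory, normally /sys/class/block
    dev=$2      # device name, e.g. dm-5 (placeholder)
    indent=$3
    printf '%s%s\n' "$indent" "$dev"
    for s in "$sys/$dev"/slaves/*; do
        [ -e "$s" ] || continue    # no slaves: this is the bottom layer
        print_stack "$sys" "${s##*/}" "$indent  "
    done
)

# Example call (commented out, device name is hypothetical):
# print_stack /sys/class/block dm-5 ""
```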

Searching the net mainly turned up issues with the network connection and latency introduced at that layer. Although my backup runs over the network as well, the issue here is the local performance on the backup server.

What I did so far:

- Reduced the dirty pages ratio so that data is written to disk earlier, to avoid lags.

- Because most of the data is constant between runs, a `--bwlimit` will not help, as the hard links are created locally on the receiver. Correct?

- I wondered whether misaligned partitions or mismatched RAID chunk sizes might lead to this sort of problem, but I do not know how to verify that.

- The whole script is run from `cron`. I added the `ionice`/`nice`, but there was no big difference.

- I installed `atop` on the machine to look into the other processes. I do not see anything abnormal there (except for 100% iowait CPU most of the time during the end phases of the backups).
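Regarding the alignment point, one partial check might be to compare the start of each partition with the RAID chunk size. The sketch below assumes the partition start is read from sysfs in 512-byte sectors and the chunk size in bytes; `sda1` and `md0` are placeholders. It does not cover the further offsets added by the md superblock, the LVM PE start or the LUKS header, so it can only rule out one source of misalignment:

```shell
#!/bin/sh
# Succeed if a partition start (in 512-byte sectors) is a multiple of
# the RAID chunk size (in bytes).
offset_is_aligned() {
    start_sectors=$1
    chunk_bytes=$2
    [ $(( start_sectors * 512 % chunk_bytes )) -eq 0 ]
}

# Example use on a real system (commented out, device names are placeholders):
# start=$(cat /sys/class/block/sda1/start)
# chunk=$(cat /sys/block/md0/md/chunk_size)
# offset_is_aligned "$start" "$chunk" && echo aligned || echo misaligned
```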

Now I would like to ask a few questions:

- Can anyone tell me what the problem might be?

- Could it only be a problem of the measurement? There is no other load on the backup machine. Could it be that, because no other processes are running, the `rsync` process monopolizes the disk I/O? If there were another process, it would be served first, but as there is none, the disk is used at a high rate and the write latency for this `rsync` process is very high (which would be OK).

- I have no idea where else to look for problems. Can you give me advice on how to track down the problem further?
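One idea I could try to get around munin's 10-minute granularity is to sample `/proc/diskstats` once per second during the backup. The sketch below assumes the usual field layout (field 8 is writes completed, field 11 is milliseconds spent writing); the function names are made up, and some stacked devices may report no timing at all, so it may need to be pointed at the underlying disks. `iostat -x 1` from sysstat reports comparable figures.

```shell
#!/bin/sh
# Print the average write latency (ms per completed write) between two
# samples of the same device line from /proc/diskstats.
write_await_ms() {
    printf '%s\n%s\n' "$1" "$2" | awk '
        NR == 1 { w0 = $8; t0 = $11 }
        NR == 2 {
            dw = $8 - w0       # writes completed in the interval
            dt = $11 - t0      # milliseconds spent writing in the interval
            if (dw > 0) printf "%.1f\n", dt / dw
            else print "0.0"
        }'
}

# Sample one device once per second and print a time stamp plus latency.
watch_device() {
    dev=$1
    prev=$(awk -v d="$dev" '$3 == d' /proc/diskstats)
    while sleep 1; do
        cur=$(awk -v d="$dev" '$3 == d' /proc/diskstats)
        printf '%s %s\n' "$(date +%T)" "$(write_await_ms "$prev" "$cur")"
        prev=$cur
    done
}

# Example call (commented out, device name is a placeholder):
# watch_device sda >> /tmp/backup-latency.log
```

Logging this alongside time stamps from the backup script should show whether the latency peaks during the hardlinking or during the removal of old backups.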

To be clear: I am well aware that taking a backup will put quite some load on the system (especially on the disks) when it comes to writing the files and creating the links.

If you need further information please tell me what you need.