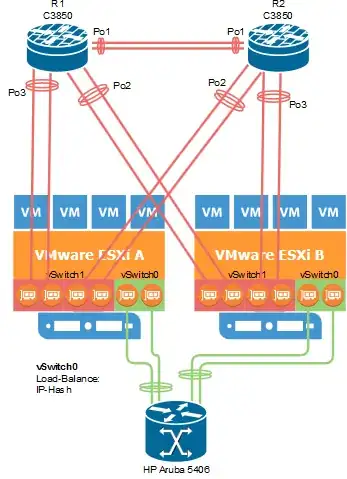

My ESXi hosts are connected to two switches as described in figure 1.

When I set the load-balancing method of the trunks to "IP-Hash", the virtual hosts flap between the port-channel groups:

21-07-2017 09:00:45 Warning (4) SW_MATM-4-MACFLAP_NOTIF Host 0050.5688.1141 in vlan 60 is flapping between port Po1 and port Po3

If the trunks are configured to load-balance by source MAC, the virtual host no longer flaps, but then I lose the benefits of the IP-Hash load-balancing method. Does anyone know how large the performance loss caused by the MAC flapping is?
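To make the difference between the two policies concrete, here is a rough Python sketch of how I understand the uplink selection. It is a simplified model, not VMware's actual implementation: the XOR-and-modulo hash, the VM's IP address, and the destination addresses are all assumptions for illustration. Only the MAC address and the names Po1/Po3 come from the log line above.

```python
import ipaddress

# Port-channel names from the switch log; treating them as the two
# possible paths for the VM's traffic is an assumption of this sketch.
UPLINKS = ["Po1", "Po3"]


def ip_hash_uplink(src_ip, dst_ip, uplinks=UPLINKS):
    """Simplified IP-hash model: XOR the two addresses, modulo the uplink count."""
    src = int(ipaddress.ip_address(src_ip))
    dst = int(ipaddress.ip_address(dst_ip))
    return uplinks[(src ^ dst) % len(uplinks)]


def src_mac_uplink(mac, uplinks=UPLINKS):
    """Simplified source-MAC model: last byte of the MAC, modulo the uplink count."""
    digits = mac.replace(".", "").replace(":", "")
    return uplinks[int(digits[-2:], 16) % len(uplinks)]


vm_mac = "0050.5688.1141"   # MAC from the MACFLAP message
vm_ip = "10.0.60.10"        # hypothetical address of that VM

# With IP hash, the same VM (same source MAC) can leave on either uplink,
# depending on which destination it is talking to.
for dst in ("10.0.60.20", "10.0.60.21"):   # hypothetical destinations
    print(vm_ip, "->", dst, "uses", ip_hash_uplink(vm_ip, dst))

# With source-MAC hashing, the VM is pinned to a single uplink.
print(vm_mac, "always uses", src_mac_uplink(vm_mac))
```

In this model the source MAC shows up on both paths under IP hash, which would match the flapping the switch reports, while the source-MAC policy always yields the same path for a given VM.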

Backup question: Is there a "supported" way to connect two ESXi hosts (VMware ESXi 6.5) to two switches without a VDS?