I know that I made some stupid moves to get into this situation, please don't remind me, and don't ask why :-/

I have this Synology DS1515+, with 2x6TB drives in SHR, which means MD RAID1, with LVM on top.

After starting a RAID1 to RAID5 conversion, aborting it and fiddling around with the disks, my ext4 file system is unmountable.

Could it be that the system has simply been "confused" by the many reboots and the removal of disks, and now treats the entire disk space as a RAID5 volume, even though the conversion from RAID1 to RAID5 was only about 10% complete? If so, do you think I have a chance of fixing the file system if I add a third disk and let the RAID array rebuild? Or will it just rebuild to a logical volume with exactly the same data as now, i.e. with a damaged file system on it?

I'm a little curious about how the actual conversion process works, since MD and/or LVM must know which parts of the block devices should be treated as RAID5 and which as RAID1 until the entire space has been converted to RAID5. Does anyone know more about this?
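As far as I understand it (this is an assumption based on how the stock Linux md driver behaves, not something I have confirmed for Synology's kernel), md first relabels the 2-disk RAID1 as a 2-disk RAID5, where the parity chunk is identical to the data chunk, and then reshapes that to 3 disks while recording a reshape position in the superblock. Addresses below that position use the new 3-disk geometry, and everything above it still uses the old 2-disk geometry. A minimal Python sketch of that idea, with hypothetical function names and the reshape position expressed in chunks for simplicity:

```python
def raid5_left_symmetric(chunk, n_disks):
    """Map a logical data chunk to (stripe, data_disk, parity_disk)
    for the left-symmetric layout ("algorithm 2" in the kernel log)."""
    data_disks = n_disks - 1
    stripe, d = divmod(chunk, data_disks)
    # Parity starts on the last disk and moves one disk to the left per stripe.
    parity_disk = data_disks - (stripe % n_disks)
    # Data chunks start right after the parity disk and wrap around.
    data_disk = (parity_disk + 1 + d) % n_disks
    return stripe, data_disk, parity_disk


def locate(chunk, reshape_pos, old_disks=2, new_disks=3):
    """Choose the geometry by which side of the reshape position the chunk is on.
    Returns (n_disks_used, stripe, data_disk, parity_disk)."""
    n = new_disks if chunk < reshape_pos else old_disks
    return (n,) + raid5_left_symmetric(chunk, n)


if __name__ == "__main__":
    # reshape_pos=100 is a made-up value, purely for illustration.
    for chunk in (0, 1, 2, 3, 1000):
        print(chunk, locate(chunk, reshape_pos=100))
```

If this picture is right, assembling everything as a plain 3-disk RAID5 would only give correct data for the part that was already reshaped, and reading the rest with the wrong geometry would look like heavy file system corruption.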

Thanks in advance for any help :-)

Here is what I did. (My rescue attempts so far, and log entries are listed below)

Hot-plugged a new 6 TB disk into the NAS.

Told Synology's UI to add the disk to my existing volume and grow it to 12TB (making it into a 3x6TB RAID5)

Shut down the NAS (shutdown -P now) a couple of hours into the growing process, and removed the new drive. The NAS booted fine but reported that my volume was degraded. It still reported a 6 TB file system and everything was still accessible.

Hot-plugged disk 3 again, wiped it and made another single disk volume on it.

Shut down the NAS, removed disk 2 (this was a mistake!) and powered it on. It started beeping and told me that my volume was crashed.

Shut the NAS down again and re-inserted the missing disk2. But the Synology still reported the volume as crashed, and offered no repair options.

So, all my data is now unavailable!

I started investigating the issue. It seems like MD is assembling the array as it should:

State : clean, degraded

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 64K

Name : DiskStation:2 (local to host DiskStation)

UUID : 58290cba:75757ee2:86fe074c:ada2e6d2

Events : 31429

Number Major Minor RaidDevice State

0 8 5 0 active sync /dev/sda5

1 8 21 1 active sync /dev/sdb5

2 0 0 2 removed

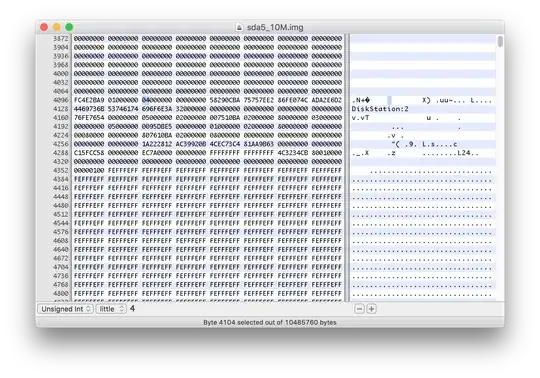

And the metadata on the two original disks also looks fine:

Device Role : Active device 0

Array State : AA. ('A' == active, '.' == missing)

Device Role : Active device 1

Array State : AA. ('A' == active, '.' == missing)

LVM also recognizes the RAID5 volume and exposes its device:

--- Logical volume ---

LV Path /dev/vg1000/lv

LV Name lv

VG Name vg1000

But the file system on /dev/vg1000/lv seems to be damaged when I try to mount it read-only:

mount: wrong fs type, bad option, bad superblock on /dev/vg1000/lv, missing codepage or helper program, or other error (for several filesystems (e.g. nfs, cifs) you might need a /sbin/mount.<type> helper program)

In some cases useful info is found in syslog - try dmesg | tail or so.

So, here I am with a broken file system, which I believe is not possible to repair (see a list of my attempts below).

Here are the steps that I have tried so far:

Cloned /dev/vg1000/lv to a partition on an empty hard drive and ran e2fsck. I had this process running for a week before I interrupted it. It found millions of faulty inodes, multiply-claimed blocks etc., and with that number of FS errors I believe that it will not bring back any useful data, even if it completes some day.

Moved the two hard drives with data into a USB dock, connected it to an Ubuntu virtual machine, and made overlay devices to catch all writes (using dmsetup)
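For reference, the overlays were device-mapper snapshots, so that every write lands in a copy-on-write device instead of on the real partition. A rough Python sketch of how such a table line and the matching dmsetup command can be put together; the helper names, the loop device and the sector count are made up for illustration (the real sector count would come from something like blockdev --getsz):

```python
import shlex


def snapshot_table(origin, cow, sectors, chunk_sectors=8, persistent=True):
    """Build a device-mapper 'snapshot' table line:
    <start> <length> snapshot <origin> <COW device> <P|N> <chunksize>"""
    flag = "P" if persistent else "N"
    return f"0 {sectors} snapshot {origin} {cow} {flag} {chunk_sectors}"


def dmsetup_create(name, table):
    """Return the shell command that would create the overlay device."""
    return f"dmsetup create {shlex.quote(name)} --table {shlex.quote(table)}"


if __name__ == "__main__":
    # /dev/loop0 and 1000 sectors are placeholder values, not my real setup.
    table = snapshot_table("/dev/sda5", "/dev/loop0", sectors=1000)
    print(dmsetup_create("sda5-overlay", table))
```

The sketch only prints the command; all further experiments are then pointed at /dev/mapper/sda5-overlay rather than at /dev/sda5 itself.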

First, I tried to re-create the RAID array. I started by working out the create command with the same parameters that mdadm -E had already given me, and then I tried switching the device order around to see if the results differed (e.g. sda, missing, sdb; sda, sdb, missing; missing, sdb, sda). 4 out of 6 combinations made LVM detect the volume group, but the file system was still broken.
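To make the six combinations explicit, here is a small Python sketch that enumerates the device orders and prints a candidate create command for each. The chunk size and layout are the ones from the mdadm output above; the device paths and the /dev/md2 name are assumptions (with overlays they would be the /dev/mapper devices instead), and other values reported by mdadm -E, such as the metadata version and data offset, would also have to match. The commands are only printed, not executed:

```python
from itertools import permutations


def candidate_orders(members=("/dev/sda5", "/dev/sdb5", "missing")):
    """All possible slot orders for two real members plus one missing slot."""
    return list(permutations(members))


def create_command(order, md="/dev/md2", chunk_kib=64, layout="left-symmetric"):
    """Format an mdadm re-create command for one device order.
    --assume-clean stops mdadm from starting a resync over the data."""
    return (
        f"mdadm --create {md} --assume-clean --level=5 "
        f"--raid-devices={len(order)} --chunk={chunk_kib} "
        f"--layout={layout} {' '.join(order)}"
    )


if __name__ == "__main__":
    for order in candidate_orders():
        print(create_command(order))
```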

Used R-Studio to assemble the array and search for file systems

This actually gave a few results. It was able to scan and find an EXT4 file system on the RAID volume that I assembled, and I could browse the files, but only a subset (about 10) of my actual files were presented in the file viewer. I tried switching the device order around, and while 4 of the combinations made R-Studio detect an ext4 file system (just like above), only the original order (sda, sdb, missing) let R-Studio discover any files from the root of the drive.

Tried mounting with -o sb=XXXXX, pointing at an alternative superblock

This gave me the same errors as not specifying the superblock position.
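In case the positions I used were off, this is how I understand the backup superblock locations to be derived. The Python sketch below assumes the sparse_super feature, 4 KiB blocks and 32768 blocks per group (common defaults, not verified for this volume), and the function names are my own. Note that mount's sb= option counts in 1 KiB units, so the file system block number has to be multiplied by 4 on a 4 KiB file system:

```python
def is_power_of(n, base):
    """True if n is base**k for some k >= 0."""
    while n % base == 0:
        n //= base
    return n == 1


def backup_groups(n_groups):
    """Block groups holding a backup superblock with sparse_super:
    group 1 and every power of 3, 5 and 7 (group 0 holds the primary)."""
    return [g for g in range(1, n_groups)
            if g == 1 or any(is_power_of(g, b) for b in (3, 5, 7))]


def backup_superblocks(n_groups, blocks_per_group=32768, block_size=4096):
    """Return (fs_block, mount_sb_value) pairs for each backup superblock."""
    first_data_block = 1 if block_size == 1024 else 0
    result = []
    for g in backup_groups(n_groups):
        fs_block = g * blocks_per_group + first_data_block
        # mount -o sb= expects the position in 1 KiB units.
        result.append((fs_block, fs_block * block_size // 1024))
    return result


if __name__ == "__main__":
    for fs_block, sb in backup_superblocks(50):
        print(f"fs block {fs_block}: mount -o ro,sb={sb}")
```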

Tried debugfs

This gave me IO errors when I typed "ls"

Here are the log messages for the operations described above, that caused the problems.

Shutting down the system, which was running as a degraded RAID5, with the file system still working.

2017-02-25T18:13:27+01:00 DiskStation umount: kill the process "synoreport" [pid = 15855] using /volume1/@appstore/StorageAnalyzer/usr/syno/synoreport/synoreport

2017-02-25T18:13:28+01:00 DiskStation umount: can't umount /volume1: Device or resource busy

2017-02-25T18:13:28+01:00 DiskStation umount: can't umount /volume1: Device or resource busy

2017-02-25T18:13:28+01:00 DiskStation umount: SYSTEM: Last message 'can't umount /volume' repeated 1 times, suppressed by syslog-ng on DiskStation

2017-02-25T18:13:28+01:00 DiskStation syno_poweroff_task: lvm_poweroff.c:49 Failed to /bin/umount -f -k /volume1

2017-02-25T18:13:29+01:00 DiskStation syno_poweroff_task: lvm_poweroff.c:58 Failed to /sbin/vgchange -an

2017-02-25T18:13:29+01:00 DiskStation syno_poweroff_task: raid_stop.c:28 Failed to mdadm stop '/dev/md2'

2017-02-25T18:13:29+01:00 DiskStation syno_poweroff_task: syno_poweroff_task.c:331 Failed to stop RAID [/dev/md2]

Note the "Failed to stop RAID" message. Is that a possible cause of the problems?

First boot after removing disk2 (sdb)

2017-02-25T18:15:27+01:00 DiskStation kernel: [ 10.467975] set group disks wakeup number to 5, spinup time deno 1

2017-02-25T18:15:27+01:00 DiskStation kernel: [ 10.500561] synobios: unload

2017-02-25T18:15:27+01:00 DiskStation kernel: [ 10.572388] md: invalid raid superblock magic on sda5

2017-02-25T18:15:27+01:00 DiskStation kernel: [ 10.578043] md: sda5 does not have a valid v0.90 superblock, not importing!

2017-02-25T18:15:27+01:00 DiskStation kernel: [ 10.627381] md: invalid raid superblock magic on sdc5

2017-02-25T18:15:27+01:00 DiskStation kernel: [ 10.633034] md: sdc5 does not have a valid v0.90 superblock, not importing!

2017-02-25T18:15:27+01:00 DiskStation kernel: [ 10.663832] md: sda2 has different UUID to sda1

2017-02-25T18:15:27+01:00 DiskStation kernel: [ 10.672513] md: sdc2 has different UUID to sda1

2017-02-25T18:15:27+01:00 DiskStation kernel: [ 10.784571] Got empty serial number. Generate serial number from product.

2017-02-25T18:15:41+01:00 DiskStation spacetool.shared: raid_allow_rmw_check.c:48 fopen failed: /usr/syno/etc/.rmw.md3

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.339243] md/raid:md2: not enough operational devices (2/3 failed)

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.346371] md/raid:md2: raid level 5 active with 1 out of 3 devices, algorithm 2

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.355295] md: md2: set sda5 to auto_remap [1]

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.355299] md: reshape of RAID array md2

2017-02-25T18:15:41+01:00 DiskStation spacetool.shared: spacetool.c:1223 Try to force assemble RAID [/dev/md2]. [0x2000 file_get_key_value.c:81]

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.414839] md: md2: reshape done.

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.433218] md: md2: set sda5 to auto_remap [0]

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.494964] md: md2: set sda5 to auto_remap [0]

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.549962] md/raid:md2: not enough operational devices (2/3 failed)

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.557093] md/raid:md2: raid level 5 active with 1 out of 3 devices, algorithm 2

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.566069] md: md2: set sda5 to auto_remap [1]

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.566073] md: reshape of RAID array md2

2017-02-25T18:15:41+01:00 DiskStation spacetool.shared: raid_allow_rmw_check.c:48 fopen failed: /usr/syno/etc/.rmw.md2

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.633774] md: md2: reshape done.

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.645025] md: md2: change number of threads from 0 to 1

2017-02-25T18:15:41+01:00 DiskStation kernel: [ 31.645033] md: md2: set sda5 to auto_remap [0]

2017-02-25T18:15:41+01:00 DiskStation spacetool.shared: spacetool.c:3023 [Info] Old vg path: [/dev/vg1000], New vg path: [/dev/vg1000], UUID: [Fund9t-vUVR-3yln-QYVk-8gtv-z8Wo-zz1bnF]

2017-02-25T18:15:41+01:00 DiskStation spacetool.shared: spacetool.c:3023 [Info] Old vg path: [/dev/vg1001], New vg path: [/dev/vg1001], UUID: [FHbUVK-5Rxk-k6y9-4PId-cSMf-ztmU-DfXYoL]

2017-02-25T18:22:50+01:00 DiskStation umount: can't umount /volume2: Invalid argument

2017-02-25T18:22:50+01:00 DiskStation syno_poweroff_task: lvm_poweroff.c:49 Failed to /bin/umount -f -k /volume2

2017-02-25T18:22:50+01:00 DiskStation kernel: [ 460.374192] md: md2: set sda5 to auto_remap [0]

2017-02-25T18:22:50+01:00 DiskStation kernel: [ 460.404747] md: md3: set sdc5 to auto_remap [0]

2017-02-25T18:28:01+01:00 DiskStation umount: can't umount /initrd: Invalid argument

Booting again, with the disk2 (sdb) present again

2017-02-25T18:28:17+01:00 DiskStation spacetool.shared: raid_allow_rmw_check.c:48 fopen failed: /usr/syno/etc/.rmw.md3

2017-02-25T18:28:17+01:00 DiskStation kernel: [ 32.442352] md: kicking non-fresh sdb5 from array!

2017-02-25T18:28:17+01:00 DiskStation kernel: [ 32.478415] md/raid:md2: not enough operational devices (2/3 failed)

2017-02-25T18:28:17+01:00 DiskStation kernel: [ 32.485547] md/raid:md2: raid level 5 active with 1 out of 3 devices, algorithm 2

2017-02-25T18:28:17+01:00 DiskStation spacetool.shared: spacetool.c:1223 Try to force assemble RAID [/dev/md2]. [0x2000 file_get_key_value.c:81]

2017-02-25T18:28:17+01:00 DiskStation kernel: [ 32.515567] md: md2: set sda5 to auto_remap [0]

2017-02-25T18:28:18+01:00 DiskStation kernel: [ 32.602256] md/raid:md2: raid level 5 active with 2 out of 3 devices, algorithm 2

2017-02-25T18:28:18+01:00 DiskStation spacetool.shared: raid_allow_rmw_check.c:48 fopen failed: /usr/syno/etc/.rmw.md2

2017-02-25T18:28:18+01:00 DiskStation kernel: [ 32.654279] md: md2: change number of threads from 0 to 1

2017-02-25T18:28:18+01:00 DiskStation spacetool.shared: spacetool.c:3023 [Info] Old vg path: [/dev/vg1000], New vg path: [/dev/vg1000], UUID: [Fund9t-vUVR-3yln-QYVk-8gtv-z8Wo-zz1bnF]

2017-02-25T18:28:18+01:00 DiskStation spacetool.shared: spacetool.c:3023 [Info] Old vg path: [/dev/vg1001], New vg path: [/dev/vg1001], UUID: [FHbUVK-5Rxk-k6y9-4PId-cSMf-ztmU-DfXYoL]

2017-02-25T18:28:18+01:00 DiskStation spacetool.shared: spacetool.c:3030 [Info] Activate all VG

2017-02-25T18:28:18+01:00 DiskStation synovspace: virtual_space_conf_check.c:78 [INFO] "PASS" checking configuration of virtual space [FCACHE], app: [1]

2017-02-25T18:28:18+01:00 DiskStation synovspace: virtual_space_conf_check.c:74 [INFO] No implementation, skip checking configuration of virtual space [HA]

2017-02-25T18:28:18+01:00 DiskStation synovspace: virtual_space_conf_check.c:74 [INFO] No implementation, skip checking configuration of virtual space [SNAPSHOT_ORG]

2017-02-25T18:28:18+01:00 DiskStation synovspace: vspace_wrapper_load_all.c:76 [INFO] No virtual layer above space: [/volume2] / [/dev/vg1001/lv]

2017-02-25T18:28:18+01:00 DiskStation synovspace: vspace_wrapper_load_all.c:76 [INFO] No virtual layer above space: [/volume1] / [/dev/vg1000/lv]

2017-02-25T18:28:19+01:00 DiskStation kernel: [ 33.792601] BTRFS: has skinny extents

2017-02-25T18:28:19+01:00 DiskStation kernel: [ 34.009184] JBD2: no valid journal superblock found

2017-02-25T18:28:19+01:00 DiskStation kernel: [ 34.014673] EXT4-fs (dm-0): error loading journal

mount: wrong fs type, bad option, bad superblock on /dev/vg1000/lv,

missing codepage or helper program, or other error

In some cases useful info is found in syslog - try

dmesg | tail or so.

quotacheck: Mountpoint (or device) /volume1 not found or has no quota enabled.

quotacheck: Cannot find filesystem to check or filesystem not mounted with quota option.

quotaon: Mountpoint (or device) /volume1 not found or has no quota enabled.

2017-02-25T18:28:19+01:00 DiskStation synocheckhotspare: synocheckhotspare.c:149 [INFO] No hotspare config, skip hotspare config check. [0x2000 virtual_space_layer_get.c:98]

2017-02-25T18:28:19+01:00 DiskStation synopkgctl: pkgtool.cpp:3035 package AudioStation is not installed or not operable

Note how it first reports that only 1 of 3 devices is present, but afterwards force-assembles the array, so the RAID array is assembled, and then tries to mount it but gets the EXT4 mount errors.

Tried to reboot after this experience, did not help

2017-02-25T18:36:45+01:00 DiskStation spacetool.shared: raid_allow_rmw_check.c:48 fopen failed: /usr/syno/etc/.rmw.md3

2017-02-25T18:36:45+01:00 DiskStation kernel: [ 29.579136] md/raid:md2: raid level 5 active with 2 out of 3 devices, algorithm 2

2017-02-25T18:36:45+01:00 DiskStation spacetool.shared: raid_allow_rmw_check.c:48 fopen failed: /usr/syno/etc/.rmw.md2

2017-02-25T18:36:45+01:00 DiskStation kernel: [ 29.629837] md: md2: change number of threads from 0 to 1

2017-02-25T18:36:46+01:00 DiskStation spacetool.shared: spacetool.c:3023 [Info] Old vg path: [/dev/vg1000], New vg path: [/dev/vg1000], UUID: [Fund9t-vUVR-3yln-QYVk-8gtv-z8Wo-zz1bnF]

2017-02-25T18:36:46+01:00 DiskStation spacetool.shared: spacetool.c:3023 [Info] Old vg path: [/dev/vg1001], New vg path: [/dev/vg1001], UUID: [FHbUVK-5Rxk-k6y9-4PId-cSMf-ztmU-DfXYoL]

2017-02-25T18:36:46+01:00 DiskStation spacetool.shared: spacetool.c:3030 [Info] Activate all VG

2017-02-25T18:36:46+01:00 DiskStation spacetool.shared: spacetool.c:3041 Activate LVM [/dev/vg1000]

2017-02-25T18:36:46+01:00 DiskStation spacetool.shared: spacetool.c:3041 Activate LVM [/dev/vg1001]

2017-02-25T18:36:46+01:00 DiskStation spacetool.shared: spacetool.c:3084 space: [/dev/vg1000]

2017-02-25T18:36:46+01:00 DiskStation spacetool.shared: spacetool.c:3084 space: [/dev/vg1001]

2017-02-25T18:36:46+01:00 DiskStation spacetool.shared: spacetool.c:3110 space: [/dev/vg1000], ndisk: [2]

2017-02-25T18:36:46+01:00 DiskStation spacetool.shared: spacetool.c:3110 space: [/dev/vg1001], ndisk: [1]

2017-02-25T18:36:46+01:00 DiskStation spacetool.shared: hotspare_repair_config_set.c:36 Failed to hup synostoraged

2017-02-25T18:36:46+01:00 DiskStation synovspace: virtual_space_conf_check.c:78 [INFO] "PASS" checking configuration of virtual space [FCACHE], app: [1]

2017-02-25T18:36:46+01:00 DiskStation synovspace: virtual_space_conf_check.c:74 [INFO] No implementation, skip checking configuration of virtual space [HA]

2017-02-25T18:36:46+01:00 DiskStation synovspace: virtual_space_conf_check.c:74 [INFO] No implementation, skip checking configuration of virtual space [SNAPSHOT_ORG]

2017-02-25T18:36:46+01:00 DiskStation synovspace: vspace_wrapper_load_all.c:76 [INFO] No virtual layer above space: [/volume2] / [/dev/vg1001/lv]

2017-02-25T18:36:46+01:00 DiskStation synovspace: vspace_wrapper_load_all.c:76 [INFO] No virtual layer above space: [/volume1] / [/dev/vg1000/lv]

2017-02-25T18:36:47+01:00 DiskStation kernel: [ 30.799110] BTRFS: has skinny extents

2017-02-25T18:36:47+01:00 DiskStation kernel: [ 30.956115] JBD2: no valid journal superblock found

2017-02-25T18:36:47+01:00 DiskStation kernel: [ 30.961585] EXT4-fs (dm-0): error loading journal

mount: wrong fs type, bad option, bad superblock on /dev/vg1000/lv,

missing codepage or helper program, or other error

In some cases useful info is found in syslog - try

dmesg | tail or so.

quotacheck: Mountpoint (or device) /volume1 not found or has no quota enabled.

quo