Have a look at EC2 User Data. You could set up something that downloads a script from S3 and runs it.

When you launch an instance in Amazon EC2, you have the option of passing user data to the instance that can be used to perform common automated configuration tasks and even run scripts after the instance starts. You can pass two types of user data to Amazon EC2: shell scripts and cloud-init directives. You can also pass this data into the launch wizard as plain text, as a file (this is useful for launching instances via the command line tools), or as base64-encoded text (for API calls).
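For raw API calls, the user data has to be base64-encoded before it goes into the request. The sketch below assumes a Linux machine with the `base64` utility. It writes the script from this answer to a local file (`userdata.sh` and `userdata.b64` are hypothetical names) and produces a single-line base64 string. If you pass the file with the AWS CLI's `--user-data file://userdata.sh` option instead, the CLI does the encoding for you, so this step is only needed when calling the API directly.

```shell
#!/usr/bin/env bash
# Sketch: base64-encode a user data script for a raw EC2 API call.
# "userdata.sh" and "userdata.b64" are hypothetical file names; the
# bucket, folder and target path are the placeholders used in this answer.
set -euo pipefail

# The user data script itself (quoted 'EOF' so nothing is expanded locally).
cat > userdata.sh <<'EOF'
#!/bin/bash
aws s3 cp s3://s3-bucketname/foldername/ /ec2-target-folder --recursive
EOF

# Encode it and strip the line breaks base64 inserts, giving one line
# that can be used as the UserData value in the request.
base64 < userdata.sh | tr -d '\n' > userdata.b64
cat userdata.b64
```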

If you are interested in more complex automation scenarios, consider using AWS CloudFormation and AWS OpsWorks. For more information, see the AWS CloudFormation User Guide and the AWS OpsWorks User Guide.

I've tried this out myself and it was really easy: it took me about 20 minutes, including creating a few instances for testing and writing this up.

Go into IAM and create a new IAM role or, if you already have a role assigned to the EC2 instances, edit that role. Make sure the role allows read-only access to S3 (for example, by attaching the AWS managed policy AmazonS3ReadOnlyAccess); just follow the wizard through. If you like, you can use a custom policy to allow access to a specific bucket only.
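If you go the custom-policy route, note that `aws s3 cp --recursive` has to list the folder as well as read the objects, so the policy needs `s3:ListBucket` on the bucket and `s3:GetObject` on the objects in it. Here is a minimal sketch, assuming the placeholder bucket name `s3-bucketname` used in this answer and a hypothetical role name `my-ec2-s3-role`, that writes such a policy to a file. The `aws iam put-role-policy` call at the end is left commented out so nothing touches your account until you fill in your own names.

```shell
#!/usr/bin/env bash
# Sketch: a custom inline policy limiting the instance role to read-only
# access on one bucket. "s3-bucketname" is the placeholder bucket from
# this answer; replace it with your own.
set -euo pipefail

BUCKET="s3-bucketname"

# Unquoted EOF so ${BUCKET} is substituted into the JSON.
cat > s3-read-one-bucket.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "ListTheBucket",
      "Effect": "Allow",
      "Action": "s3:ListBucket",
      "Resource": "arn:aws:s3:::${BUCKET}"
    },
    {
      "Sid": "ReadObjects",
      "Effect": "Allow",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::${BUCKET}/*"
    }
  ]
}
EOF

# Attach it as an inline policy to the instance role
# ("my-ec2-s3-role" and "s3-read-one-bucket" are hypothetical names):
# aws iam put-role-policy --role-name my-ec2-s3-role \
#   --policy-name s3-read-one-bucket \
#   --policy-document file://s3-read-one-bucket.json
```

ListBucket applies to the bucket ARN and GetObject to the object ARNs (`/*`), which is why the policy uses two statements rather than one.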

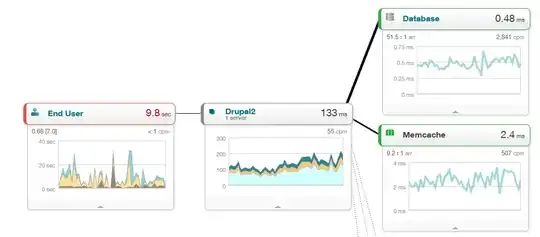

Create a new launch configuration that does two things:

- Specifies this IAM role

- Includes a script in the user data (under Advanced Details). The script below copies all files and subfolders from an S3 folder to a folder on your new instance. The first line must be `#!/bin/bash`; without the `#!`, the user data won't be run as a shell script.

      #!/bin/bash
      aws s3 cp s3://s3-bucketname/foldername/ /ec2-target-folder --recursive
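If you would rather keep the user data in a file (the passage at the top mentions this for the command line tools), here is a sketch of a slightly more defensive version of the same script. It stops at the first error and creates the target folder before copying. It assumes the AMI already has the AWS CLI installed, as Amazon Linux does. The script is written to a hypothetical `userdata.sh` and only syntax-checked with `bash -n` rather than run, since it only makes sense on the instance. The bucket, folder and target path are still the placeholders from above.

```shell
#!/usr/bin/env bash
# Sketch: write a more defensive version of the user data script to a
# file (hypothetical name "userdata.sh"), e.g. for the CLI's
# --user-data file://userdata.sh option. Paths are this answer's placeholders.
set -euo pipefail

cat > userdata.sh <<'EOF'
#!/bin/bash
# Stop at the first failing command instead of carrying on silently.
set -euo pipefail

# Create the target folder in case the AMI doesn't already have it.
mkdir -p /ec2-target-folder

# Copy every file and subfolder under the S3 prefix.
aws s3 cp s3://s3-bucketname/foldername/ /ec2-target-folder --recursive
EOF

# Check the script's syntax locally without executing it.
bash -n userdata.sh
```

On Amazon Linux, output from user data scripts usually ends up in `/var/log/cloud-init-output.log`, which is the first place to look if the files don't appear.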

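You'll need to check file ownership and permissions yourself, as they're different for each setup. Keep in mind that user data scripts run as root, so the copied files will be owned by root unless your script changes that.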