A directory on my FreeBSD 10.2 server has somehow become hopelessly corrupted (wasn't ZFS supposed to prevent that?).

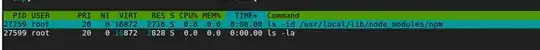

Running ls or any other command against it freezes the current session at the kernel level (even SIGKILL does nothing).

A ZFS scrub finds no problems.

    # zpool status zroot
      pool: zroot
     state: ONLINE
      scan: scrub repaired 0 in 0h17m with 0 errors on Sun Dec 18 18:25:04 2016
    config:

            NAME        STATE     READ WRITE CKSUM
            zroot       ONLINE       0     0     0
              gpt/zfs0  ONLINE       0     0     0

    errors: No known data errors

smartctl says everything is OK with the disk.

    SMART Attributes Data Structure revision number: 16
    Vendor Specific SMART Attributes with Thresholds:
    ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
      1 Raw_Read_Error_Rate     0x000b   100   100   016    Pre-fail  Always   -           0
      2 Throughput_Performance  0x0005   137   137   054    Pre-fail  Offline  -           89
      3 Spin_Up_Time            0x0007   128   128   024    Pre-fail  Always   -           314 (Average 277)
      4 Start_Stop_Count        0x0012   100   100   000    Old_age   Always   -           78
      5 Reallocated_Sector_Ct   0x0033   100   100   005    Pre-fail  Always   -           0
      7 Seek_Error_Rate         0x000b   100   100   067    Pre-fail  Always   -           0
      8 Seek_Time_Performance   0x0005   142   142   020    Pre-fail  Offline  -           29
      9 Power_On_Hours          0x0012   097   097   000    Old_age   Always   -           24681
     10 Spin_Retry_Count        0x0013   100   100   060    Pre-fail  Always   -           0
     12 Power_Cycle_Count       0x0032   100   100   000    Old_age   Always   -           78
    192 Power-Off_Retract_Count 0x0032   100   100   000    Old_age   Always   -           306
    193 Load_Cycle_Count        0x0012   100   100   000    Old_age   Always   -           306
    194 Temperature_Celsius     0x0002   171   171   000    Old_age   Always   -           35 (Min/Max 20/46)
    196 Reallocated_Event_Count 0x0032   100   100   000    Old_age   Always   -           0
    197 Current_Pending_Sector  0x0022   100   100   000    Old_age   Always   -           0
    198 Offline_Uncorrectable   0x0008   100   100   000    Old_age   Offline  -           0
    199 UDMA_CRC_Error_Count    0x000a   200   200   000    Old_age   Always   -           0

Even zdb finds nothing wrong.

    # zdb -c zroot

    Traversing all blocks to verify metadata checksums and verify nothing leaked ...

    loading space map for vdev 0 of 1, metaslab 44 of 116 ...
    12.2G completed ( 60MB/s) estimated time remaining: 0hr 00min 00sec

            No leaks (block sum matches space maps exactly)

            bp count:          956750
            ganged count:           0
            bp logical:    43512090624      avg:  45479
            bp physical:   11620376064      avg:  12145     compression:   3.74
            bp allocated:  13143715840      avg:  13737     compression:   3.31
            bp deduped:              0    ref>1:      0   deduplication:   1.00
            SPA allocated: 13143715840     used:  1.32%

            additional, non-pointer bps of type 0: 123043
            Dittoed blocks on same vdev: 62618

The directory doesn't hold any important data, so I'd be fine if I could just delete it and get back to a "clean" state.

One solution that comes to mind is to create a new ZFS pool, copy all the healthy data over, and then delete the old pool. But that feels very dangerous. What if the system hangs during the copy and my server goes down?
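To make that idea concrete, here is a rough sketch of the migration I have in mind. It is a dry run by default and only prints the commands. The new pool name, the spare partition, the source path, and the directory name are all placeholders, not my real layout, and it assumes rsync is installed from ports. I have not verified that rsync can skip the bad directory without touching it, so the copy step might hang exactly like ls does.

```shell
#!/bin/sh
# Sketch of the "new pool" migration. Dry run by default: commands are only
# printed. Set DRY_RUN=0 to actually execute them.
# Every value below is a hypothetical placeholder, not taken from my system.
DRY_RUN="${DRY_RUN:-1}"
NEW_POOL="${NEW_POOL:-newpool}"     # hypothetical name for the new pool
NEW_DISK="${NEW_DISK:-gpt/zfs1}"    # hypothetical spare GPT partition
SRC="${SRC:-/usr/home}"             # hypothetical parent of the bad directory
BAD_DIR="${BAD_DIR:-baddir}"        # hypothetical name of the bad directory

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

migrate() {
    # Create the new pool; by default it is mounted at /$NEW_POOL.
    run zpool create "$NEW_POOL" "$NEW_DISK"
    # Copy everything except the corrupted directory. The leading slash
    # anchors the pattern at the top of $SRC; the trailing slash limits
    # the match to directories.
    run rsync -a --exclude="/$BAD_DIR/" "$SRC/" "/$NEW_POOL/"
    # Destroying the old pool is deliberately left out: it would be a
    # manual step, done only after the copy has been verified.
}

migrate
```

With the defaults this only prints the two commands it would run, which is as far as I dare take it on a production machine right now.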

Can you think of a way I can get rid of the corrupted directory without too much disruption?