At first, I used Linux cron to schedule jobs. As the number of jobs and the dependencies between them grew, the crontab became hard to maintain.

For example,

0 4 * * 1-5 run-job-A

10 4 * * 1-5 run-job-B

15 4 * * 1-5 run-job-C

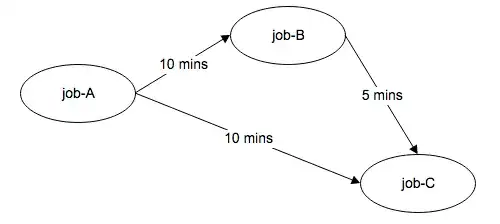

job-B must run after job-A is done, and job-C must run after both job-A and job-B are done. I assume job-A finishes within 10 minutes and job-B within 5 minutes, so I schedule job-B at 4:10 and job-C at 4:15.

Job DAG

As you can see, I work out the DAG's critical path and the processing times manually. This is tedious, and it is easy to get wrong as the number of jobs grows.
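To make the manual calculation concrete, here is a minimal Python sketch of what I currently do by hand. It assumes the durations I guessed above (10 minutes for job-A, 5 minutes for job-B) and a 4:00 start; the names `durations`, `depends_on`, `earliest_starts`, and `to_clock` are made up for this illustration. It uses the standard-library `graphlib` module (Python 3.9+), and it only derives start times; it does not schedule anything.

```python
from graphlib import TopologicalSorter

# Assumed run times in minutes (guesses, not measurements).
durations = {"job-A": 10, "job-B": 5}

# Each job maps to the set of jobs it has to wait for.
depends_on = {
    "job-A": set(),
    "job-B": {"job-A"},
    "job-C": {"job-A", "job-B"},
}


def earliest_starts(durations, depends_on):
    """Return each job's earliest start, in minutes after the first job starts."""
    start = {}
    # static_order() yields every job after all of its prerequisites.
    for job in TopologicalSorter(depends_on).static_order():
        start[job] = max(
            (start[dep] + durations[dep] for dep in depends_on[job]),
            default=0,  # jobs with no prerequisites start immediately
        )
    return start


def to_clock(base_hour, base_minute, offset):
    """Format base time plus an offset in minutes as H:MM."""
    total = base_hour * 60 + base_minute + offset
    return f"{total // 60 % 24}:{total % 60:02d}"


offsets = earliest_starts(durations, depends_on)
for job, offset in offsets.items():
    print(job, to_clock(4, 0, offset))
```

With the assumed durations this reproduces the 4:00, 4:10, and 4:15 start times in my crontab, but it still breaks whenever a job runs longer than I guessed, which is exactly the problem.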

Is there a better way to schedule these jobs? I am looking for a widely used, general-purpose tool that can handle jobs with dependencies like these.