OK, the first thing to understand is why the log file is the size it is.

You say the data file is 500 MB; are you sure? That's a very small data file compared to the log file. If that is true, the log is the size it is *for a reason*.

So you have to figure out why. Have you been loading data into the database? Are any ETL/DTS processes loading data, index rebuilds, or other large transactions running? Have you checked that there are no open (uncommitted) transactions? (`SELECT @@TRANCOUNT` is a start, but bear in mind it only counts open transactions on your own connection.)
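Because `@@TRANCOUNT` only sees your own session, a database-wide check is more useful here. A minimal sketch, assuming the database is the `Company` database used in the `sys.databases` query: `DBCC OPENTRAN` reports the oldest active transaction, and the DMV query lists every open transaction in the database with the amount of log it has generated so far.

```sql
-- Run in the context of the database whose log is growing.
USE [Company];
GO

-- Oldest active transaction in the current database, if there is one.
DBCC OPENTRAN;
GO

-- All open transactions in this database, oldest first,
-- with the session that owns them and the log bytes they have used.
SELECT st.session_id,
       dt.transaction_id,
       dt.database_transaction_begin_time,
       dt.database_transaction_log_bytes_used
FROM sys.dm_tran_database_transactions AS dt
JOIN sys.dm_tran_session_transactions AS st
    ON st.transaction_id = dt.transaction_id
WHERE dt.database_id = DB_ID()
ORDER BY dt.database_transaction_begin_time;
GO
```

A transaction that started hours ago and keeps accumulating `database_transaction_log_bytes_used` is a prime suspect for holding the log.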

You can check what is currently preventing log truncation by looking at the `log_reuse_wait` and `log_reuse_wait_desc` columns of `sys.databases`:

```sql
SELECT [log_reuse_wait], [log_reuse_wait_desc]
FROM [master].[sys].[databases]
WHERE [name] = N'Company';
```

Under the full or bulk-logged recovery model, the inactive part of the log cannot be truncated until all its log records have been captured in a log backup. This is needed to maintain the log chain: a series of log records having an unbroken sequence of log sequence numbers (LSNs). The log is truncated when you back up the transaction log, assuming the following conditions exist:

1. A checkpoint has occurred since the log was last backed up. A checkpoint is essential but not sufficient for truncating the log under the full or bulk-logged recovery model. After a checkpoint, the log remains intact at least until the next transaction log backup.
2. No other factor is preventing log truncation. Generally, with regular backups, log space is regularly freed for future use. However, various factors, such as a long-running transaction, can temporarily prevent log truncation.
3. The BACKUP LOG statement does not specify WITH COPY_ONLY.

Source: https://technet.microsoft.com/en-us/library/ms189085(v=sql.105).aspx
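To see the truncation happen, you can take a log backup and then look at how much of the log is in use. A sketch, assuming the `Company` database is in FULL or BULK_LOGGED recovery and already has a full backup; the backup path is a hypothetical placeholder.

```sql
-- Back up the transaction log (hypothetical path; use your own backup location).
-- Do not add WITH COPY_ONLY: a copy-only log backup does not truncate the log.
BACKUP LOG [Company]
TO DISK = N'X:\Backups\Company_log.trn';
GO

-- Log size and "Log Space Used (%)" for every database on the instance.
-- If nothing else is holding the log, the percentage used should drop
-- after the backup (the file itself stays the same size).
DBCC SQLPERF(LOGSPACE);
GO
```

If the percentage stays high after a successful log backup, `log_reuse_wait_desc` will tell you which of the other factors is still in play.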

If the log file really does need to be that size and you shrink it, it will only grow out again, and that in itself is a costly process. When the database is writing to the log and the file needs to grow, the write has to wait until the growth has finished. Instant file initialization won't save you here: it applies to data files, and log growth is always zero-initialized (SQL Server 2022 relaxes this only for log growths of up to 64 MB). If the log grows in GB-sized chunks, you will have bigger issues to deal with.
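To see how large the log currently is and how it is set to grow, a query along these lines against `sys.database_files` should help. It assumes the `Company` database from the earlier query; `size` and `growth` are stored in 8 KB pages (or as a percentage when `is_percent_growth = 1`), so they are converted to MB here.

```sql
USE [Company];
GO

-- Size and autogrowth setting of each file (ROWS = data, LOG = transaction log).
SELECT [name],
       [type_desc],
       CAST([size] AS bigint) * 8 / 1024 AS size_mb,
       CASE WHEN [is_percent_growth] = 1
            THEN CAST([growth] AS varchar(20)) + ' %'
            ELSE CAST(CAST([growth] AS bigint) * 8 / 1024 AS varchar(20)) + ' MB'
       END AS autogrowth
FROM sys.database_files;
GO
```

A log with a small or percentage-based growth setting will have grown many times to reach its current size, with each growth making writers wait.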

So now you need to look at the activity of the database. If, for instance, you are only running one log backup a day, that may not be enough for the activity of the DB. In my organisation I take log backups every 15 minutes to every hour, and on some particular DBs every 5 minutes. This keeps the log from growing too much (the logs have been presized on some servers); we are also running tight RTO/RPO targets.
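A sketch of two related checks, again assuming the `Company` database: how often log backups have actually run over the last week, from the backup history in `msdb`, and how you might presize the log with a fixed growth increment. The logical file name `Company_log` and the 8 GB / 1 GB figures are hypothetical placeholders; take the real name from `sys.database_files` and size the log from your own workload.

```sql
-- Log backups (type 'L') of the Company database in the last 7 days, newest first.
SELECT [database_name],
       [backup_start_date],
       [backup_finish_date],
       [backup_size] / 1048576.0 AS backup_size_mb
FROM msdb.dbo.backupset
WHERE [database_name] = N'Company'
  AND [type] = 'L'
  AND [backup_start_date] >= DATEADD(DAY, -7, GETDATE())
ORDER BY [backup_start_date] DESC;
GO

-- Presize the log and use a fixed growth increment instead of a percentage.
-- 'Company_log' is a hypothetical logical name; SIZE must be larger than the
-- current size or the statement fails.
ALTER DATABASE [Company]
MODIFY FILE (NAME = N'Company_log', SIZE = 8192MB, FILEGROWTH = 1024MB);
GO
```

If the first query returns one row per day, the log has to hold a full day of activity between backups, which goes a long way towards explaining its size.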

Now, as joeqwerty has stated, unless you have a tight SLA on that box with regard to recovery points, it may not be worth running in FULL or BULK_LOGGED. If all you do is run a full backup daily and the company can accept losing the changes made since that backup, you're wasting your time: don't fight that, work with it.
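Before deciding, it is worth confirming which recovery model the database is actually in. A one-line check, assuming the `Company` database:

```sql
-- FULL, BULK_LOGGED or SIMPLE for the Company database.
SELECT [name], [recovery_model_desc]
FROM [master].[sys].[databases]
WHERE [name] = N'Company';
```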

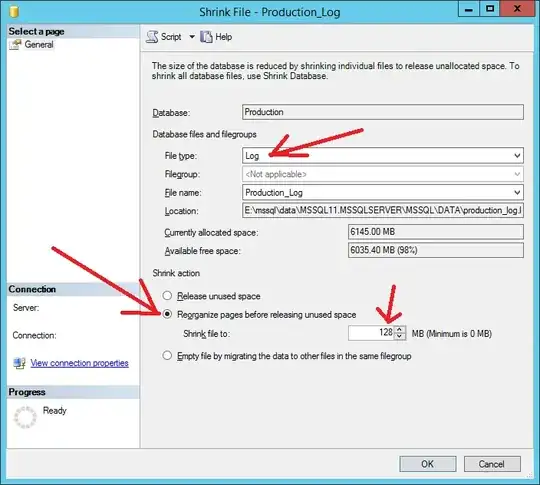

If all you care about is the size of the log, and not point-in-time recovery, back up the log and shrink it, and then switch to SIMPLE.
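The three steps in that order could look like this. It is a sketch only: the `Company` database name comes from the earlier query, while the backup path, the logical log file name `Company_log` and the 1024 MB target size are hypothetical placeholders.

```sql
USE [Company];
GO

-- 1. Back up the log so its inactive portion can be truncated (hypothetical path).
BACKUP LOG [Company]
TO DISK = N'X:\Backups\Company_log.trn';
GO

-- 2. Shrink the log file to a target size in MB. 'Company_log' is a hypothetical
--    logical name; the shrink can only release inactive space at the end of the file.
DBCC SHRINKFILE (N'Company_log', 1024);
GO

-- 3. Switch to SIMPLE. From here on log backups are not possible, so recovery
--    relies on your full (and any differential) backups.
ALTER DATABASE [Company] SET RECOVERY SIMPLE;
GO
```

Pick a target size that covers your largest normal transaction (index rebuilds, ETL loads), otherwise the log will simply grow back, with the costs described above.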

You say not to suggest SIMPLE recovery, but I don't think you fully understand what you are doing with FULL recovery.