I have an Nginx+Postgres server with 8 CPU cores. I noticed that under load, CPU usage only reaches about 60%.

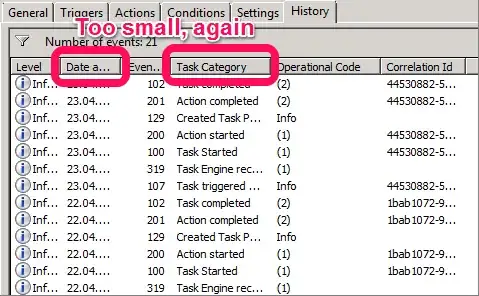

I looked at top and here is a screenshot:

It turns out that only 5 processes are actually running in parallel. What could cause this cap?
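To get the same count without eyeballing top, something like the sketch below could be used. It is only a rough illustration: it assumes the text comes from `ps -e -o stat= -o comm=` (state and command name, no headers), and the helper name `count_running` is made up for this example.

```python
from collections import Counter


def count_running(ps_output: str) -> Counter:
    """Count processes per command name whose state starts with 'R'.

    Expects lines of the form "<STAT> <COMMAND>", as printed by
    `ps -e -o stat= -o comm=` (assumed input format).
    """
    counts = Counter()
    for line in ps_output.splitlines():
        parts = line.split(None, 1)  # split into state and command name
        if len(parts) != 2:
            continue  # skip blank or malformed lines
        stat, comm = parts
        if stat.startswith("R"):  # R = running or runnable
            counts[comm.strip()] += 1
    return counts
```

Sampling this several times under load and looking at the per-command totals should show which program the roughly 5 concurrently running processes belong to.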

I looked at the Postgres configuration and did not notice any setting that would cap it. The values are not really limiting, for example:

max_connections = 100

Then I checked the Nginx configuration. These settings seem relevant, but do not seem to be the cap in this case:

worker_processes auto;

worker_connections 768;

In the PHP (PHP-FPM) settings, this one looks suspicious:

pm.max_children

pm is set to dynamic. Could this be the limiting factor? I can't blindly try different values and restart this server to experiment. If so, how should I choose the value: equal to the core count, or higher?
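One check that needs no restart is to look for the warning PHP-FPM logs when a pool runs out of workers ("server reached pm.max_children setting (N), consider raising it"). A minimal sketch of that check follows. The function name `pools_hitting_limit` is hypothetical, and the log location is not assumed here because it depends on the install; the function just takes the log text.

```python
import re

# Matches the PHP-FPM warning emitted when a pool has no free workers left,
# e.g. "WARNING: [pool www] server reached pm.max_children setting (5), ..."
LIMIT_RE = re.compile(
    r"\[pool (?P<pool>[^\]]+)\] server reached pm\.max_children setting \((?P<limit>\d+)\)"
)


def pools_hitting_limit(log_text: str) -> dict:
    """Return {pool name: configured limit} for pools that hit pm.max_children."""
    hits = {}
    for match in LIMIT_RE.finditer(log_text):
        hits[match.group("pool")] = int(match.group("limit"))
    return hits
```

If this returns a pool with a limit close to the number of busy processes seen in top, that would point to pm.max_children as the cap. An empty result would suggest looking elsewhere.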