Yesterday we had some server (or possibly network) issues that escalated into real problems with SSH connectivity and DNS resolution.

Apart from the above, nothing seemed out of the ordinary while this was going on: all servers responded to ping and, as far as we could tell, server load was normal. After a couple of hours the problem resolved itself and I could access all our servers again. Going through the log files, sar activity logs, etc. afterwards revealed nothing.

We have our servers co-located, and our partners there checked the switches and firewalls and couldn't see anything out of the ordinary. Network traffic seemed normal, and everything responded to ping and traceroute. However, no SSH connections worked!

These are all the facts I currently have:

At first the servers seemed "slow" to respond to SSH and FTP connections. Once connected, however, everything seemed fine. All other applications seemed to operate normally, and ping revealed nothing out of the ordinary.

Yesterday at 19:05, DNS lookups stopped working; I can see that in my application log.

For a couple of hours I tried to access our servers via SSH and could only reach 1 out of 3. Connection attempts seemed to time out, and after a minute or so I got:

$ ssh myusername@local_ip_address

Connection closed by <remote ip>

We connect via SSH using an IP address, not a domain name, so no DNS should be needed here, right? Or does the remote server perhaps do some kind of DNS lookup to verify the connecting client?

If that's the case, it's strange that we had the same issue connecting to two different servers with different DNS setups (see below).
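The suspicion is plausible: OpenSSH's sshd can look up the connecting client's address in DNS even when the client connects by IP, controlled by the `UseDNS` option in `/etc/ssh/sshd_config`. If the resolvers stop answering, that lookup delays every login while established sessions are unaffected. Below is a minimal sketch for checking the setting, assuming OpenSSH older than 6.8 (RHEL 6 ships 5.3), where `UseDNS` defaults to `yes` when it is not set. `sshd_option` is a hypothetical helper name, and it ignores `Match` blocks.

```shell
#!/bin/sh
# Sketch: print the value sshd would use for a keyword, falling back to a
# caller-supplied default when the keyword is absent or commented out.
# Simplifications: Match blocks and "Keyword=value" syntax are not handled.
sshd_option() {
    key=$1                           # keyword, e.g. UseDNS (case-insensitive)
    default=$2                       # assumed compiled-in default
    conf=${3:-/etc/ssh/sshd_config}  # config file to inspect
    # sshd keeps the first value it reads for a keyword, so stop at the first match.
    # Commented lines start with "#", so their first field never matches.
    value=$(awk -v k="$key" 'tolower($1) == tolower(k) { print $2; exit }' "$conf")
    echo "${value:-$default}"
}
```

On the affected servers, `sshd_option UseDNS yes` printing `yes` would mean every login waits on a reverse lookup of the client address. Setting `UseDNS no` and reloading sshd is one way to test that theory the next time DNS misbehaves.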

Pinging the other servers worked without problems, though. I contacted our co-locator, who manages all the equipment (servers, switches and firewalls). They didn't see anything out of the ordinary, apart from the fact that they couldn't connect via SSH either. Ping, network metrics, etc. all looked fine.

Then, after an hour or so, I could once again connect via SSH to both servers that previously hadn't responded. Logging in to them and checking system statistics, log files, etc. revealed nothing at all!

Now what?

I'm in the dark here: where should I look next? I want to know what happened so we can make sure it doesn't happen again!

If you need more information, just ask and I will provide as much as I can! I've focused on the DNS setup below because that's my only idea so far...

Server setup

This is what our DNS setup looks like on 2 out of 3 servers:

$ more /etc/resolv.conf

nameserver intentionally_changed_server_ip_1

nameserver intentionally_changed_server_ip_2

options rotate

These DNS servers are not managed by us but by our co-locator. I've asked them whether they had any DNS trouble yesterday but haven't had an answer yet. I'll update as soon as I know!
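Note that `options rotate` only spreads queries across the listed servers; it does not shorten the wait when they stop answering. The glibc resolver waits `timeout` seconds per server (default 5) and makes `attempts` passes over the list (default 2), so if both co-locator servers went silent, a single lookup could block for roughly 5 × 2 × 2 = 20 seconds before failing. The sketch below computes that rough upper bound from a resolv.conf file; it is an estimate under those documented defaults, not a measurement, and `resolver_worst_case_wait` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: rough upper bound (seconds) on how long one lookup can block when no
# listed nameserver answers: timeout * attempts * number_of_nameservers.
# Assumes glibc's defaults (timeout:5, attempts:2) and its 3-server limit (MAXNS).
resolver_worst_case_wait() {
    awk '
        $1 == "nameserver" { ns++ }
        $1 == "options" {
            for (i = 2; i <= NF; i++) {
                split($i, kv, ":")
                if (kv[1] == "timeout")  timeout  = kv[2]
                if (kv[1] == "attempts") attempts = kv[2]
            }
        }
        END {
            if (timeout == "")  timeout  = 5   # glibc default RES_TIMEOUT
            if (attempts == "") attempts = 2   # glibc default RES_DFLRETRY
            if (ns > 3) ns = 3                 # servers beyond MAXNS are ignored
            print timeout * attempts * ns
        }
    ' "${1:-/etc/resolv.conf}"
}
```

For the two-nameserver file above this prints 20; for a single-nameserver file it prints 10. Adding `timeout:1` to the `options` line would cut the two-server figure to 4, at the risk of giving up on a server that is merely slow.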

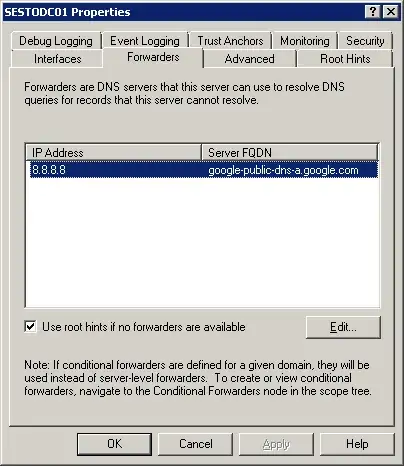

On the third server, DNS for some reason points to our own Windows domain controller:

$ more /etc/resolv.conf

nameserver intentionally_changed_server_local_ip_3

Looking at the domain controller, it in turn points to Google's DNS.

Running dig as recommended in a comment below returns the same three external nameservers, in a rotating order:

$ dig @intentionally_changed_server_ip_1 +short NS ourdomain

ns3.our-co-locators-domain.

ns5.our-co-locators-domain.

ns4.our-co-locators-domain.

$ dig @intentionally_changed_server_ip_1 +short NS ourdomain

ns4.our-co-locators-domain.

ns5.our-co-locators-domain.

ns3.our-co-locators-domain.

Same thing if I target "intentionally_changed_server_ip_2" instead!
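The `dig` results above show the resolvers answering now, which says little about what happened at 19:05. One way to catch the next occurrence is to query each resolver directly with a short timeout and log a timestamped result, so a failure can be tied to a specific nameserver. This is a minimal sketch: the addresses in the comments are documentation placeholders (the real ones were redacted above), the 2-second timeout is an arbitrary choice, and `probe`, `query_time_ms` and `response_status` are hypothetical helper names. It relies on `dig` exiting non-zero (9) when no server replies and on the header and `;; Query time:` lines it prints by default.

```shell
#!/bin/sh
# Sketch: time one DNS query against a specific nameserver and log the outcome.
# Usage: probe SERVER QUERY-ARGS...  e.g. probe 192.0.2.1 ourdomain NS
#                                     or probe 192.0.2.1 -x 198.51.100.7
# (192.0.2.x / 198.51.100.x are placeholder addresses; substitute real ones.)

# Print N from dig's ";; Query time: N msec" statistics line.
query_time_ms() {
    awk '/^;; Query time:/ { print $4; exit }'
}

# Print the response code (NOERROR, SERVFAIL, NXDOMAIN, ...) from dig's header line.
response_status() {
    awk '/->>HEADER<<-/ {
        for (i = 1; i < NF; i++)
            if ($i == "status:") { s = $(i + 1); sub(",", "", s); print s; exit }
    }'
}

probe() {
    server=$1
    shift
    # A single try with a 2 s timeout, so an unresponsive server fails fast.
    out=$(dig @"$server" +time=2 +tries=1 "$@" 2>&1)
    rc=$?
    stamp=$(date '+%Y-%m-%d %H:%M:%S')
    if [ "$rc" -eq 0 ]; then
        # Exit status 0 only means a response arrived; report its status too.
        rcode=$(printf '%s\n' "$out" | response_status)
        ms=$(printf '%s\n' "$out" | query_time_ms)
        echo "$stamp $server $* $rcode ${ms}ms"
    else
        echo "$stamp $server $* FAILED (dig exit status $rc)"
    fi
}
```

Calling `probe` from cron once a minute for each resolver, including a reverse (`-x`) lookup of the address you SSH from, would give a log to line up against the 19:05 entry in the application log.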

Server data

All servers are HP DL380 G7 servers running RHEL 6:

$ more /etc/redhat-release

Red Hat Enterprise Linux Server release 6.8 (Santiago)