We have a DL180 G6 Server with a P410 RAID Card. The server has the following three RAID arrays.

4x2TB - RAID 10

4x2TB - RAID 10

2x2TB - RAID 1

2x2TB HD's are configured as hot spares for the three arrays.

Following is the relevant output from ESXCLI

Smart Array P410 in Slot 1

Bus Interface: PCI

Slot: 1

Serial Number: PACCR9VYJKGQ

Cache Serial Number: PAAVP9VYJCYN

RAID 6 (ADG) Status: Enabled

Controller Status: OK

Hardware Revision: C

Firmware Version: 2.72

Rebuild Priority: Medium

Expand Priority: Medium

Surface Scan Delay: 15 secs

Surface Scan Mode: Idle

Parallel Surface Scan Supported: No

Queue Depth: Automatic

Monitor and Performance Delay: 60 min

Elevator Sort: Enabled

Degraded Performance Optimization: Disabled

Inconsistency Repair Policy: Disabled

Wait for Cache Room: Disabled

Surface Analysis Inconsistency Notification: Disabled

Post Prompt Timeout: 0 secs

Cache Board Present: True

Cache Status: OK

Cache Ratio: 25% Read / 75% Write

Drive Write Cache: Disabled

Total Cache Size: 512 MB

Total Cache Memory Available: 400 MB

No-Battery Write Cache: Disabled

Cache Backup Power Source: Batteries

Battery/Capacitor Count: 1

Battery/Capacitor Status: OK

SATA NCQ Supported: True

Number of Ports: 2 Internal only

Driver Name: HP HPSA

Driver Version: 6.0.0

PCI Address (Domain:Bus:Device.Function): 0000:06:00.0

Host Serial Number: USE626N2XD

Sanitize Erase Supported: False

Primary Boot Volume: None

Secondary Boot Volume: None

Secondary Boot Volume: None

array A (SATA, Unused Space: 0 MB)

logicaldrive 1 (3.6 TB, RAID 1+0, OK)

physicaldrive 1I:1:9 (port 1I:box 1:bay 9, SATA, 2 TB, OK)

physicaldrive 1I:1:10 (port 1I:box 1:bay 10, SATA, 2 TB, OK)

physicaldrive 1I:1:11 (port 1I:box 1:bay 11, SATA, 2 TB, OK)

physicaldrive 1I:1:12 (port 1I:box 1:bay 12, SATA, 2 TB, OK)

physicaldrive 1I:1:5 (port 1I:box 1:bay 5, SATA, 2 TB, OK, spare)

physicaldrive 1I:1:6 (port 1I:box 1:bay 6, SATA, 2 TB, OK, spare)

array B (SATA, Unused Space: 0 MB)

logicaldrive 2 (3.6 TB, RAID 1+0, OK)

physicaldrive 1I:1:1 (port 1I:box 1:bay 1, SATA, 2 TB, OK)

physicaldrive 1I:1:2 (port 1I:box 1:bay 2, SATA, 2 TB, OK)

physicaldrive 1I:1:3 (port 1I:box 1:bay 3, SATA, 2 TB, OK)

physicaldrive 1I:1:4 (port 1I:box 1:bay 4, SATA, 2 TB, OK)

physicaldrive 1I:1:5 (port 1I:box 1:bay 5, SATA, 2 TB, OK, spare)

physicaldrive 1I:1:6 (port 1I:box 1:bay 6, SATA, 2 TB, OK, spare)

array C (SATA, Unused Space: 0 MB)

logicaldrive 3 (1.8 TB, RAID 1, OK)

physicaldrive 1I:1:7 (port 1I:box 1:bay 7, SATA, 2 TB, OK)

physicaldrive 1I:1:8 (port 1I:box 1:bay 8, SATA, 2 TB, OK)

physicaldrive 1I:1:5 (port 1I:box 1:bay 5, SATA, 2 TB, OK, spare)

physicaldrive 1I:1:6 (port 1I:box 1:bay 6, SATA, 2 TB, OK, spare)

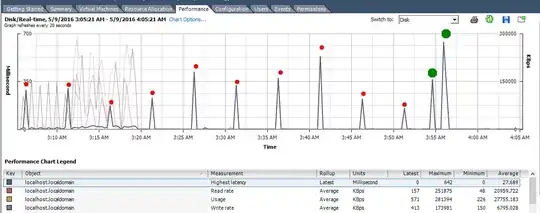

Now in ESXI we are getting the following errors from time to time.

Lost access to volume 5456cb3e-4fbdb59c-a37a- d8d385644ec0 (datastore2) due to connectivity issues. Recovery attempt is in progress

Keep in mind that it is affecting all the three arrays at the same exact time and in a few seconds all three arrays recover. As per understanding, all of the drives are attached to one single port on the P410 RAID card. Do you think that using both ports could improve performance and potentially remove this recurring issue?

We have tried all software solutions at this point including updating the firmware(updated to 6.64). What can be the other options?

Update 1

The two spare drives were configured as spares for all the three arrays as described above. I removed the spares from all the arrays for about 15 minutes and the errors stopped. Now I have configured the first spare for the first array and the second for the second array to see if the error appears again.

Update 2

Reattaching the spares has caused the error to return and it is affecting all the three arrays. So i am removing the spares one by one to further troubleshoot this issue. This is probably a known issue described here: http://community.hpe.com/t5/ProLiant-Servers-ML-DL-SL/ESXi5x-HPSA-P410i-WARNING-LinScsi-SCSILinuxAbortCommands-1843/td-p/6818369. Fingers crossed.