Server Question: I have been working on increasing the speed of the website https://www.winni.in and have come across a very strange phenomenon. If anyone can explain it, please do.

I was comparing the load times of Winni and Snapdeal and found that Winni takes 450ms on average in connect time, while Snapdeal takes just 30ms on average, although both websites are hosted in the Singapore region of AWS. I assumed this connect time is due to latency, because if I access Winni from India or Australia it falls to around 150ms or less, but Snapdeal (www.snapdeal.com) is consistently below 30ms no matter which geographic location it is accessed from.
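To cross-check these numbers outside of Pingdom, the TCP connect time can also be measured directly from any machine. The following is only a minimal sketch, assuming that Pingdom's "Connect" figure roughly corresponds to the time taken by the TCP handshake; the function name `measure_connect_ms` and its parameters are made up for illustration and are not part of any tool mentioned here.

```python
import socket
import time


def measure_connect_ms(host, port=443, samples=5, timeout=5.0):
    """Return a list of TCP connect times in milliseconds.

    Hypothetical helper: the DNS lookup is done once up front, so only the
    TCP handshake itself is timed.
    """
    # Resolve the hostname first so DNS time is not counted as connect time.
    family, socktype, proto, _, addr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM
    )[0]

    timings = []
    for _ in range(samples):
        with socket.socket(family, socktype, proto) as sock:
            sock.settimeout(timeout)
            start = time.perf_counter()
            sock.connect(addr)  # returns once the TCP handshake completes
            timings.append((time.perf_counter() - start) * 1000.0)
    return timings
```

Running `measure_connect_ms("www.winni.in")` and `measure_connect_ms("www.snapdeal.com")` from the same machine and comparing the medians would show whether the gap seen in Pingdom also appears from that location. The address each hostname resolves to is worth noting as well, since a connect time far below the expected round trip to Singapore would suggest the connection is being terminated somewhere closer to the client.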

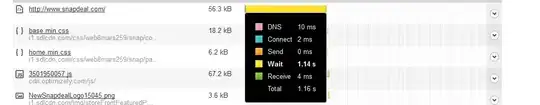

I am attaching screenshots taken from Pingdom for both Winni and Snapdeal, showing the connect time when tested from New York. Is there anything in AWS that we are missing which could reduce the connect time, or is it caused by a server configuration issue?

Winni's server stack is:

- EC2 - Singapore region, SSD drive, 2-core CPU

- Nginx

- Tomcat