We have inherited a system with a central server that handles queuing operations. Redis was chosen as the queuing agent.

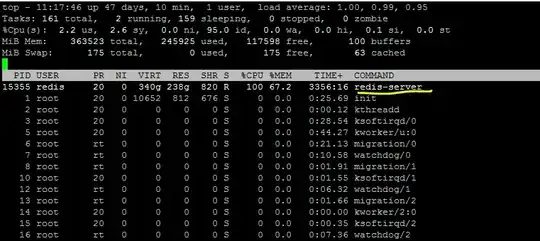

Every 2 to 3 days or so, the Redis service's CPU usage climbs above 100%.
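A reading above 100% means more than one core's worth of CPU time is being spent, so more than one thread is busy at once. Redis executes commands on a single main thread but also runs background threads. If the figure covers the whole service, a forked child process such as a background save may also be counted. One way to see which thread is responsible during a spike is to sample per-thread CPU time from `/proc`. The sketch below assumes Linux and a readable `/proc/<pid>/task/*/stat`; `thread_cpu_percent` is a hypothetical helper, not part of Redis or any library.

```python
import os
import time


def _task_ticks(pid: int) -> dict:
    """Map thread id -> (thread name, utime + stime in clock ticks) for one process."""
    ticks = {}
    task_dir = f"/proc/{pid}/task"
    for tid in os.listdir(task_dir):
        try:
            with open(f"{task_dir}/{tid}/stat") as f:
                data = f.read()
        except (FileNotFoundError, ProcessLookupError):
            continue  # thread exited between listdir() and open()
        # The thread name sits in parentheses and may contain spaces,
        # so split on the LAST ')' rather than on whitespace.
        name = data[data.index("(") + 1 : data.rindex(")")]
        fields = data[data.rindex(")") + 2 :].split()
        # fields[0] is stat field 3 (state); utime/stime are fields 14/15.
        utime, stime = int(fields[11]), int(fields[12])
        ticks[int(tid)] = (name, utime + stime)
    return ticks


def thread_cpu_percent(pid: int, interval: float = 1.0) -> dict:
    """Return {tid: (name, percent)} where 100.0 means one full CPU core."""
    hz = os.sysconf("SC_CLK_TCK")  # clock ticks per second
    before = _task_ticks(pid)
    time.sleep(interval)
    after = _task_ticks(pid)
    usage = {}
    for tid, (name, total) in after.items():
        # A thread that appeared during the interval has no baseline: report 0.
        start = before.get(tid, (name, total))[1]
        usage[tid] = (name, 100.0 * (total - start) / hz / interval)
    return usage
```

Run it with the PID reported by `pidof redis-server` while a spike is happening and compare the thread names to see whether the load is on the main thread or on background threads. A forked child has its own PID, so it would need to be sampled separately.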

I tried reading the log to find the cause:

`tail /var/log/redis/redis-server.log`

But it returns nothing.
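An empty `tail` can simply mean Redis is not writing to that file. In `redis.conf`, the `logfile` directive sets the destination, and an empty string (`logfile ""`) sends log output to standard output. Under systemd that output usually ends up in the journal (`journalctl -u redis-server`, assuming that unit name), while a daemonized Redis logging to standard output sends it to `/dev/null`. The sketch below is a hypothetical helper, `log_destination`, that reports the effective `logfile` setting from the config text. It assumes later directives override earlier ones and does not follow `include` files.

```python
import shlex


def log_destination(conf_text: str) -> str:
    """Return the effective `logfile` value from redis.conf text.

    An empty string means Redis logs to standard output. Later directives
    override earlier ones; `include` files are NOT followed (simplification).
    """
    logfile = ""  # Redis's built-in default: log to standard output
    for raw in conf_text.splitlines():
        line = raw.strip()
        # Only tokenize candidate lines; comment lines start with '#'.
        if not line.lower().startswith("logfile"):
            continue
        parts = shlex.split(line)  # handles the quoted empty string: logfile ""
        if parts[0].lower() == "logfile":
            logfile = parts[1] if len(parts) > 1 else ""
    return logfile
```

For example, `log_destination(open("/etc/redis/redis.conf").read())`, where the config path is an assumption based on the Debian/Ubuntu package layout.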

I found this article, which proposes some troubleshooting steps, but Redis does not respond to my commands.
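When Redis stops responding, it helps to know whether the connection is refused, accepted but never answered, or answered with an error. The sketch below sends a raw `PING` in the RESP protocol with a timeout so the check itself cannot hang. The host, the port 6379 (the Redis default) and the helper name `redis_ping` are assumptions.

```python
import socket


def redis_ping(host: str = "127.0.0.1", port: int = 6379, timeout: float = 2.0):
    """Send PING as a RESP array; return the reply line, or None if there is none.

    None covers a refused connection as well as no reply within `timeout`.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(b"*1\r\n$4\r\nPING\r\n")  # RESP encoding of: PING
            reply = b""
            while not reply.endswith(b"\r\n"):
                chunk = sock.recv(64)  # raises socket.timeout after `timeout` s
                if not chunk:
                    break  # server closed the connection
                reply += chunk
            return reply.decode(errors="replace").strip()
    except OSError:  # includes ConnectionRefusedError and socket.timeout
        return None
```

A `None` result means no connection or no reply within the timeout. A reply starting with `-` is a Redis error (for example `-BUSY` while a long Lua script runs, or `-LOADING` while the dataset is being loaded), which could explain why other commands appear to hang.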

I'm stuck at this point: I don't know what to do next or how to find the problem. Also, is there a way to limit how much CPU Redis uses?
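On a systemd-managed install, one way to cap CPU is the `CPUQuota=` setting, where `100%` equals one full core. Throttling Redis this way only makes it slower to serve clients during a spike; it does not remove the cause. The sketch below just builds the drop-in text; the helper name `cpu_quota_dropin` is hypothetical, and the unit name `redis-server.service` is an assumption based on the Debian/Ubuntu package.

```python
def cpu_quota_dropin(percent: int) -> str:
    """Build a systemd drop-in that caps a service's CPU time.

    CPUQuota=100% is one full core; values above 100% allow more than one core.
    """
    if percent <= 0:
        raise ValueError("percent must be positive")
    return f"[Service]\nCPUQuota={percent}%\n"
```

Saved as, say, `/etc/systemd/system/redis-server.service.d/cpu-limit.conf` (a hypothetical filename), it takes effect after `systemctl daemon-reload` and a restart of the service. `systemctl set-property redis-server.service CPUQuota=80%` applies the same setting without editing files by hand.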